Create new dialogs with Bot Framework Composer

July 22, 2021

This post shows how to create your own custom dialogs with Bot Framework Composer.

Dialogs provide a way to manage a long-running conversation with the user. A dialog performs a task that can represent part of or a complete conversational thread. It can span just one turn or many, and can span a short or long period of time.

Create

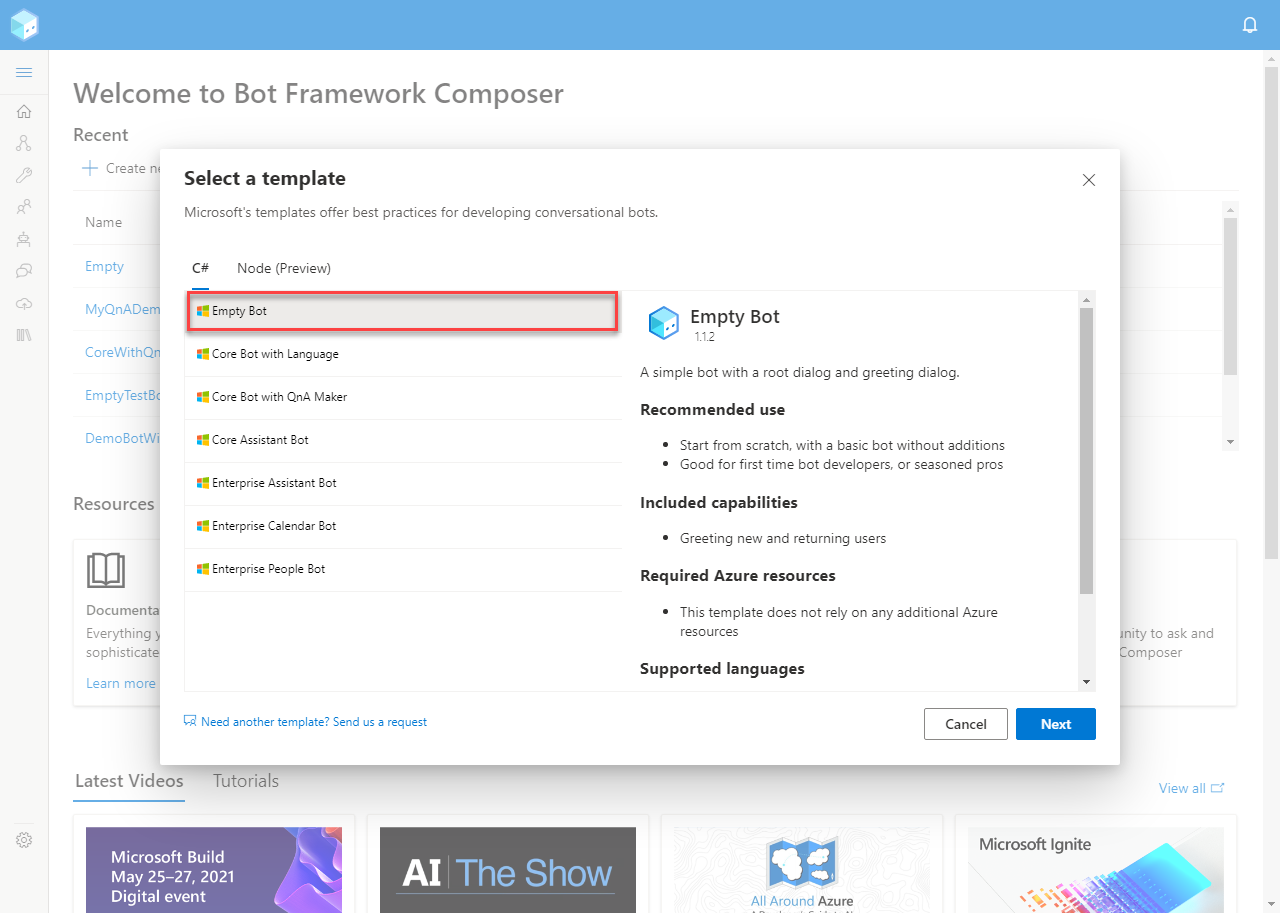

Open up Bot Framework Composer and create an Empty Bot.

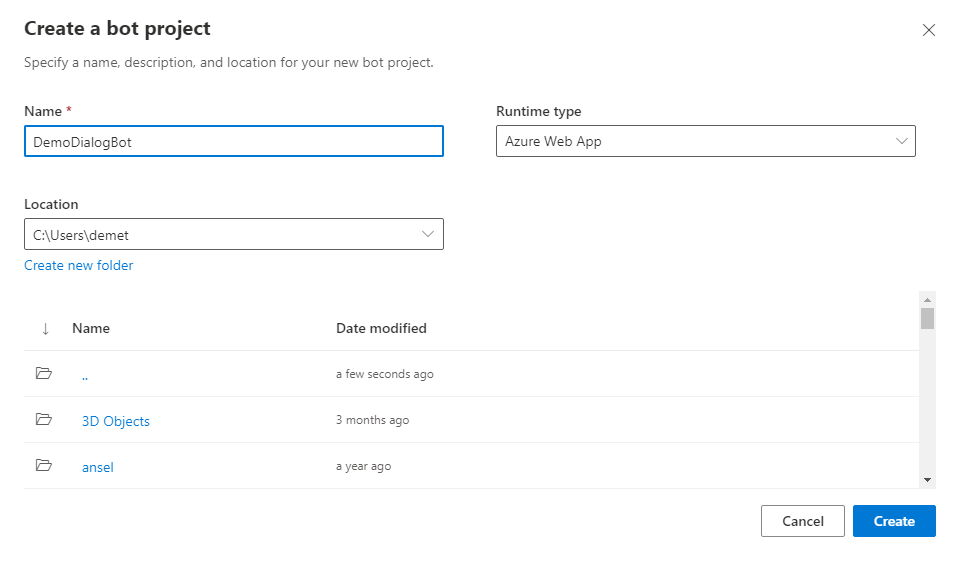

Give a name to your bot and click Next.

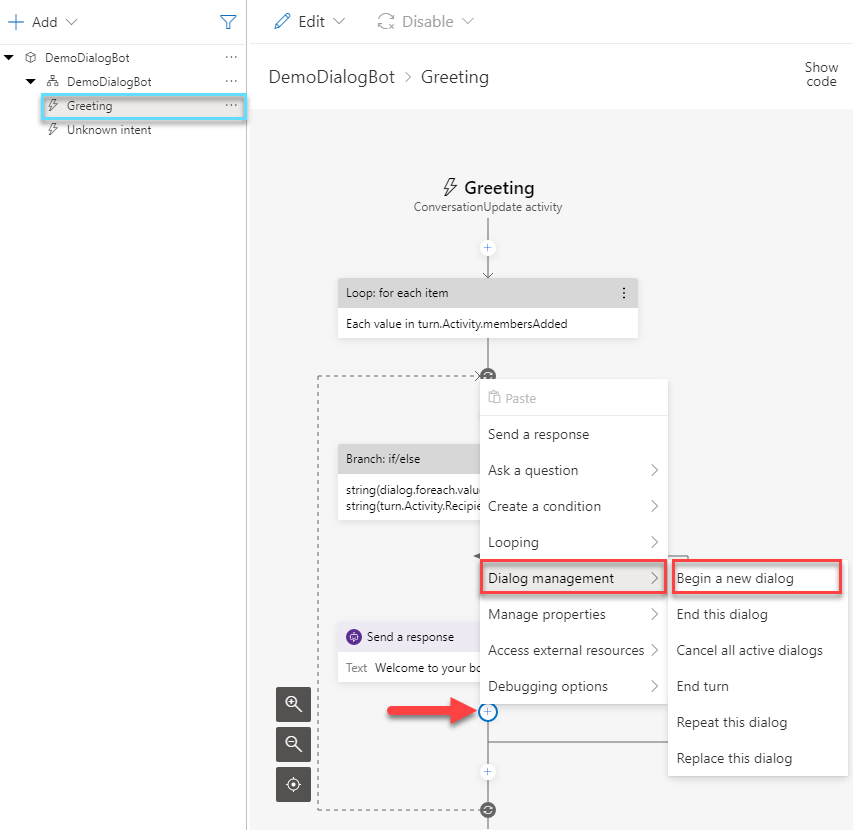

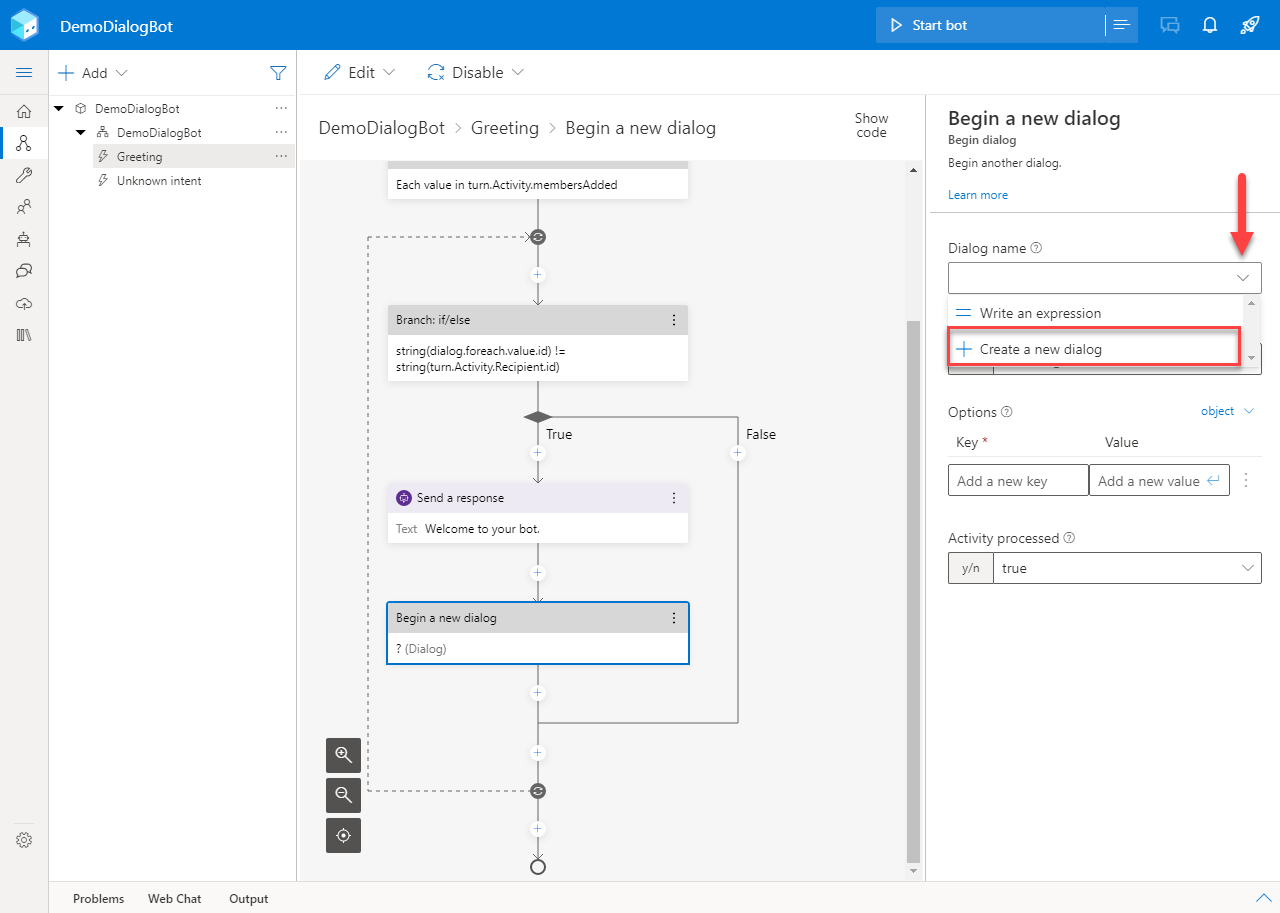

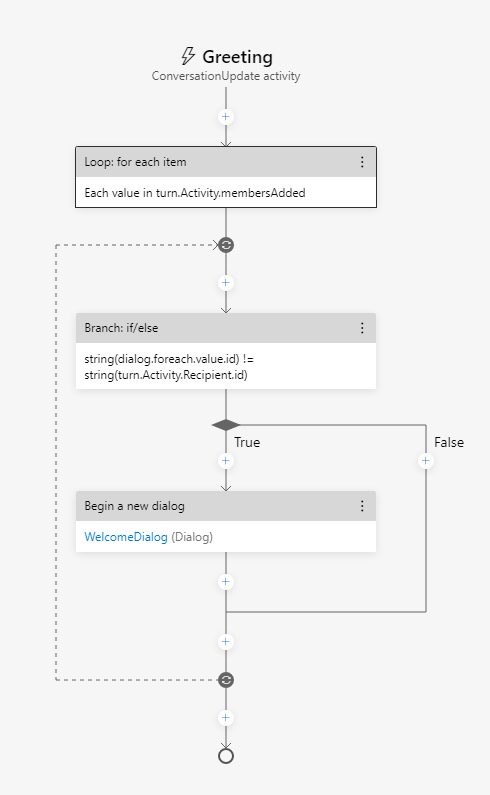

In the Greeting trigger, click the small “+” sign below the last response. Then choose the Begin a new dialog option from Dialog management.

Press the arrow in the Dialog name field and click Create new dialog.

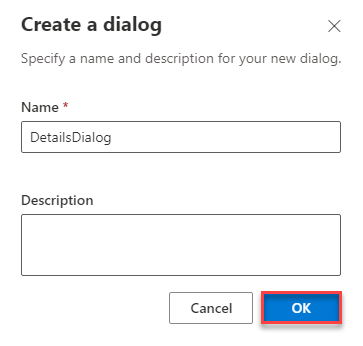

Give a name to your dialog and click OK.

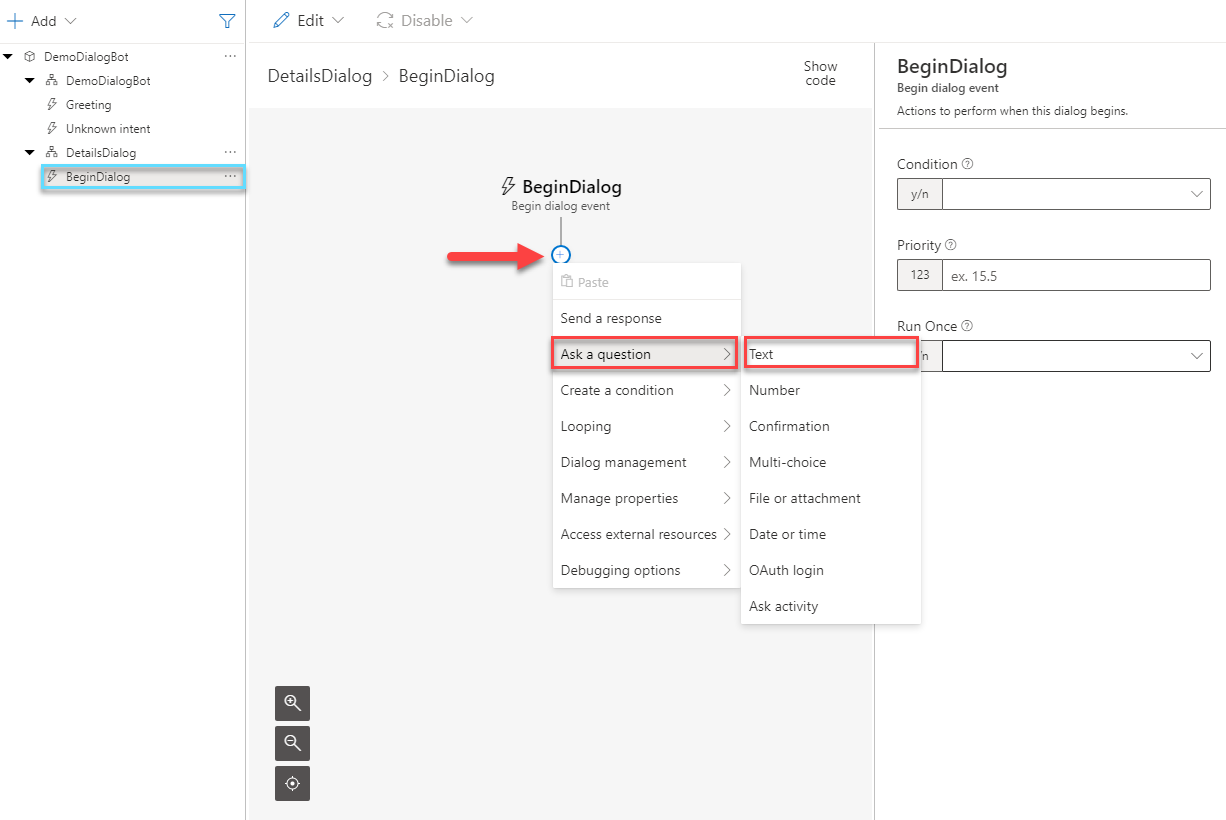

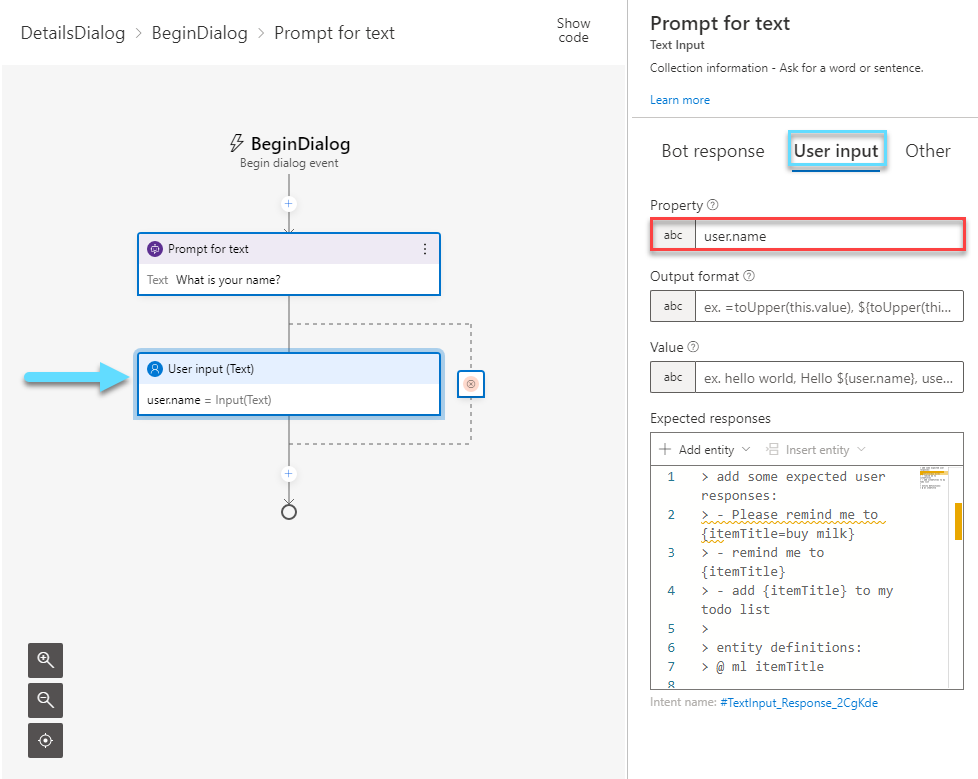

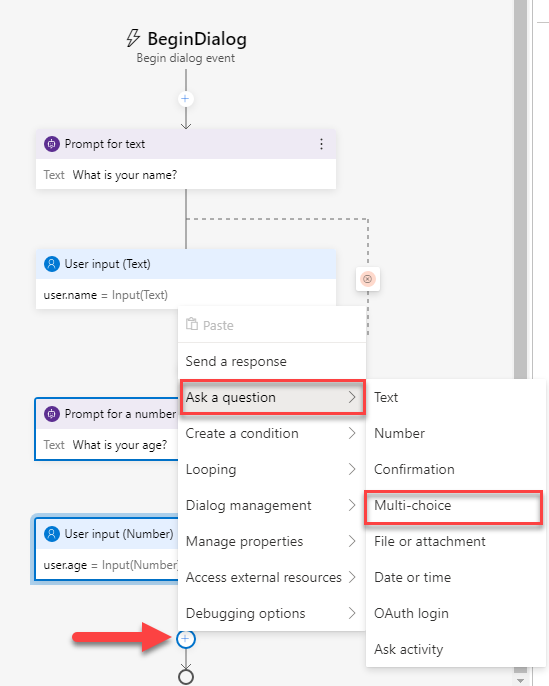

In your new dialog, navigate to the new trigger. Press the “+” sign and select Text from the Ask a question list.

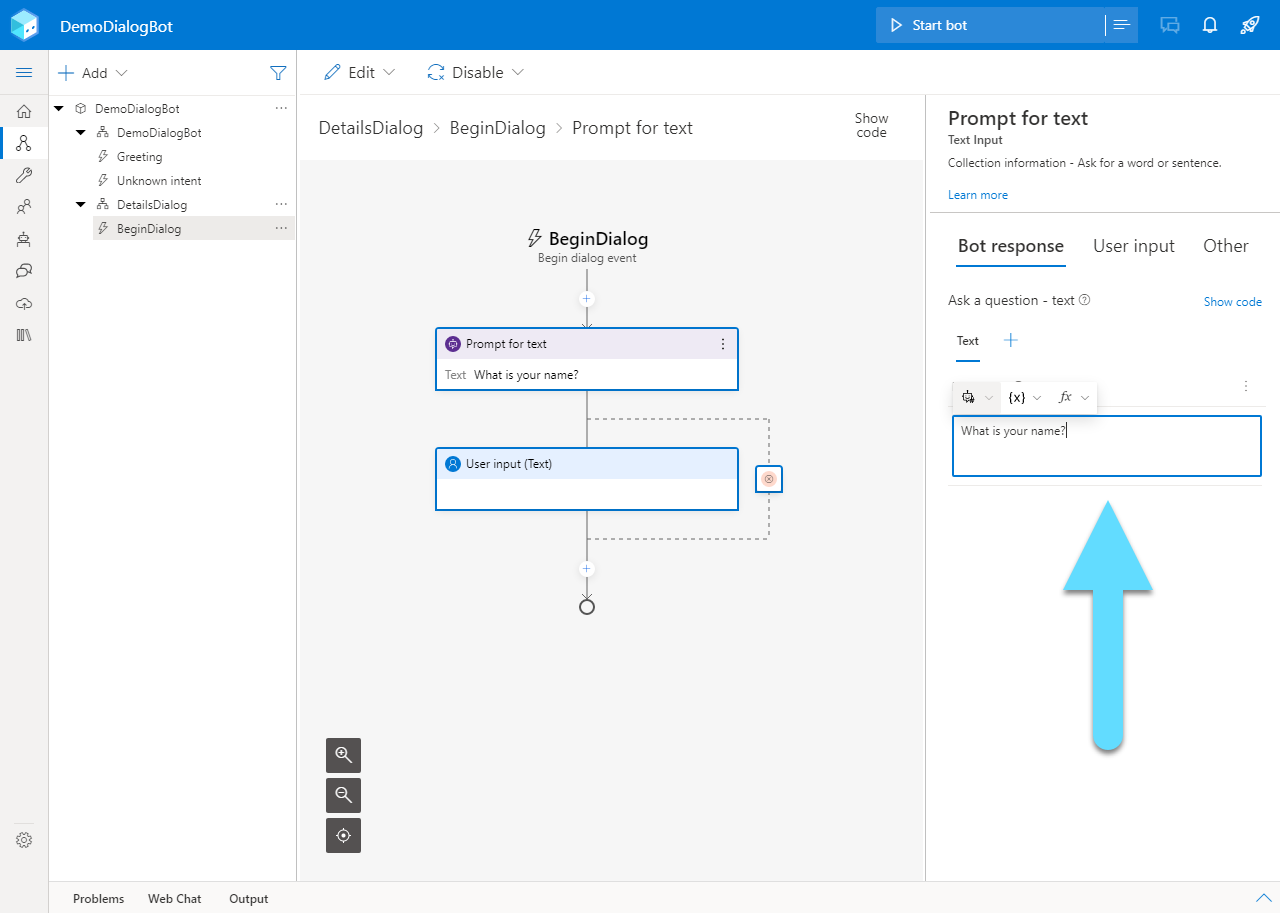

Here you can enter the contents of your new question.

In the Property field of the new User Input, enter the name of the variable that stores the user’s answer, for example “user.name”.

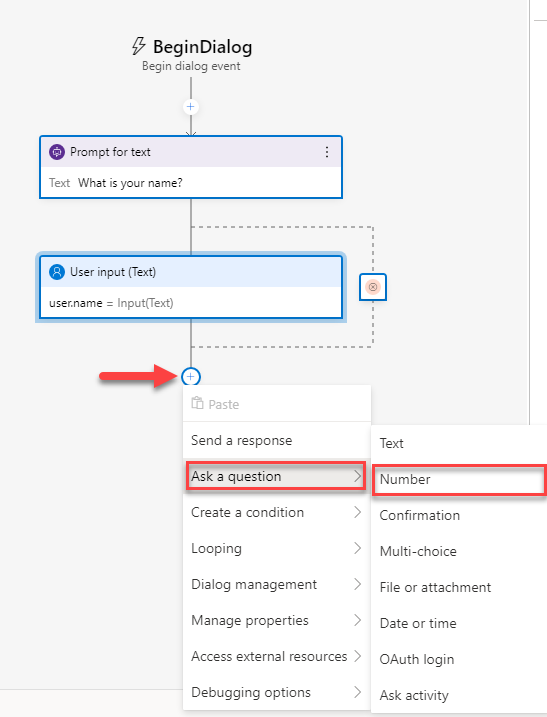

Continue with asking a Number question.

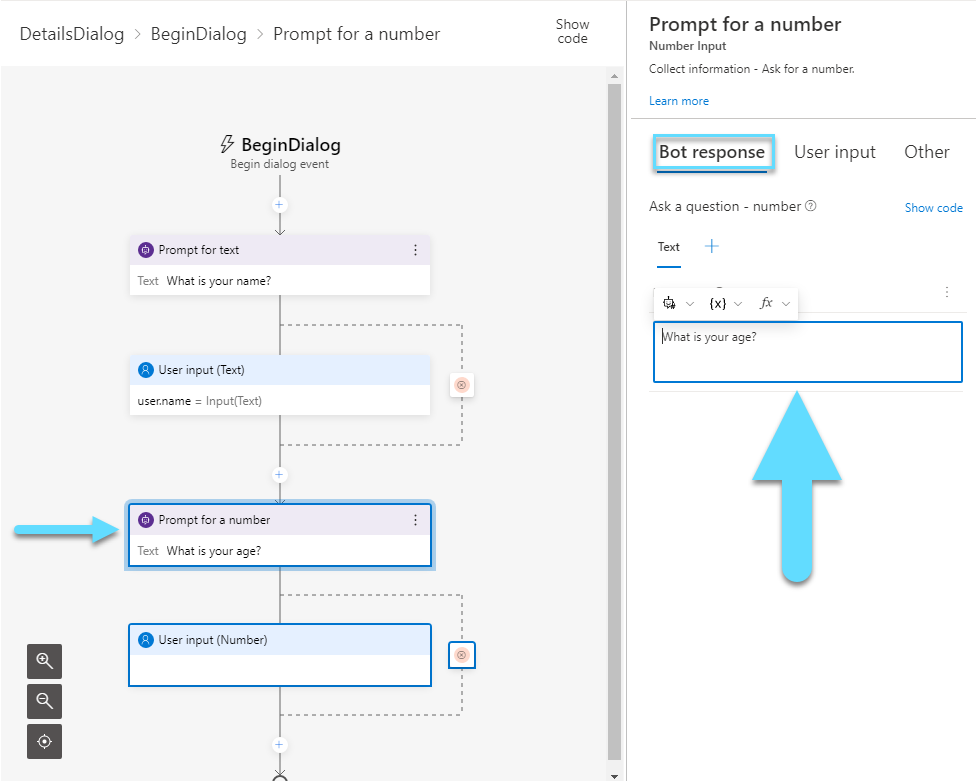

Enter a question that can be answered with a number.

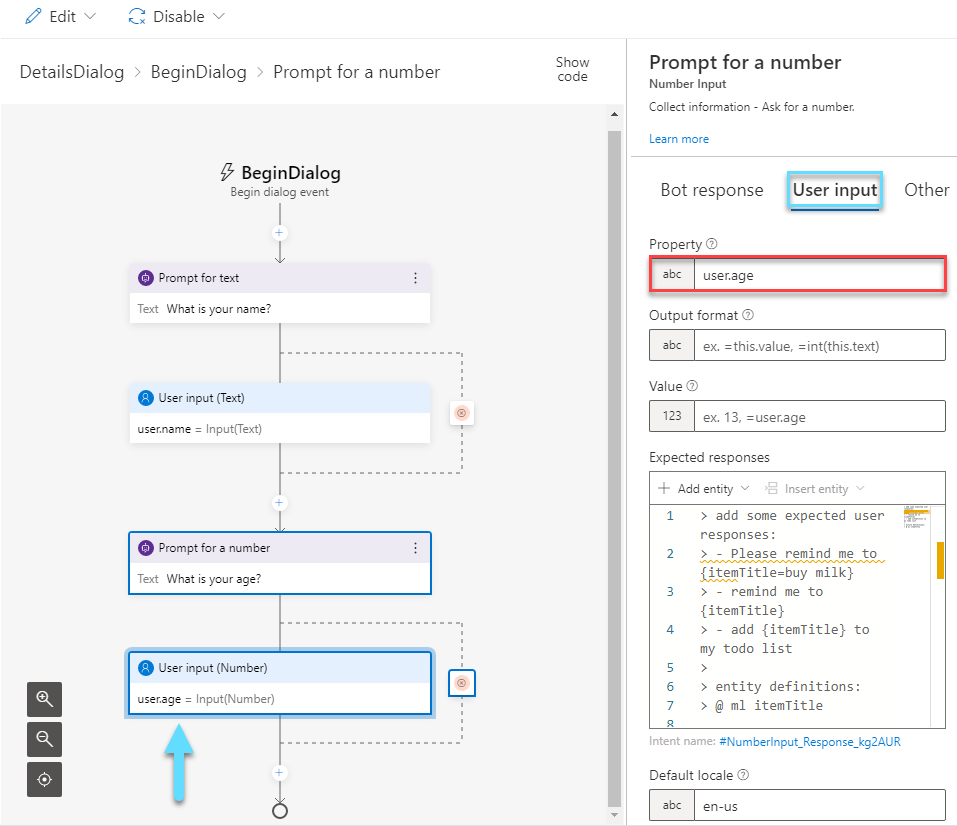

In the Property field of the User Input, name the variable again, for example “user.age”.

Now add a Multi-choice question.

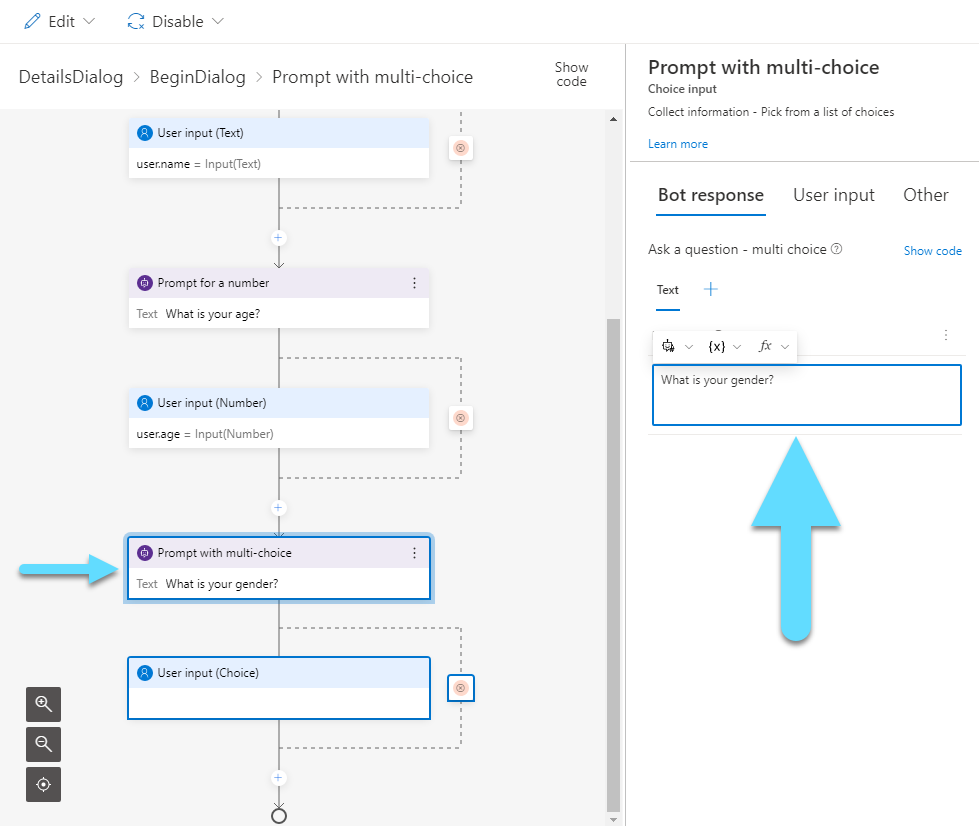

Enter the text for your question.

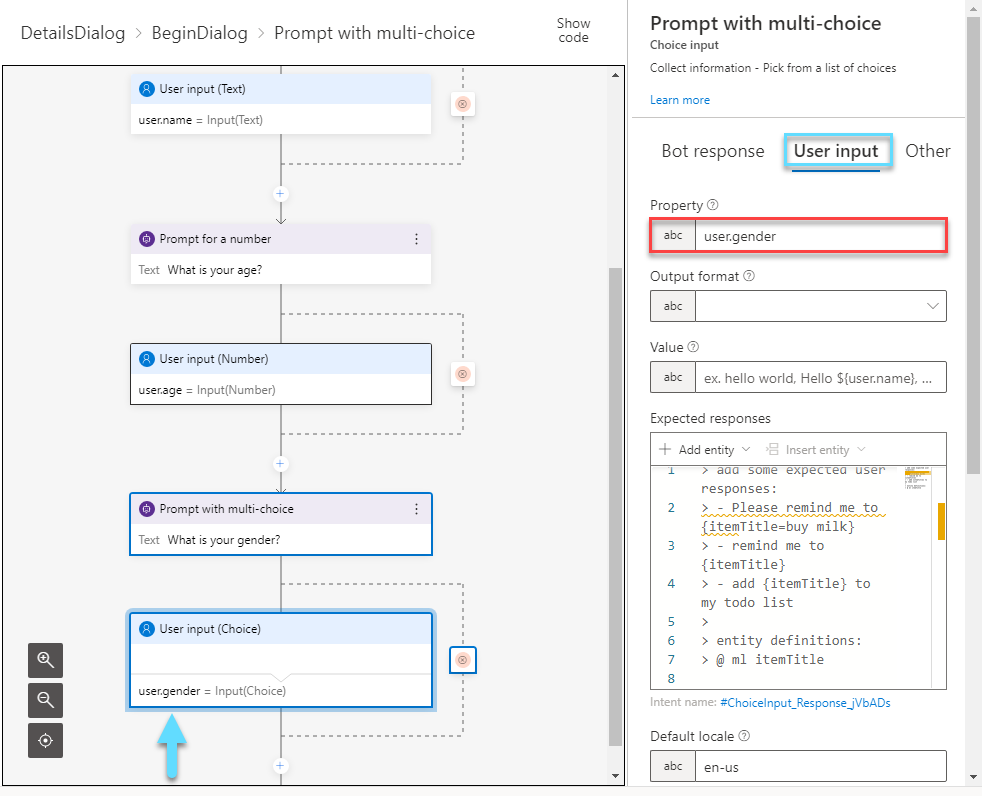

And again, enter the variable name in the Property field of User input, like “user.gender”.

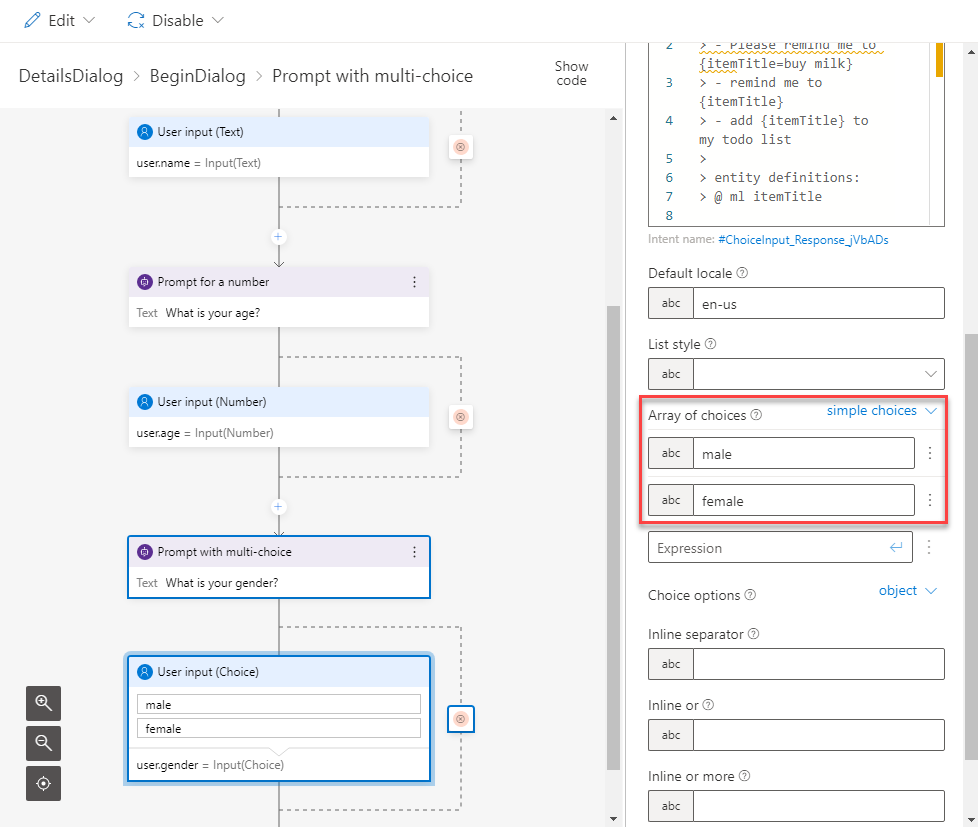

If you scroll down, you will find an Array of choices. Here you can add all the choices that will be available to the user as answers.

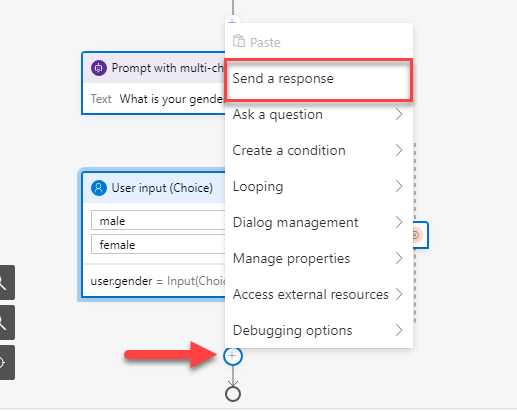

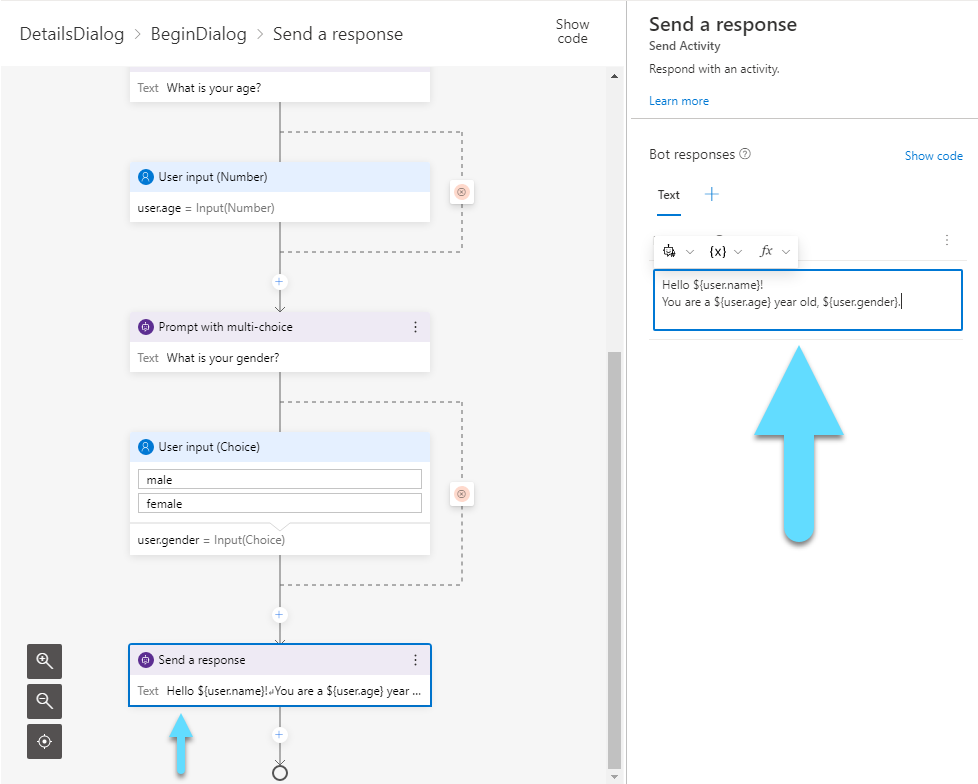

Now, let’s send a message to the user with all the info we gathered. Click the “+” and select Send a response.

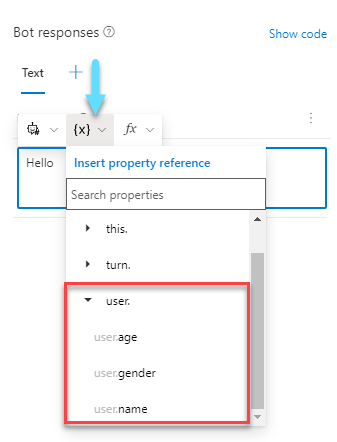

You can find all of your variables under the {x} list.

Use the variables above to compose your message.

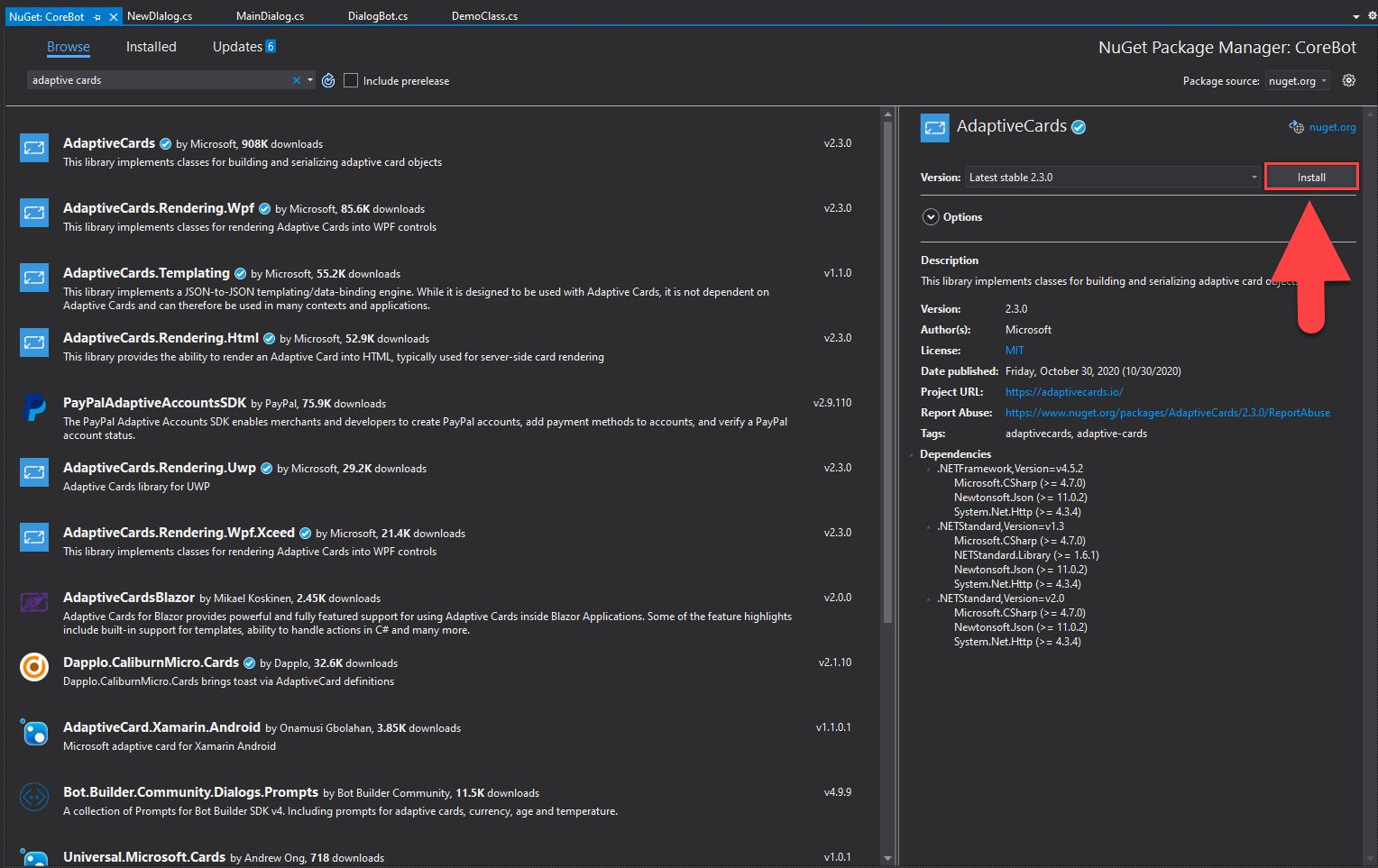

Adaptive Card

Another way of presenting information to the user is with Adaptive Cards. They look more appealing and provide a better user experience. If you want to learn more about Adaptive Cards, check out this post.

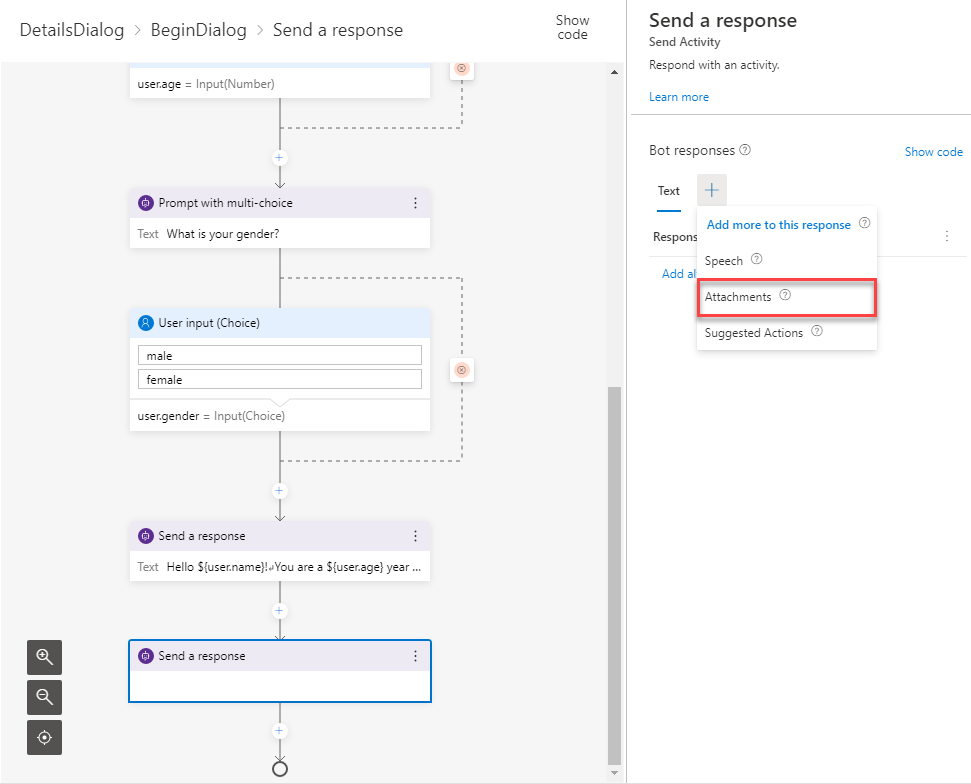

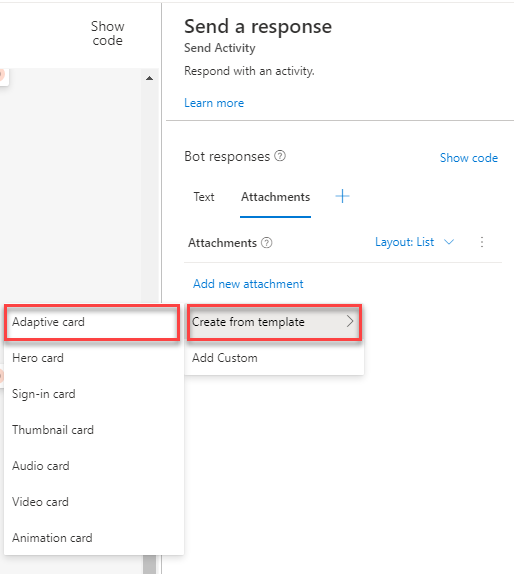

After you select a new response, click on the big “+” sign at Bot responses. From there select Attachments.

Select Add new attachment -> Create from template -> Adaptive card.

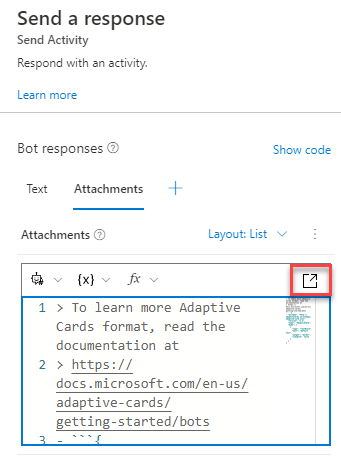

Click the icon on the right to enlarge the code box.

Replace your card’s body with the JSON below.

    "body": [
        {
            "type": "TextBlock",
            "text": "Name",
            "weight": "bolder",
            "isSubtle": false
        },
        {
            "type": "TextBlock",
            "text": "${user.name}",
            "isSubtle": false
        },
        {
            "type": "TextBlock",
            "text": "Age",
            "weight": "bolder",
            "isSubtle": false
        },
        {
            "type": "TextBlock",
            "text": "${user.age}",
            "isSubtle": false
        },
        {
            "type": "TextBlock",
            "text": "Gender",
            "weight": "bolder",
            "isSubtle": false
        },
        {
            "type": "TextBlock",
            "text": "${user.gender}",
            "isSubtle": false
        }
    ]

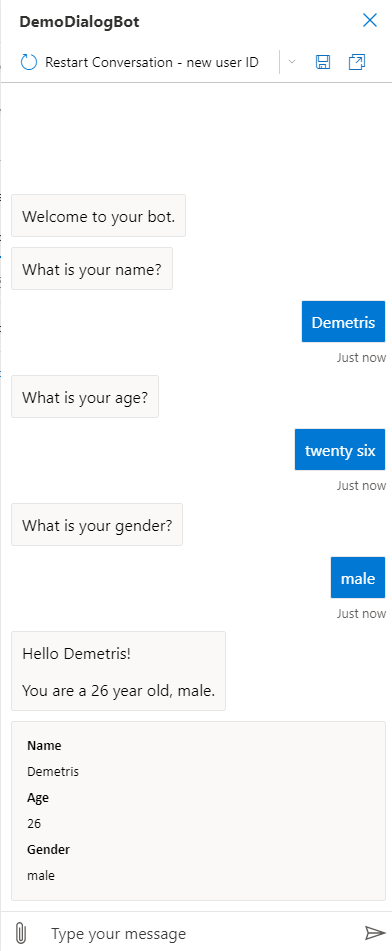

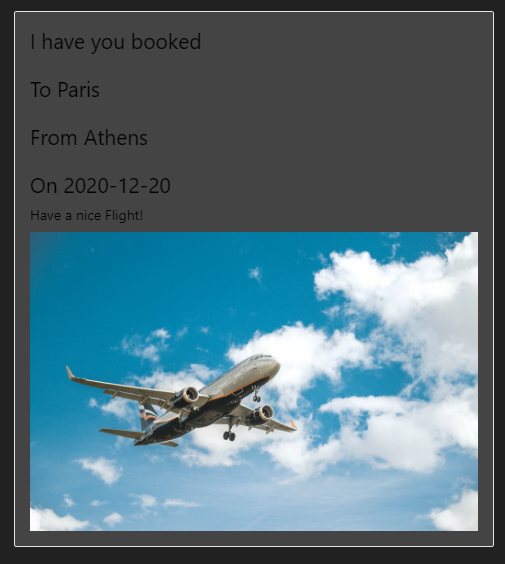

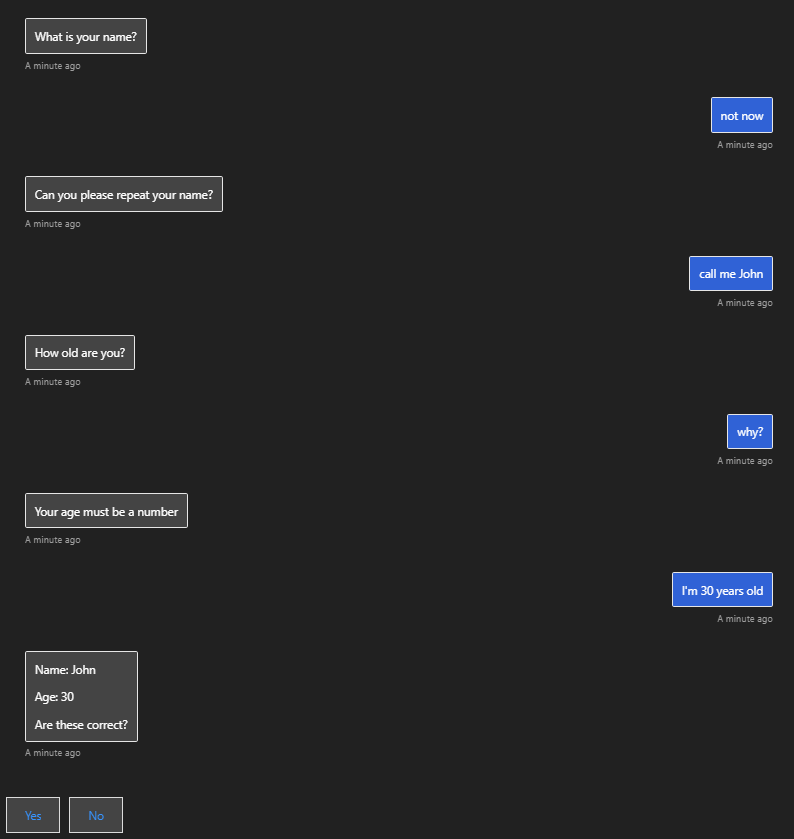

Test

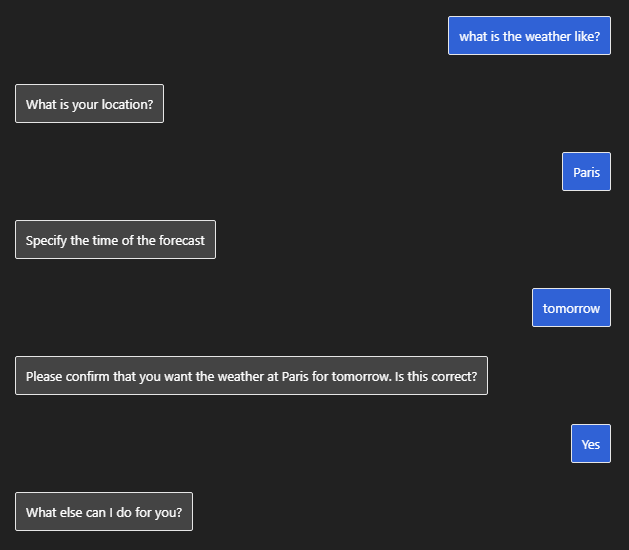

Now it is time to test our bot.

As you can see, our bot can hold the dialog pretty well and the card looks quite nice!

Integrate QnA Maker into your bot using Bot Framework Composer

July 12, 2021

QnA Maker is a cloud-based API service, part of Azure Cognitive Services, that lets you create a conversational question-and-answer layer over your existing data. It gives you the ability to build knowledge bases and extract questions and answers to incorporate in your bot.

Preface

QnA Maker offers a bot the ability to answer questions from a knowledge base. This is a subject that I have covered in the past on this blog. However, this is a new, faster, easier and code-free way to add it to your bots, so it deserves a new post. Let us get into it!

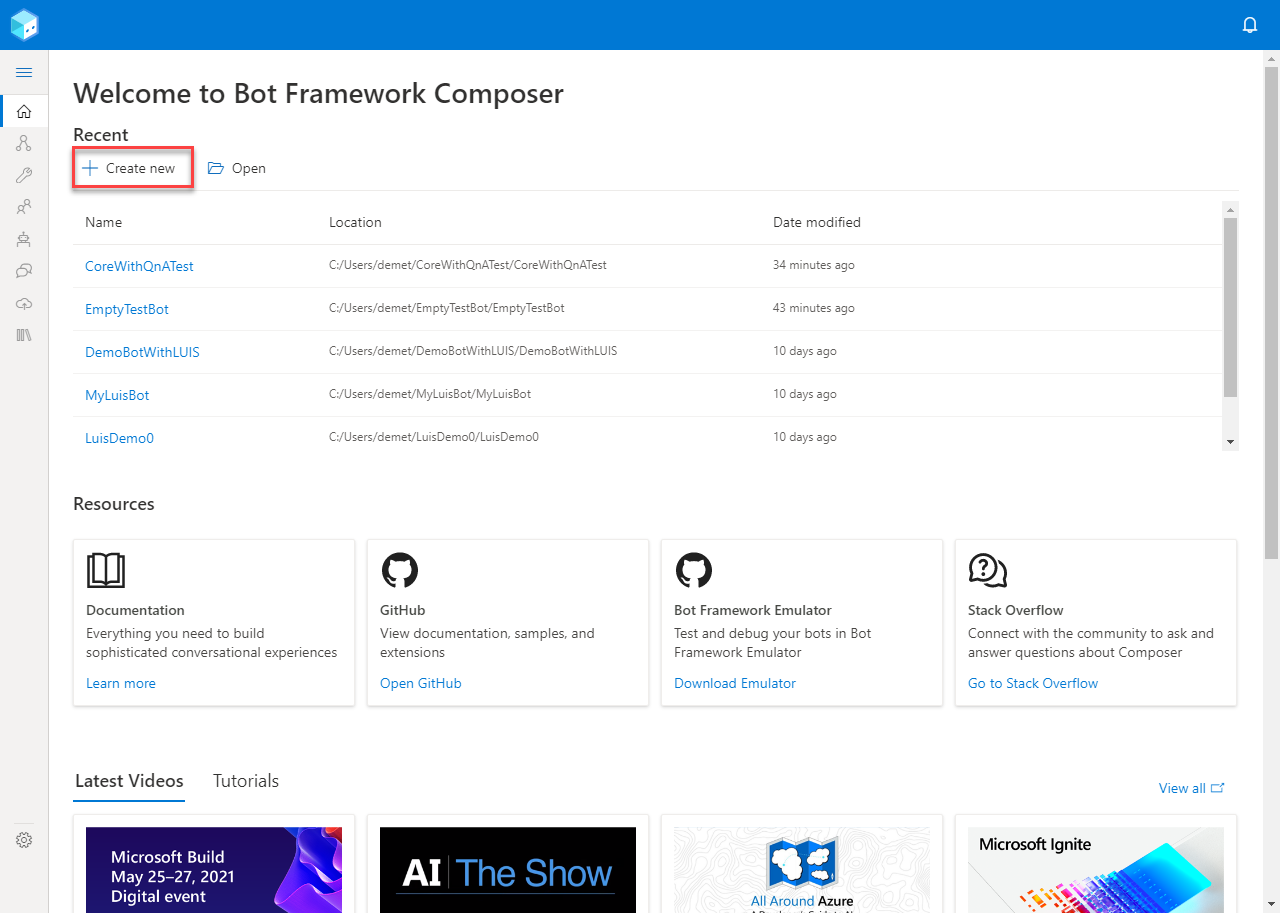

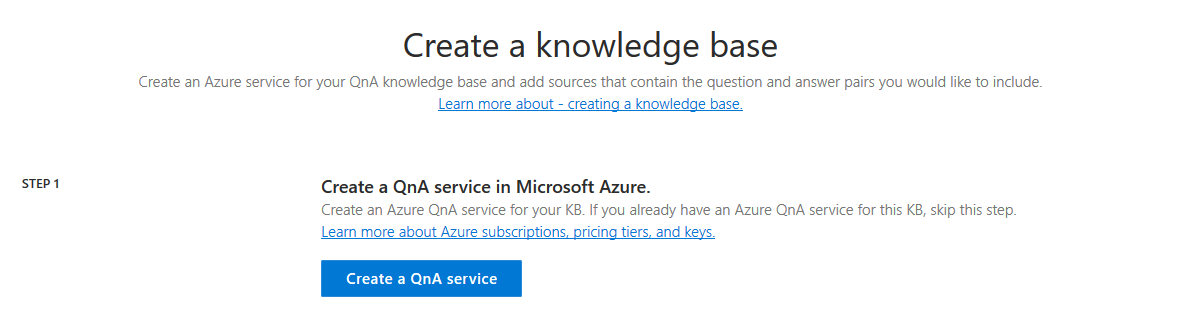

Create

Open the Bot Framework Composer and click Create new to create your new bot.

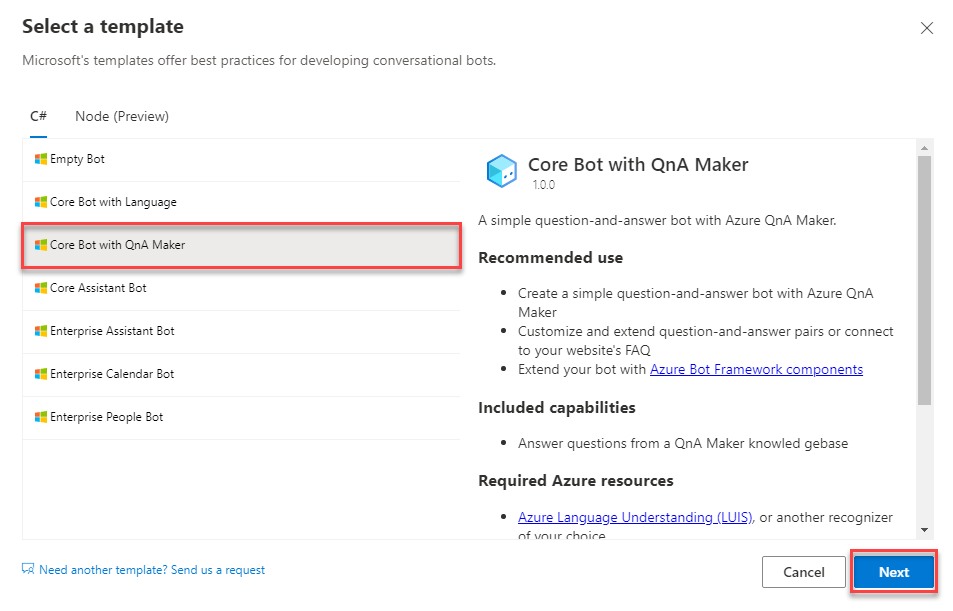

Choose the Core Bot with QnA Maker template and click Next.

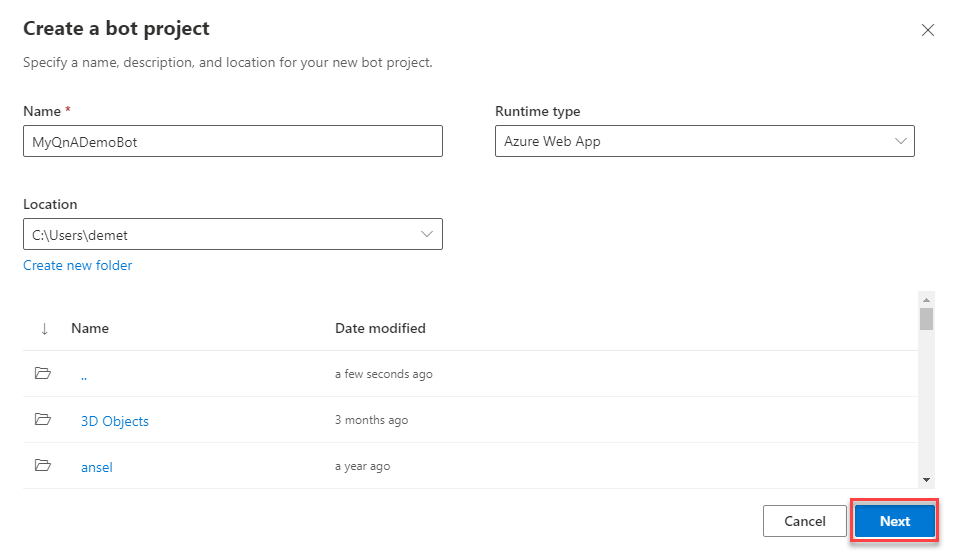

Fill in the details about your bot (including your bot’s name) and click Next.

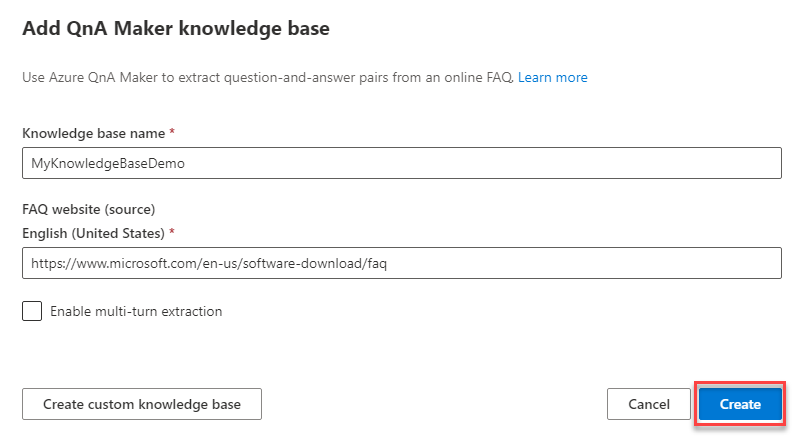

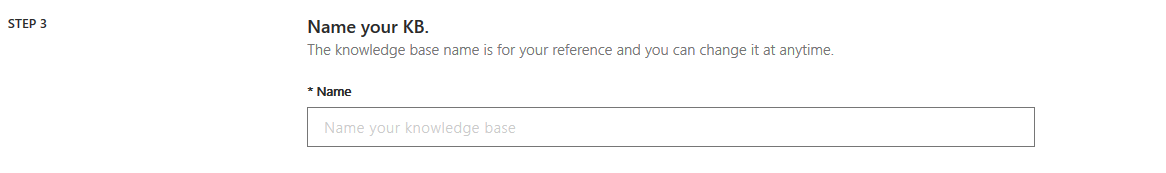

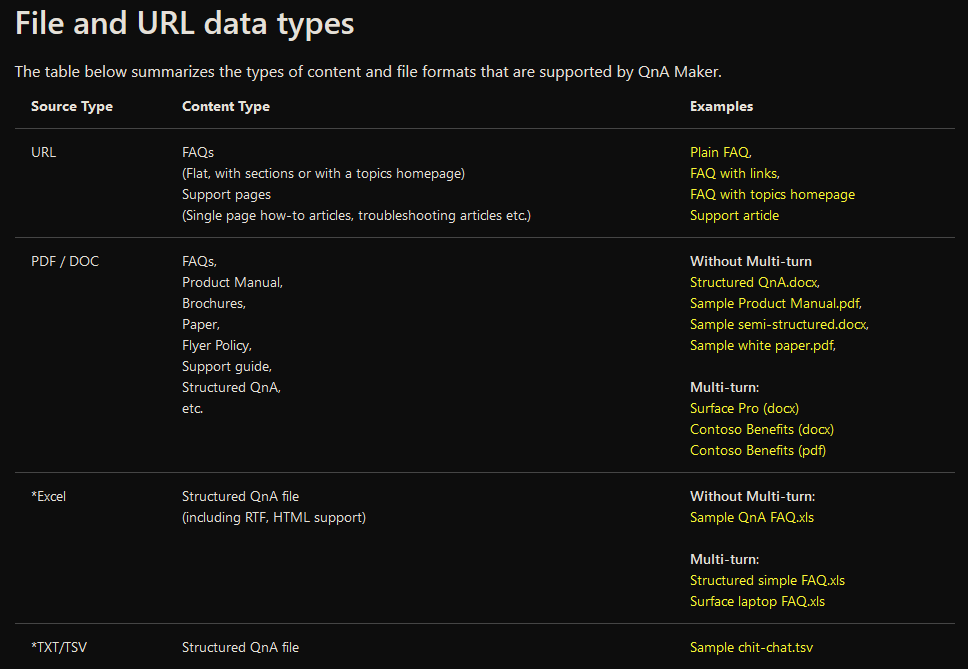

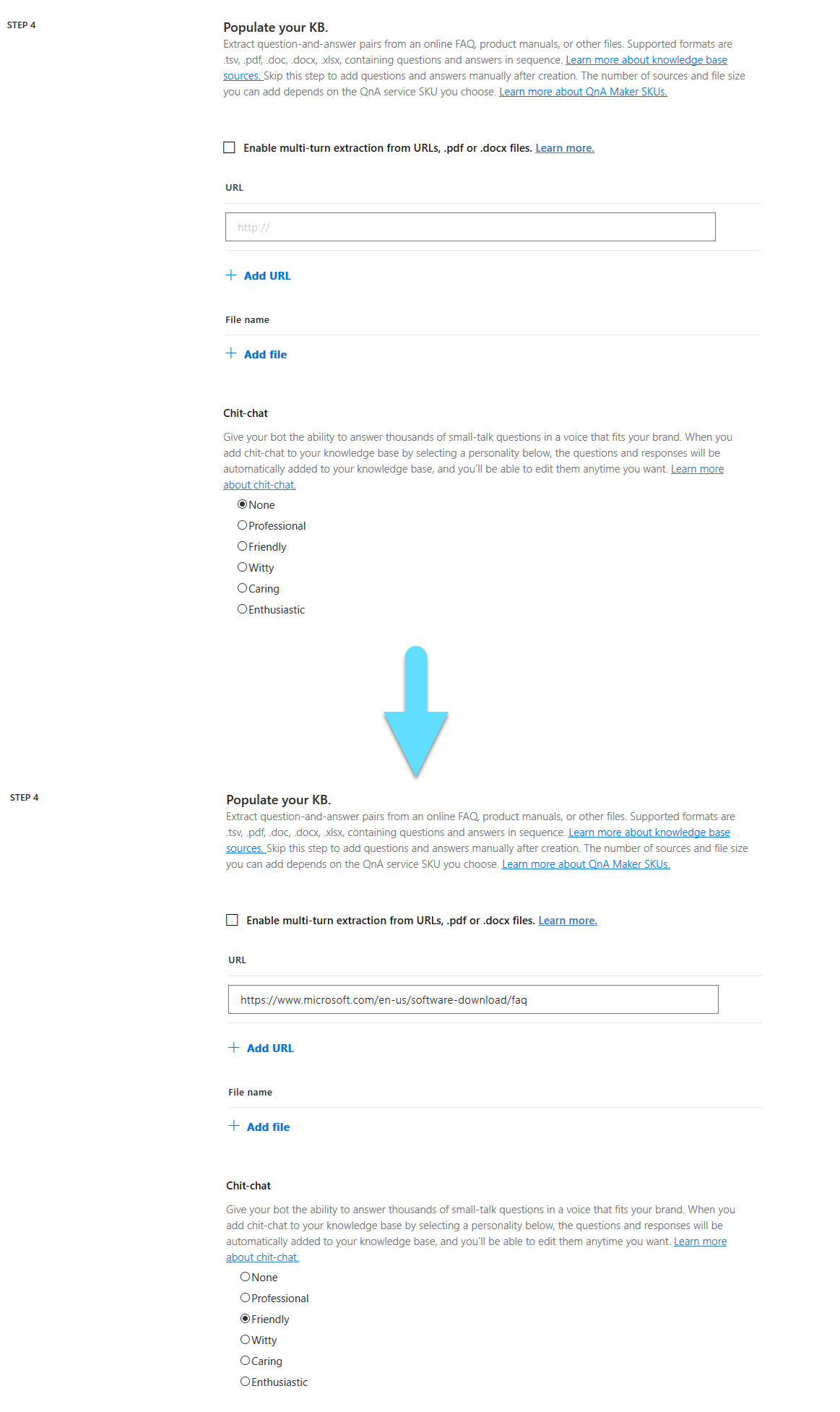

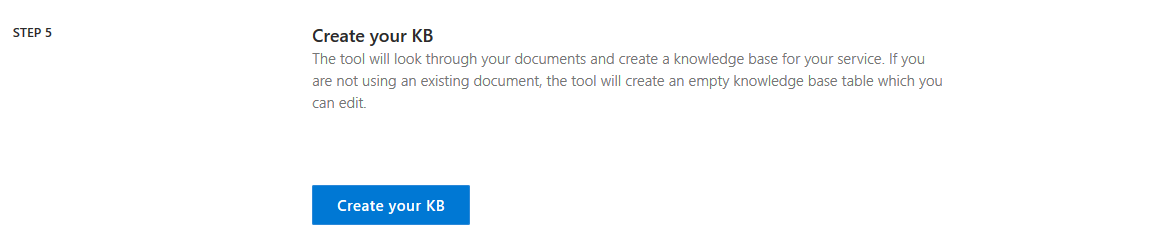

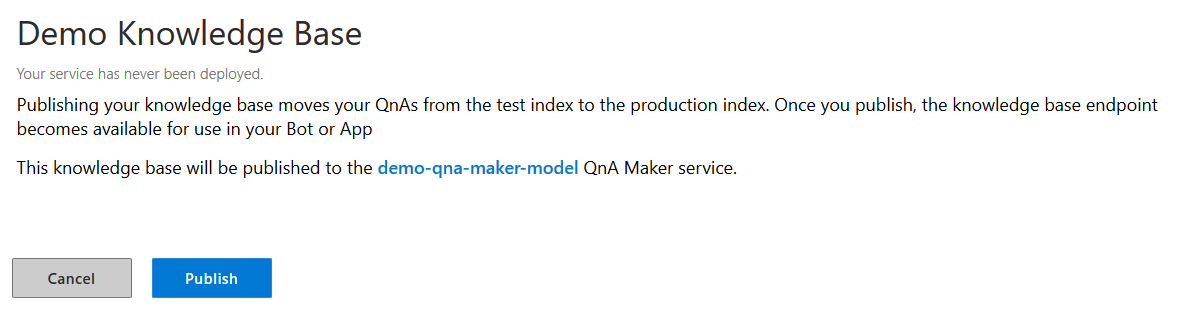

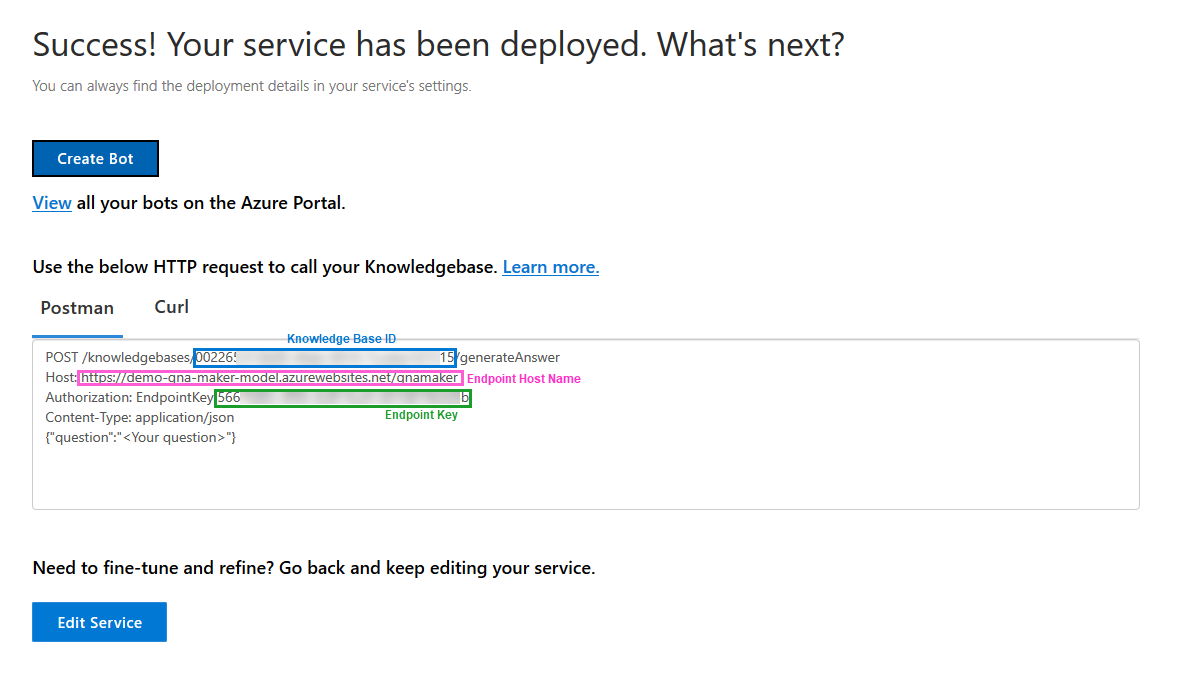

Now you will be asked to name your knowledge base and provide a URL containing all of the answered questions. We will use the same URL we used last time. Then click Create.

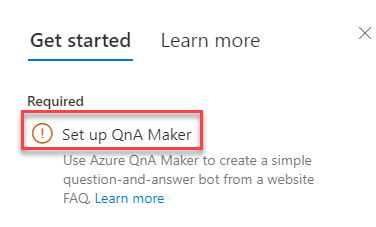

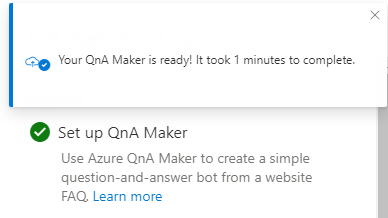

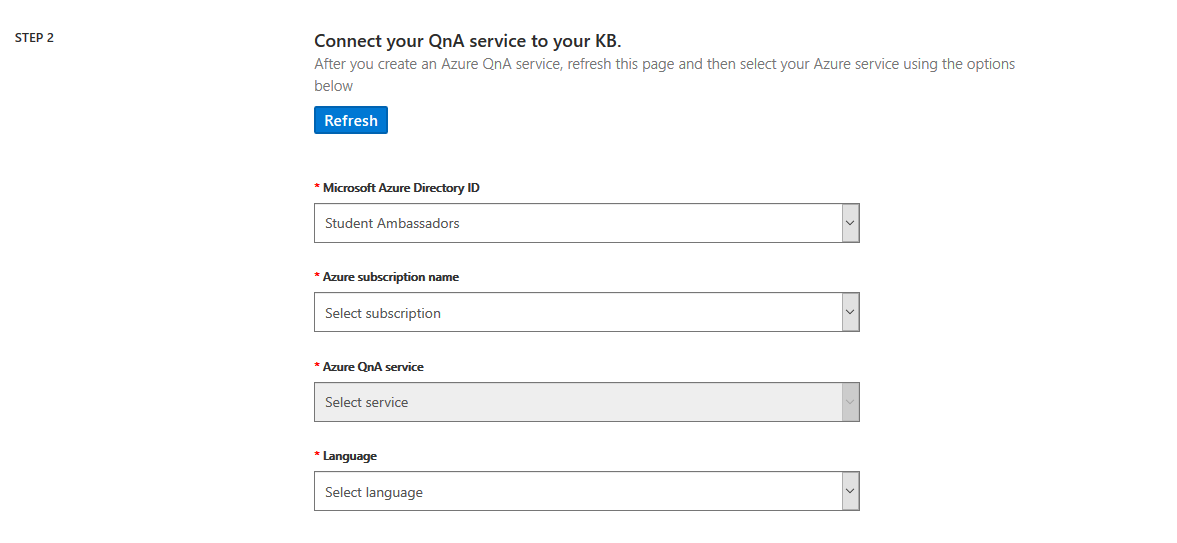

Once your bot is created, you will notice a notification requiring you to Set up QnA Maker. You can click the title of the notification as it is a link.

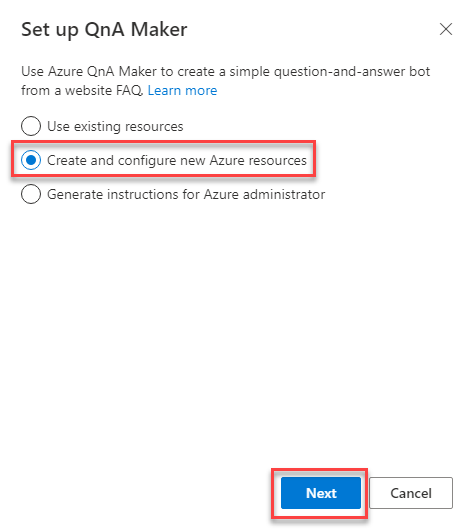

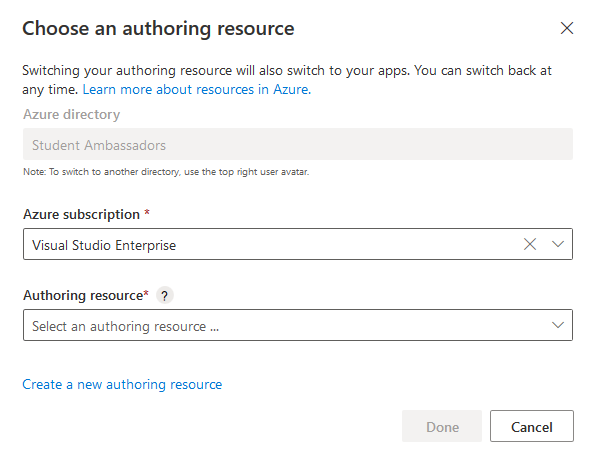

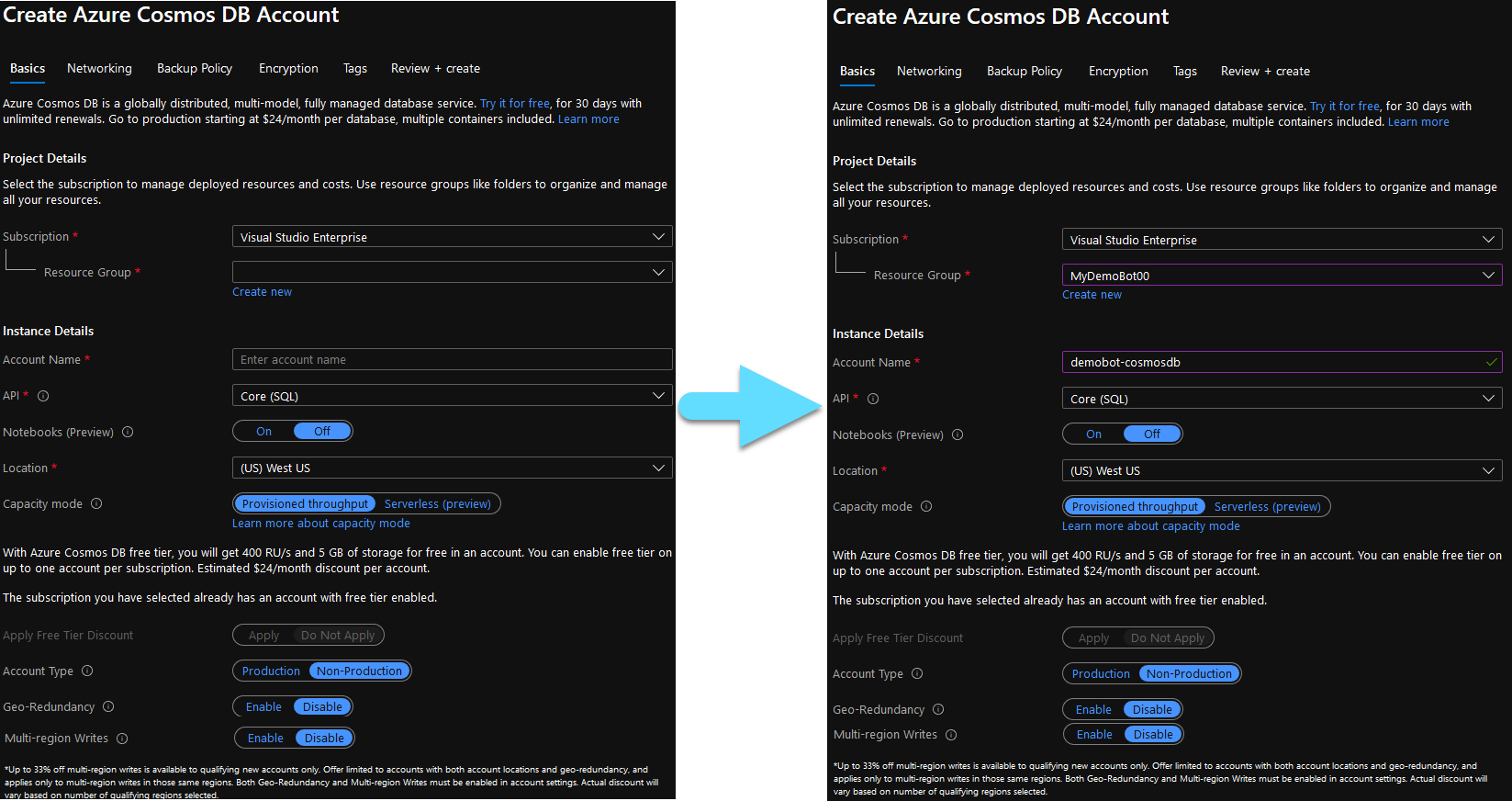

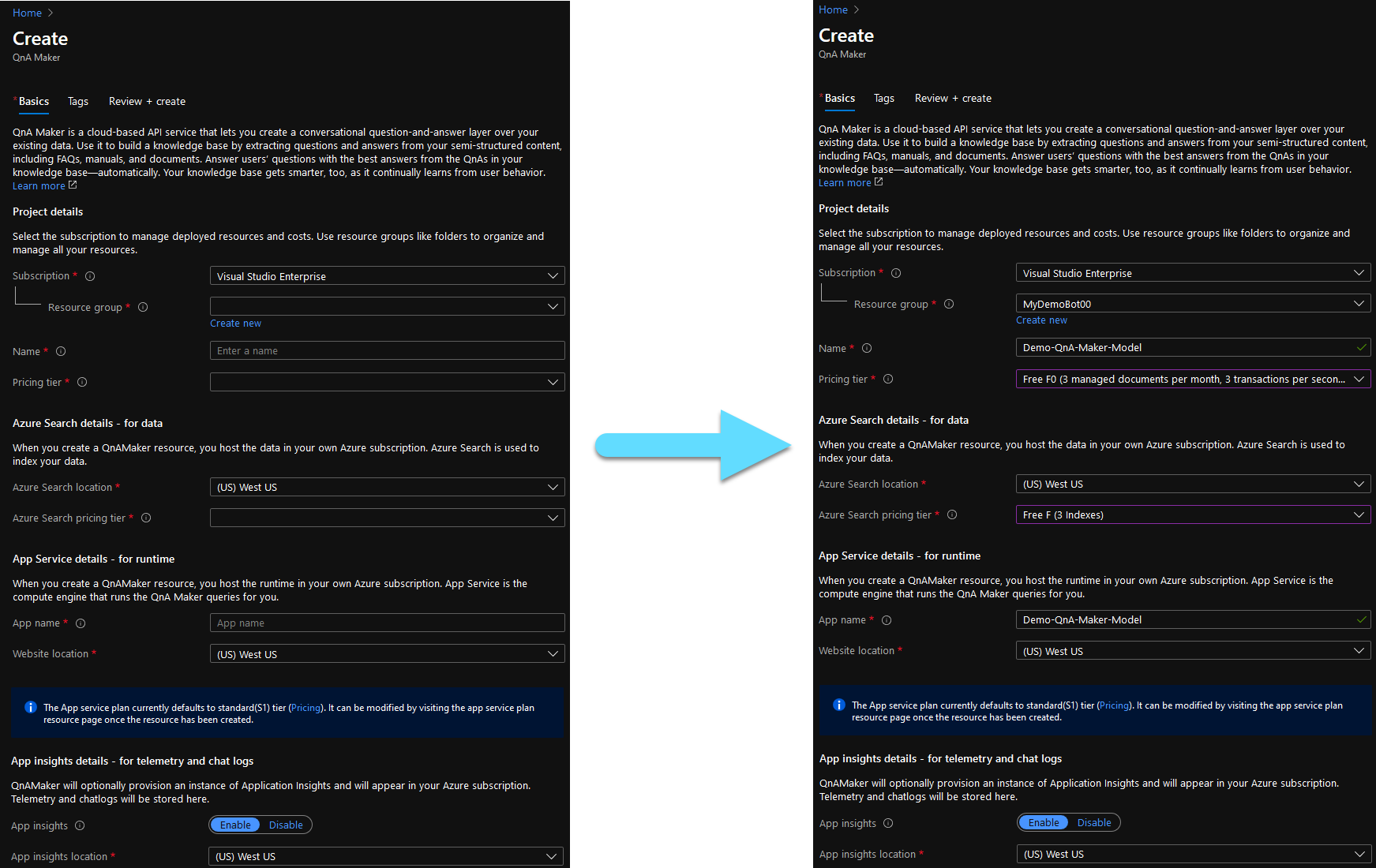

After clicking the notification, you will be prompted to Set up the QnA Maker. Select the Create and configure new Azure resources option, if you have not created any resources. Then click Next.

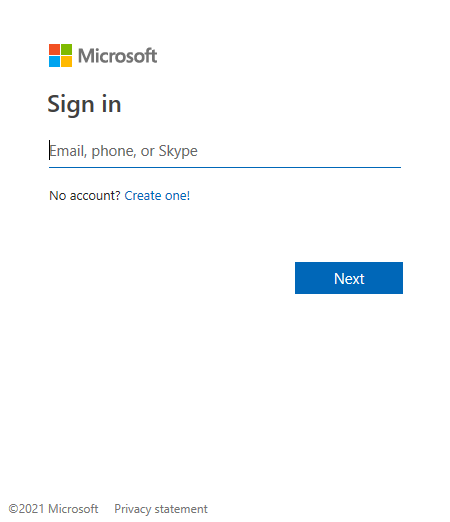

You will now need to Sign in to your Azure account.

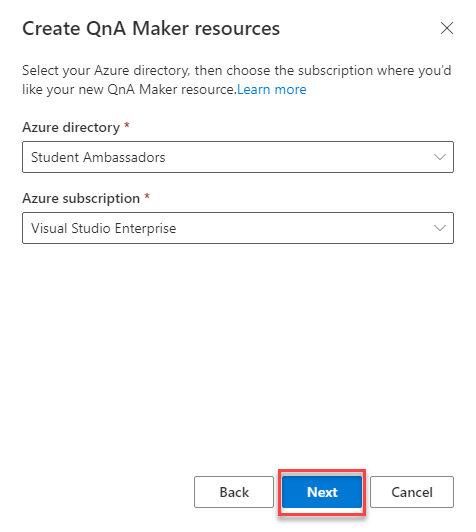

Enter the directory and subscription for the new resources and click Next.

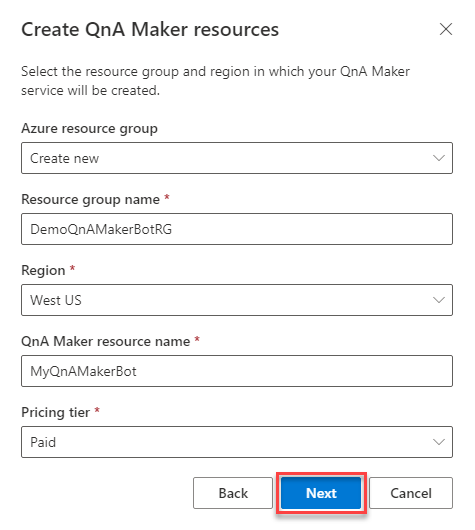

You now have the ability to create a new resource group for your new resources and choose their Pricing tier. Fill in the details and click Next.

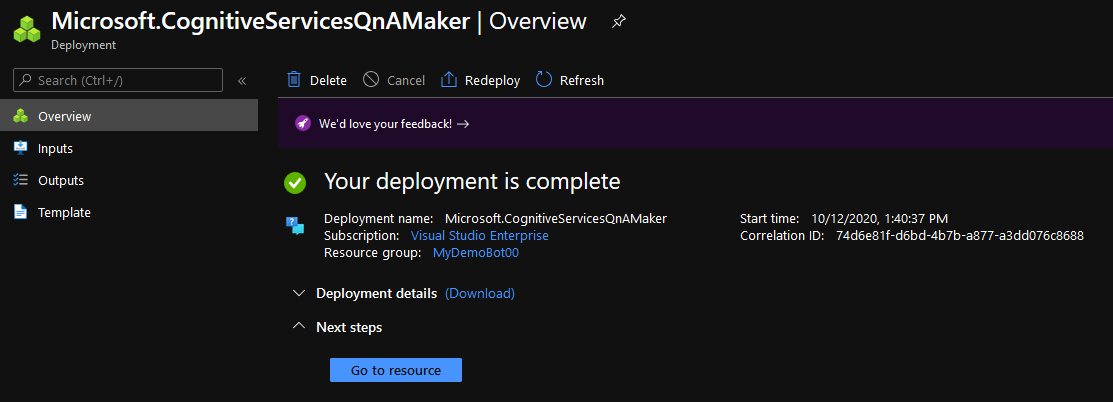

Once the process is complete you will receive a notification. Now your bot is fully working!

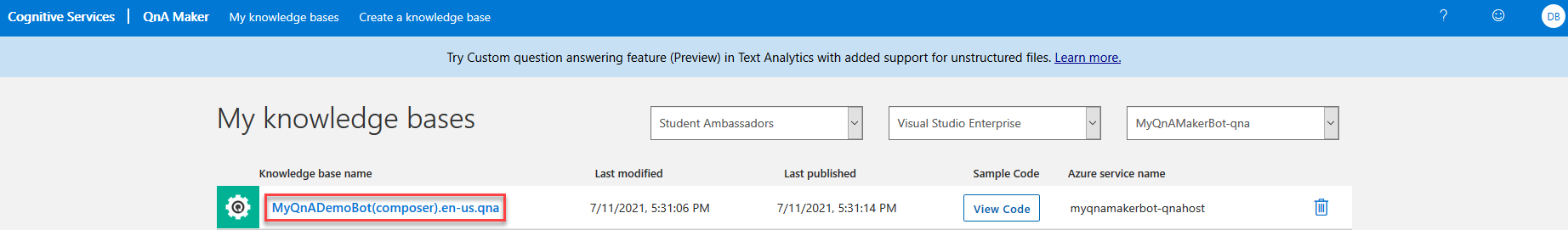

The new resources are available in your Azure account. If you would like to have a look at your knowledge base and tinker with it, you can find everything about it in the QnA Maker portal.

Test

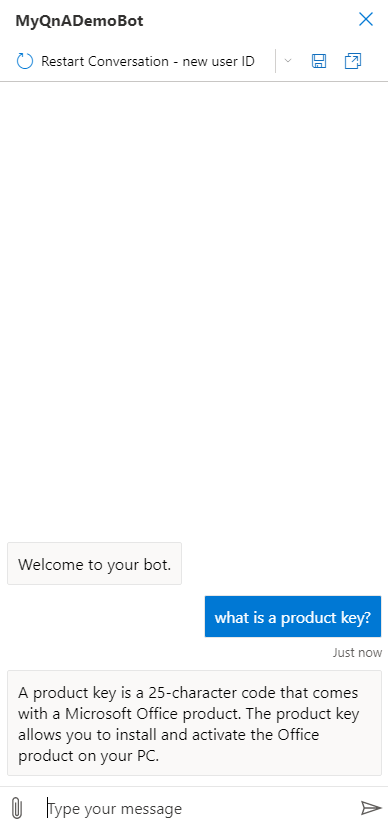

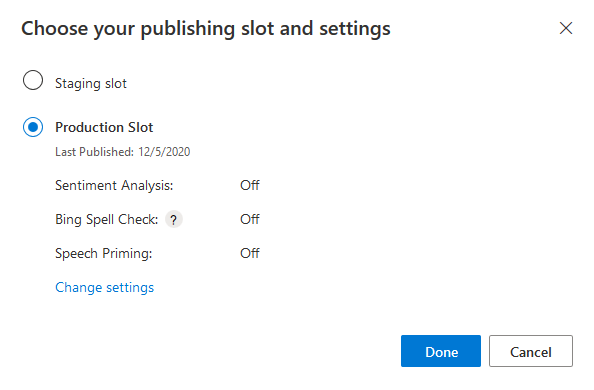

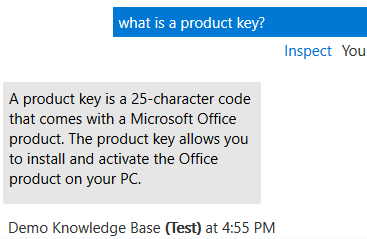

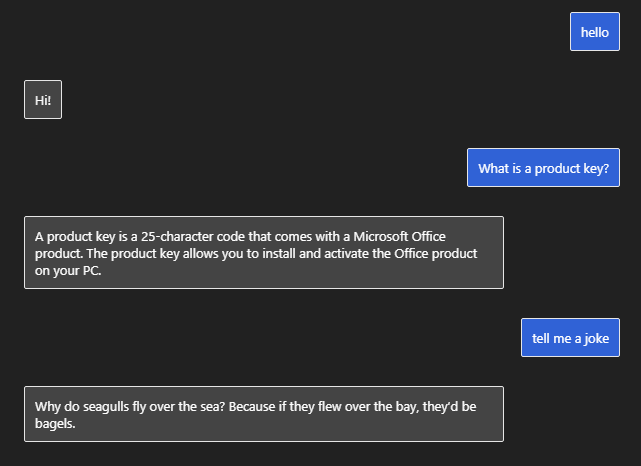

To test the capabilities of your bot, click the Start bot button in the Bot Framework Composer, and open your bot in the Web Chat.

Ask your bot a question that is covered by the site we added to our knowledge base and observe how well it responds. With the current implementation, it seems to be working pretty well!

And this is an easier way of connecting your bot with a knowledge base without the need for any code!

Integrate LUIS into your Azure Bot

July 02, 2021

LUIS (Language Understanding) is a cloud-based conversational AI service that applies custom machine-learning intelligence to a user’s conversational, natural language text to predict overall meaning, and pull out relevant, detailed information. Learn more about it here.

A client application for LUIS is any conversational application that communicates with a user in natural language to complete a task. It becomes especially useful when creating a chatbot, as it gives the bot the ability to communicate using human language. LUIS and the Azure Bot exchange data as JSON.

Create

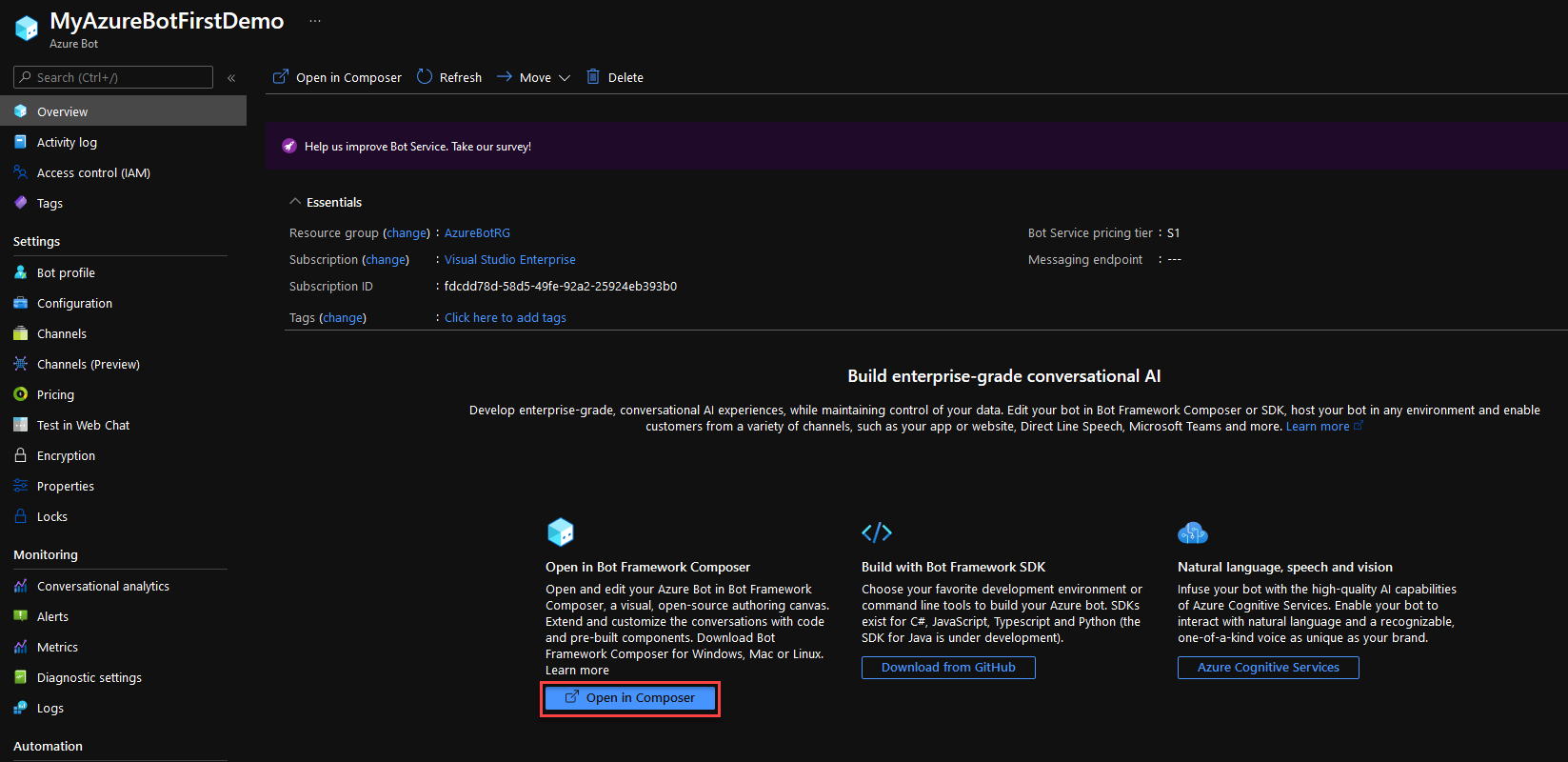

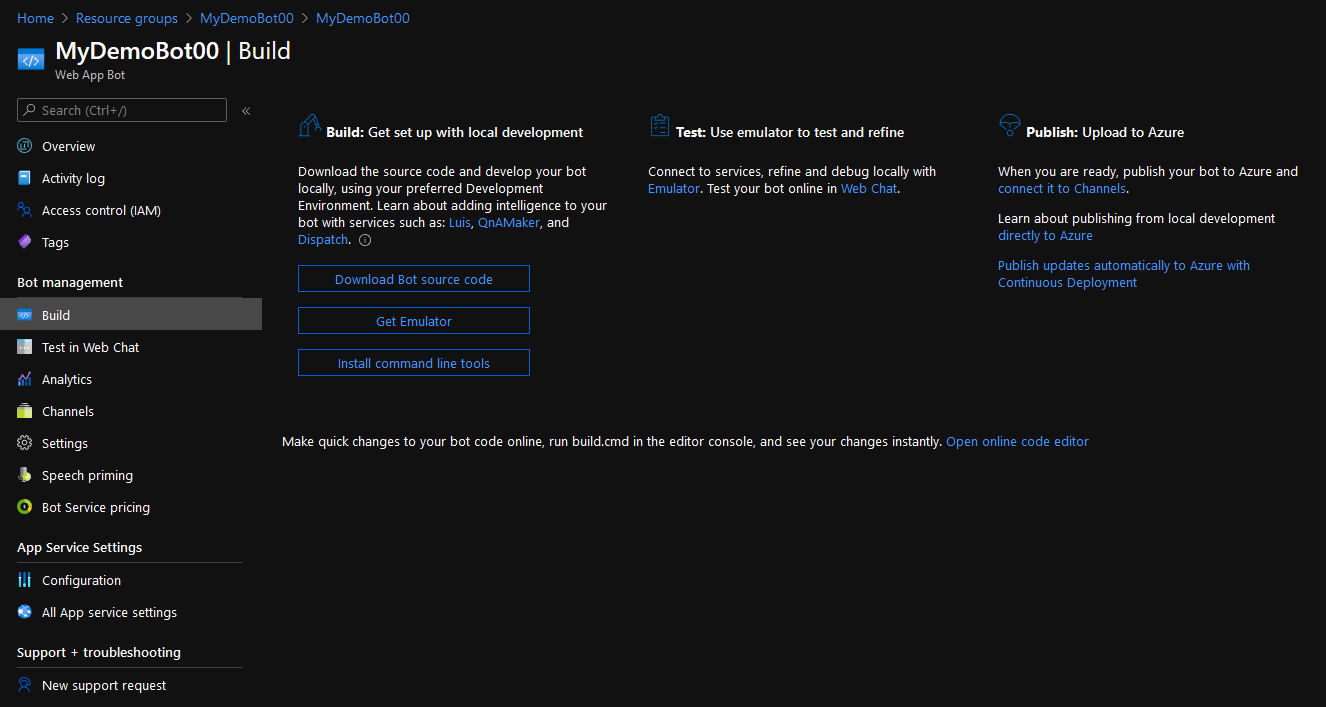

Go to the Azure Portal and create an Azure Bot. You can learn how to do it in this post. Open your newly created bot using the Bot Framework Composer.

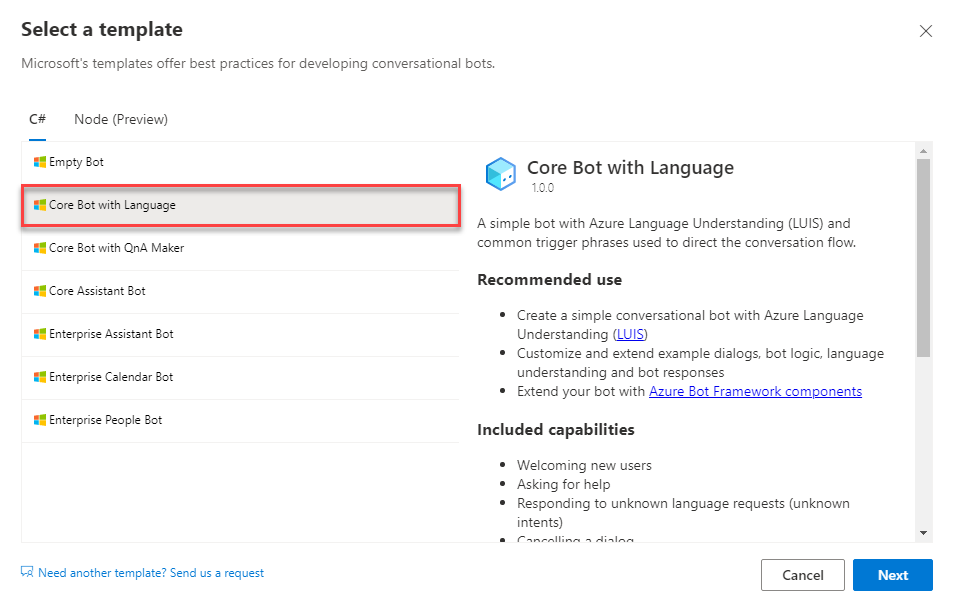

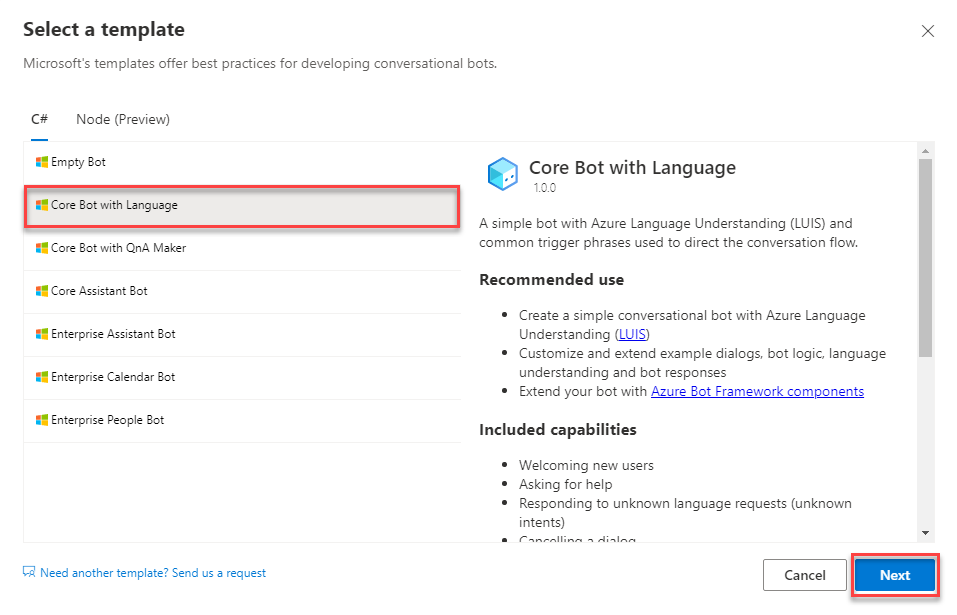

In the Bot Framework Composer select the Core Bot with Language template and click Next. This will allow you to create a sample bot with LUIS capabilities.

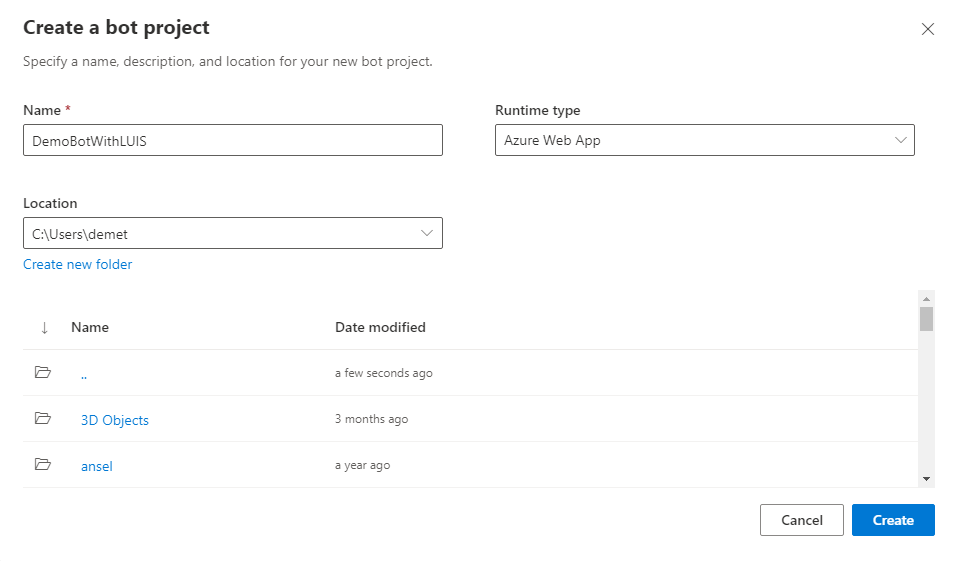

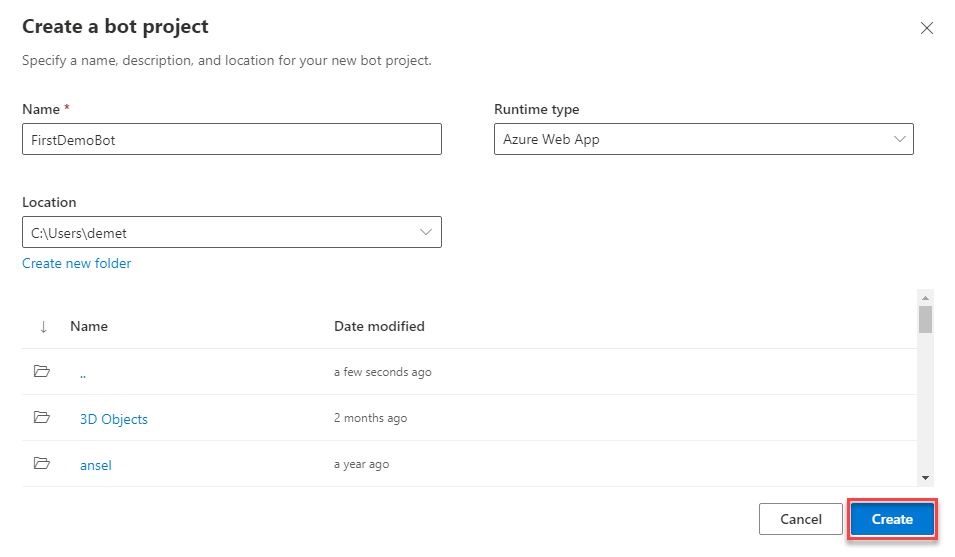

Name your bot accordingly and then click Create.

You might be asked to log in using your Microsoft account.

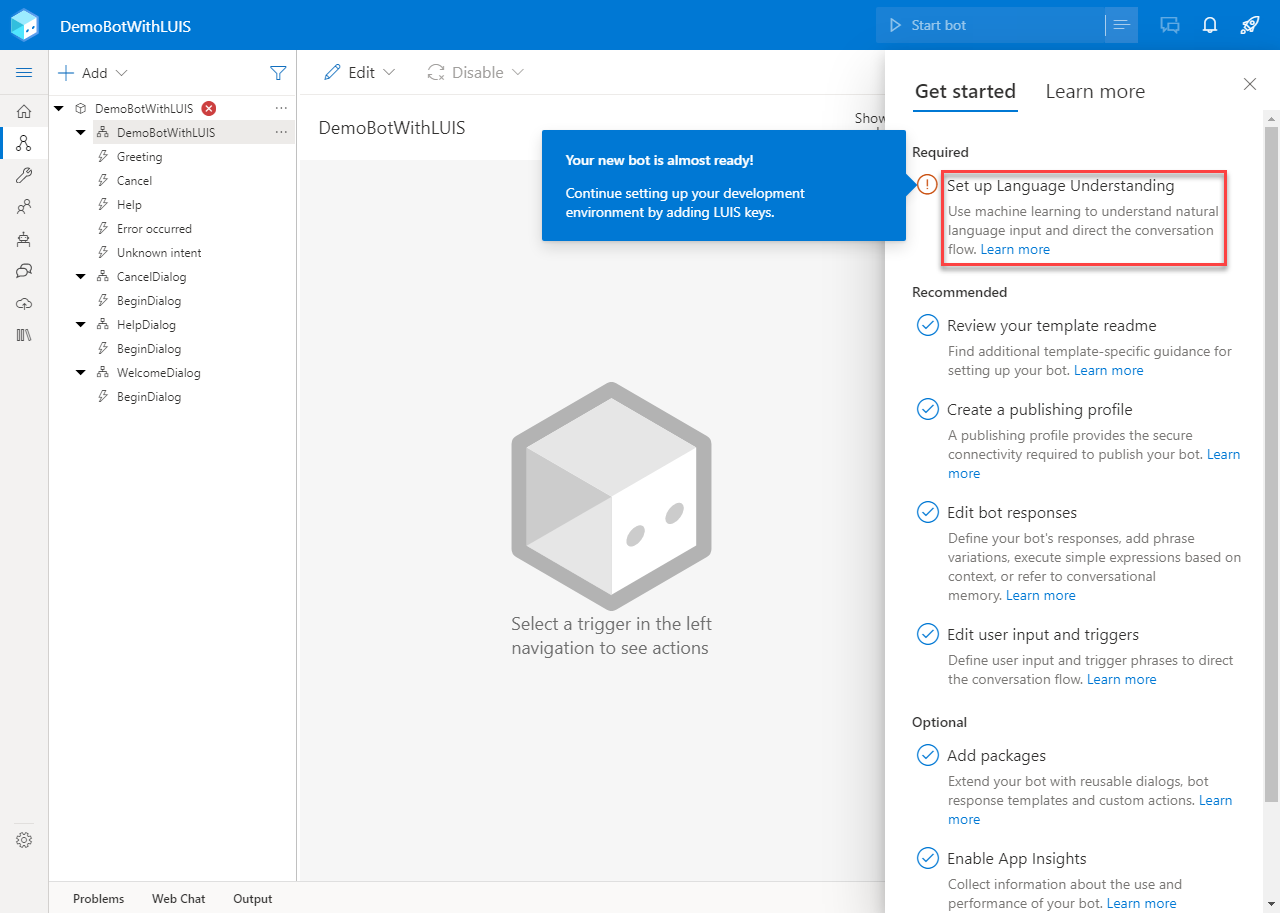

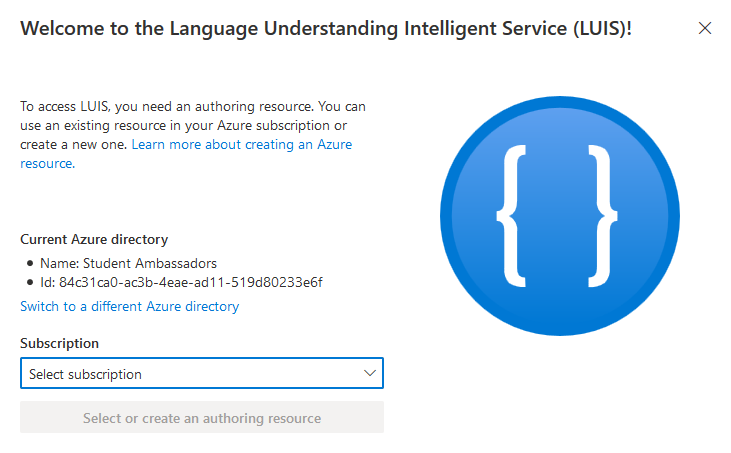

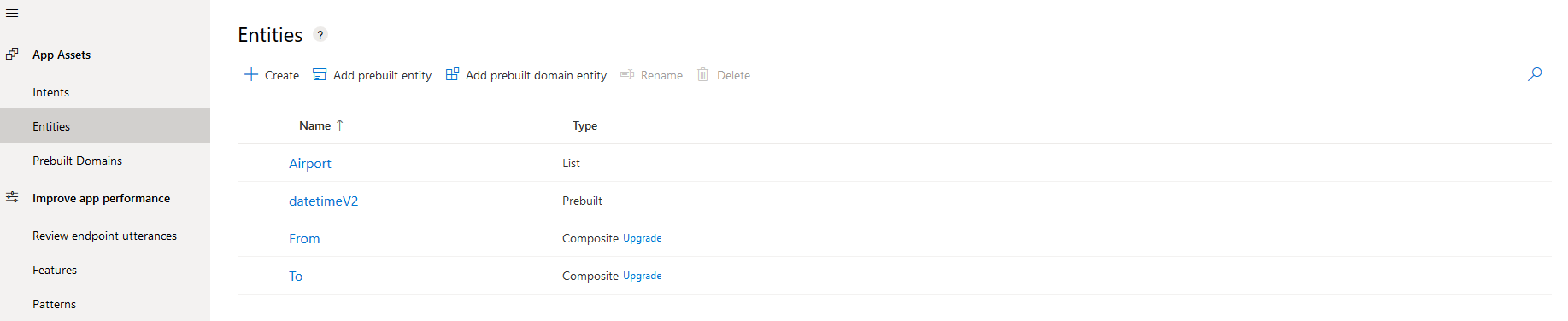

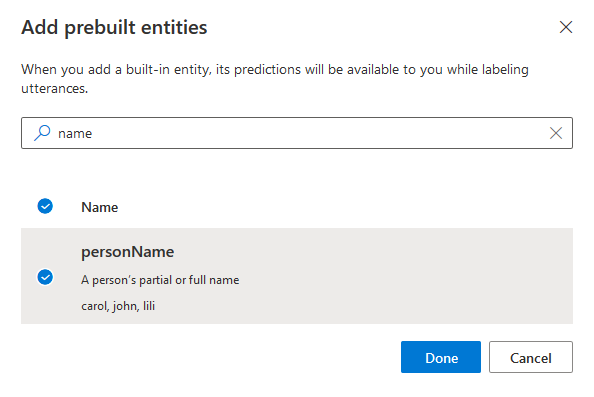

Once your bot is created, you will see an error pop up. Select the Set up Language Understanding requirement to proceed with LUIS.

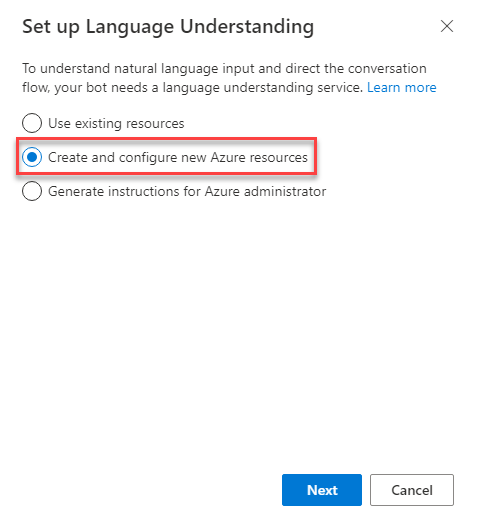

Select the Create and configure new Azure resources option to create a new LUIS resource. Then click Next.

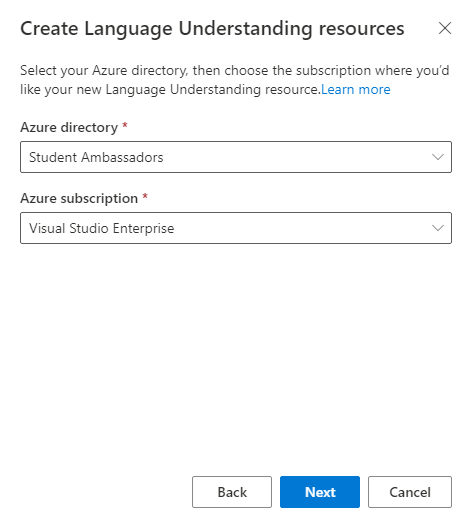

You might need to log in to your Microsoft account again. Then select your Azure directory and subscription. Click Next to continue.

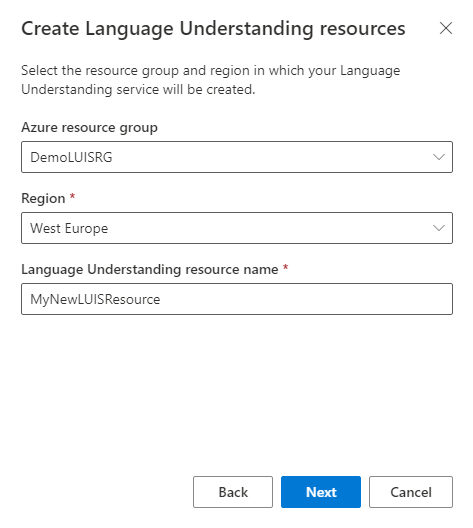

Pick a name for your LUIS resource, the resource group you want to include it in and your preferred region. After that, click Next to proceed.

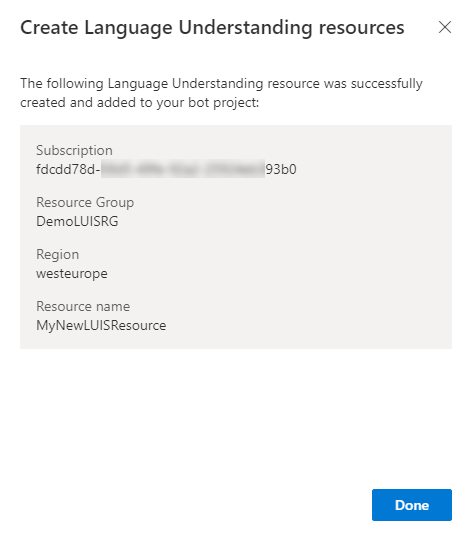

By the time you click Done, your LUIS resource has already been created!

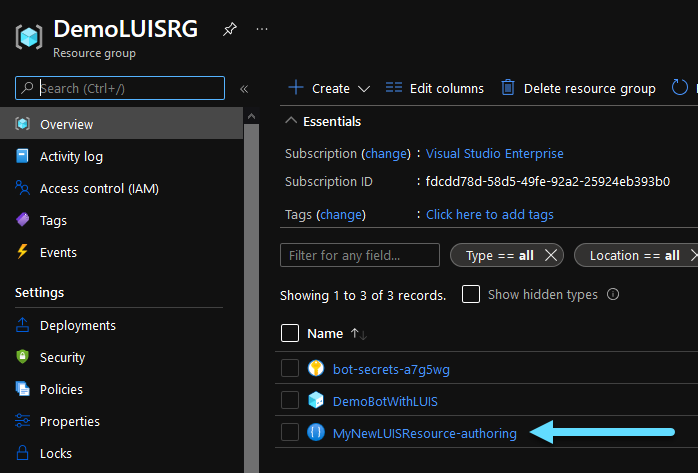

If you take a look in your resource group you will now find a new LUIS resource available.

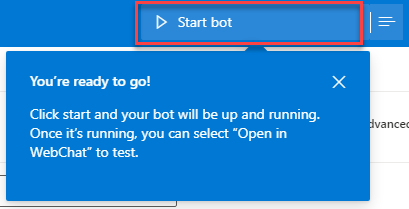

Click Start bot in the Composer to run your bot.

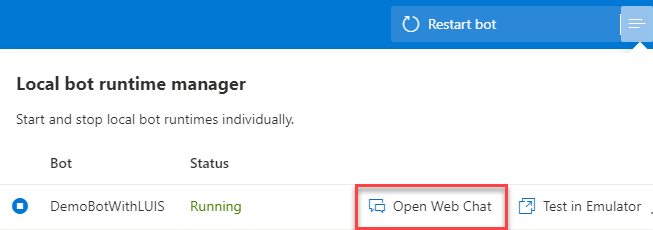

Once your bot is up and running, you can test it using the emulator or the Web Chat. We will use the Web Chat for now, so you do not need to download the emulator. However, for larger projects, downloading the emulator is advised.

Add an Intent

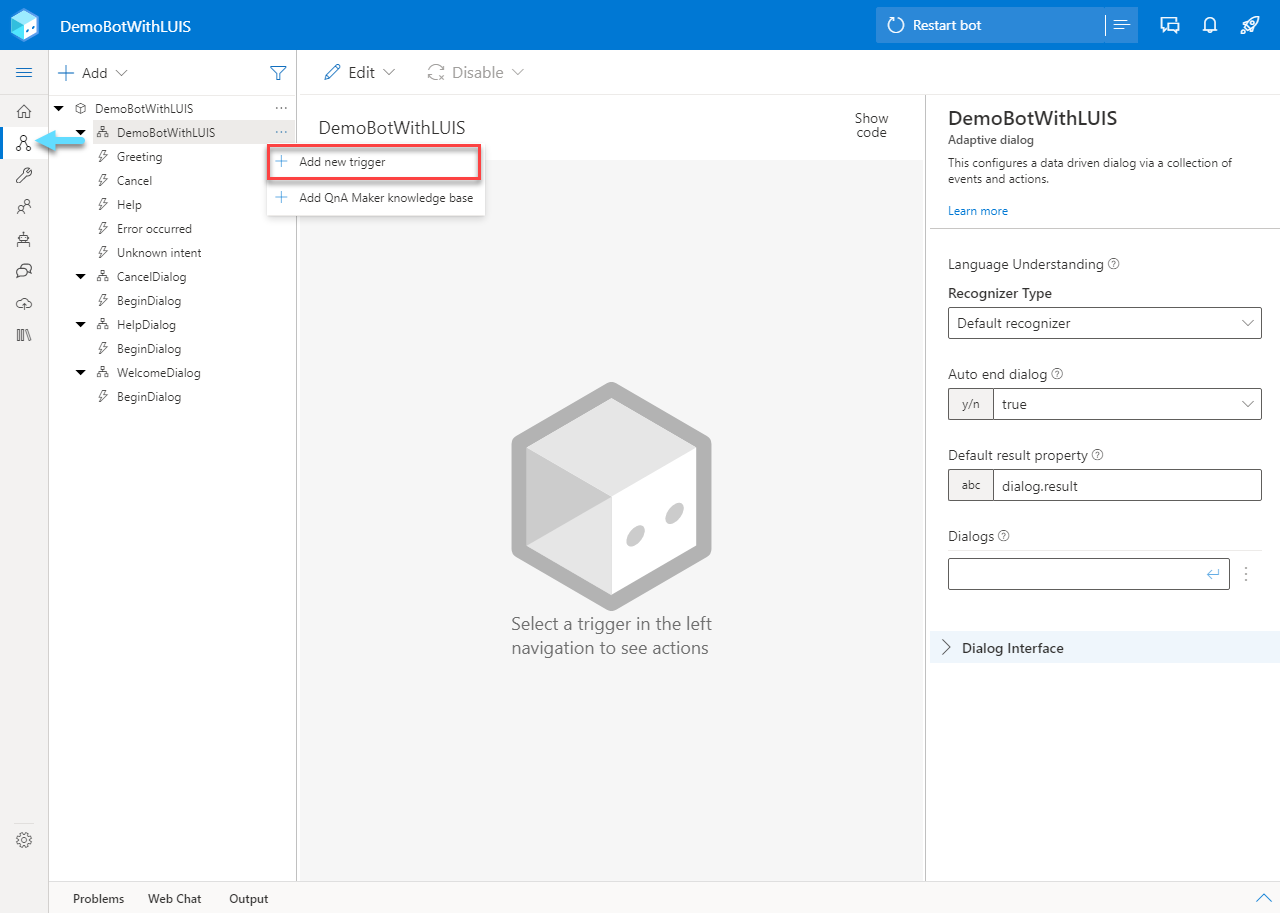

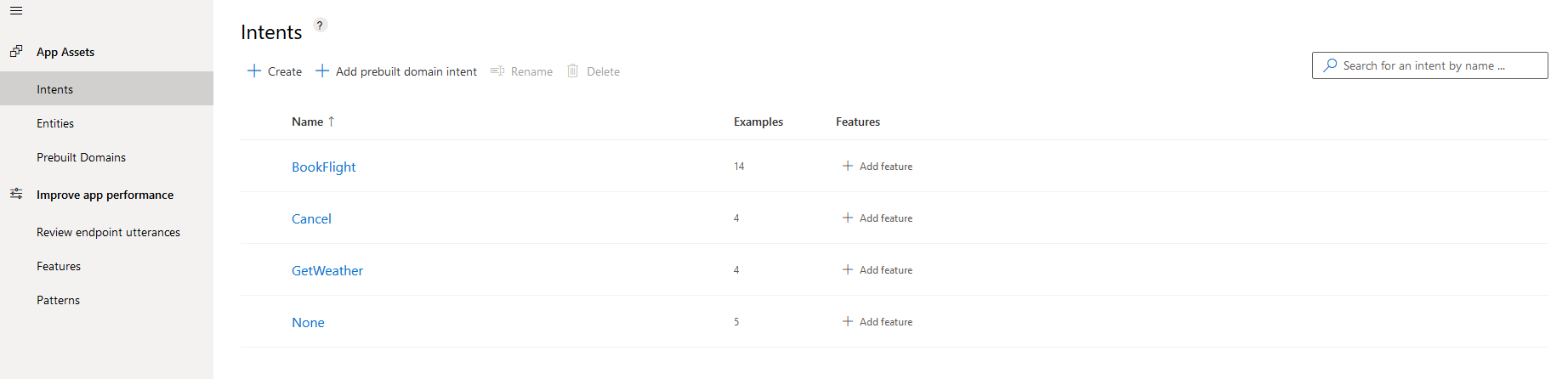

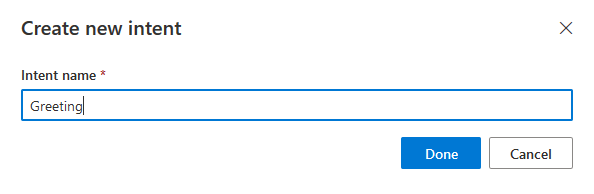

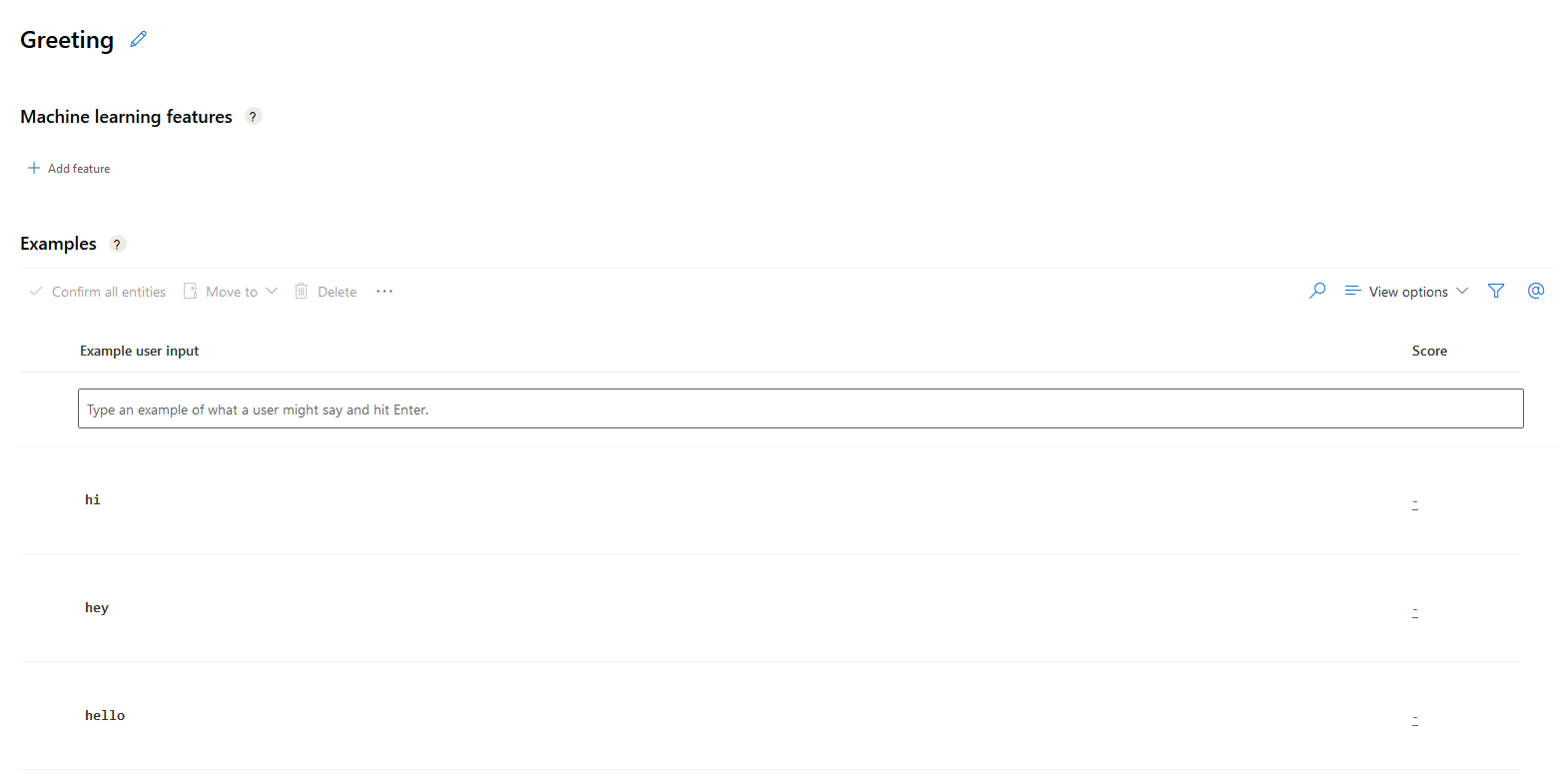

Once you are sure that your bot works as expected, you can add new intents to LUIS. If you want to learn more about intents and how LUIS works, you can read about it in this post. To add an intent, navigate to the Create tab of the composer, click the three dots next to your bot’s name and select Add New Trigger.

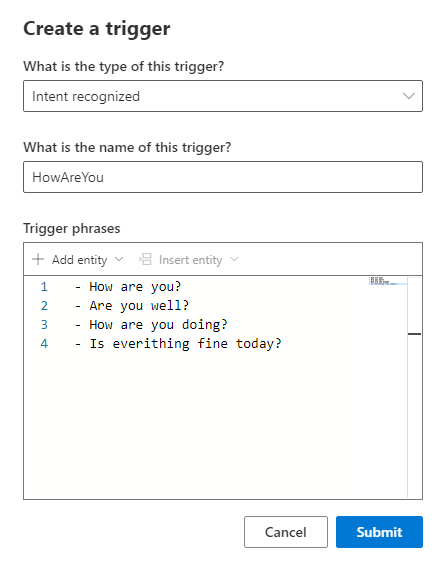

Leave the first field as Intent recognized. The second field is the name of your intent (or trigger). The Trigger phrases field should contain the utterances that correspond to your intent. It is very important to start each utterance with “- ” on a new line.

For this example we will create a How Are You intent and the utterances we will use are presented below.

- How are you?

- Are you well?

- How are you doing?

- Is everything fine today?

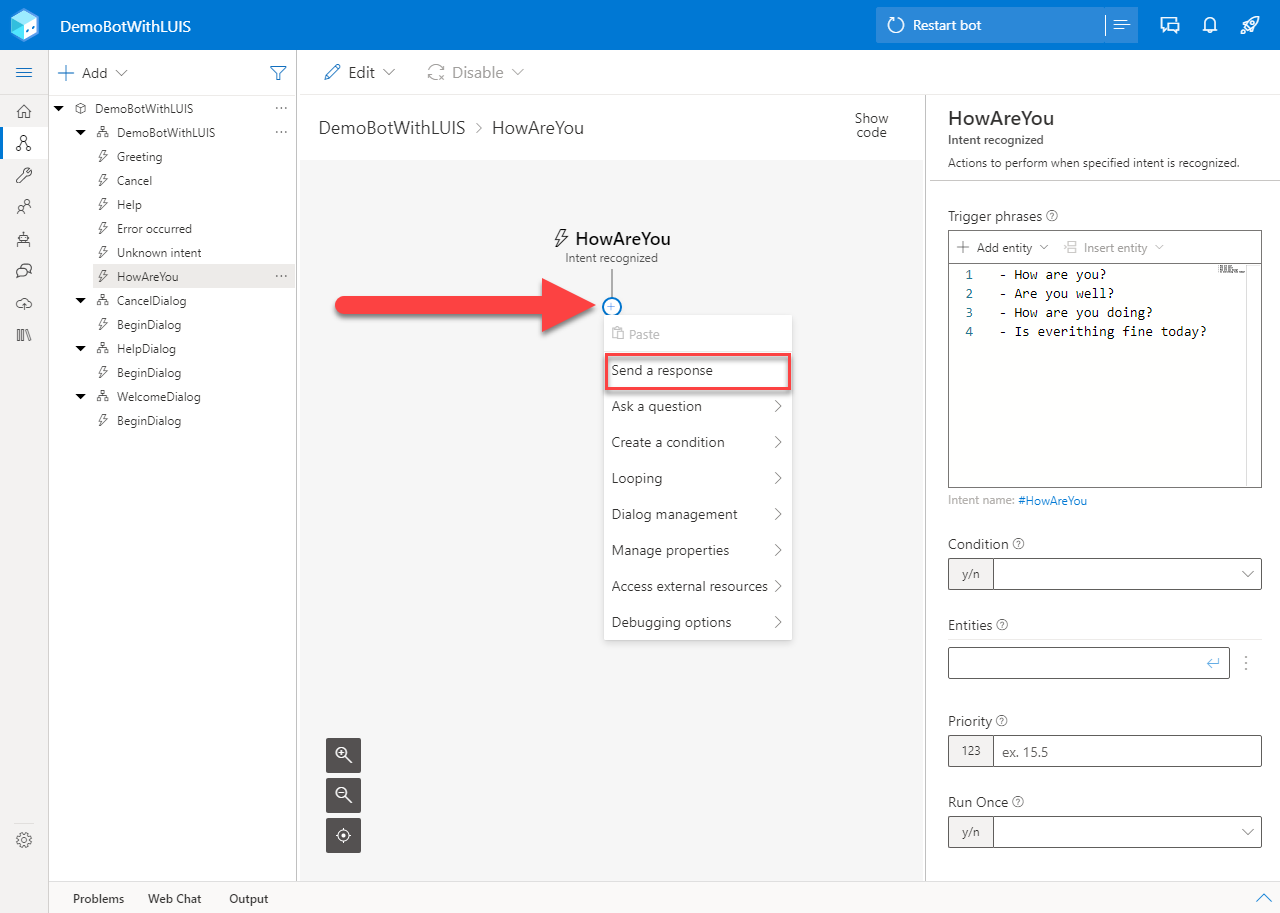

Now you can see the newly created trigger, which corresponds to one LUIS intent. Click the “+” icon and then Send a response to customize the message that will appear after this intent is detected.

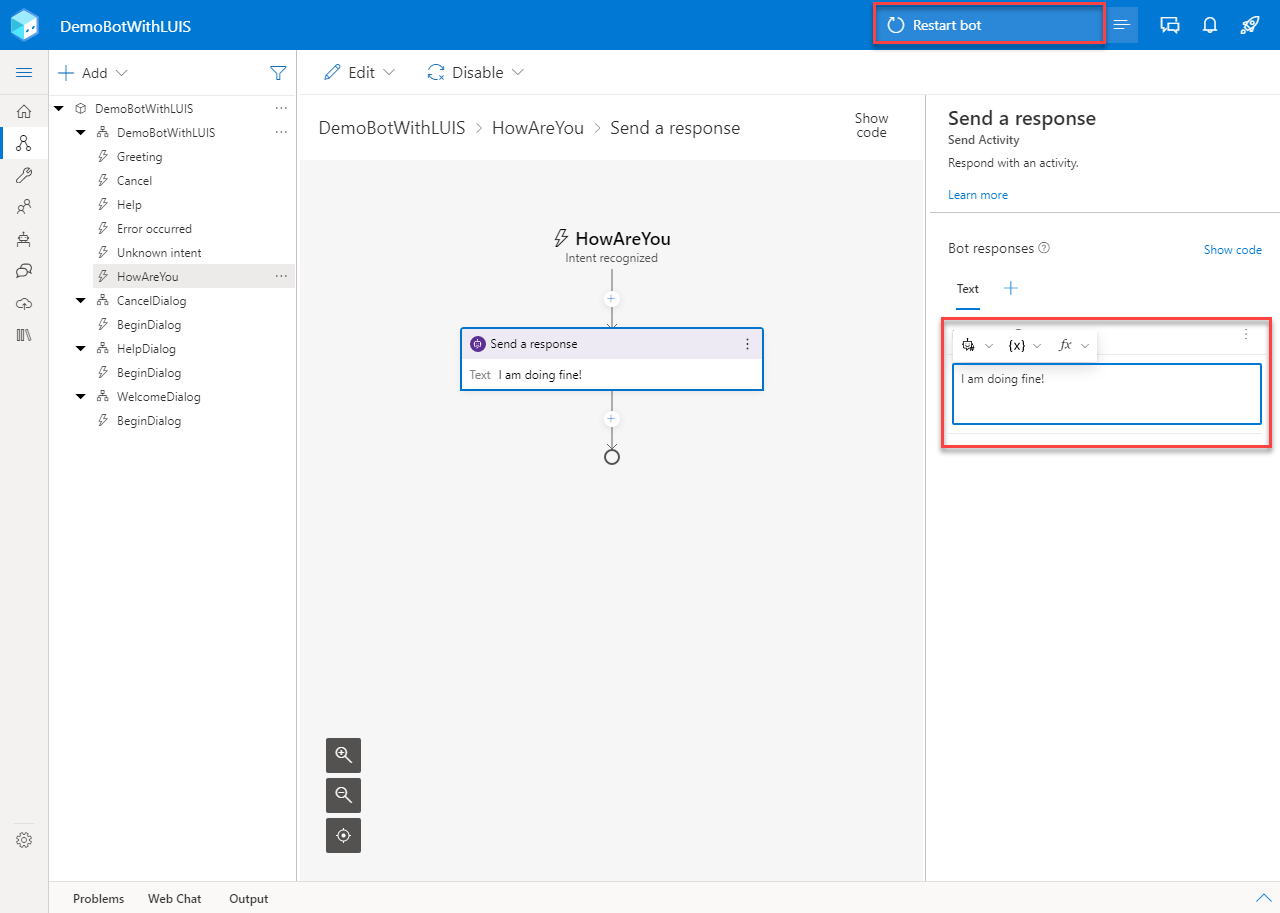

Write your bot’s response in the Text field and click Restart bot to run your bot.

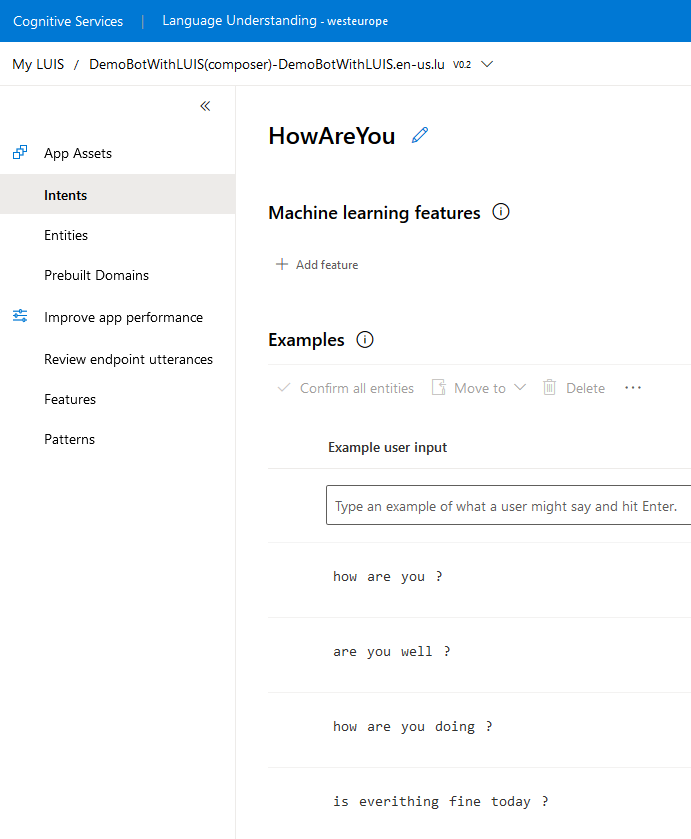

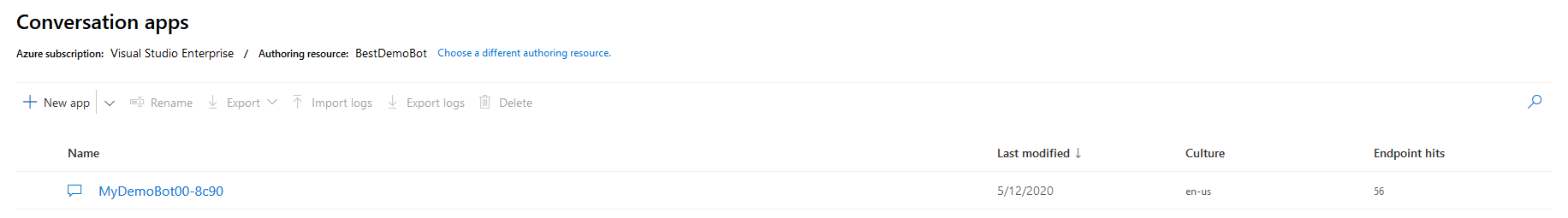

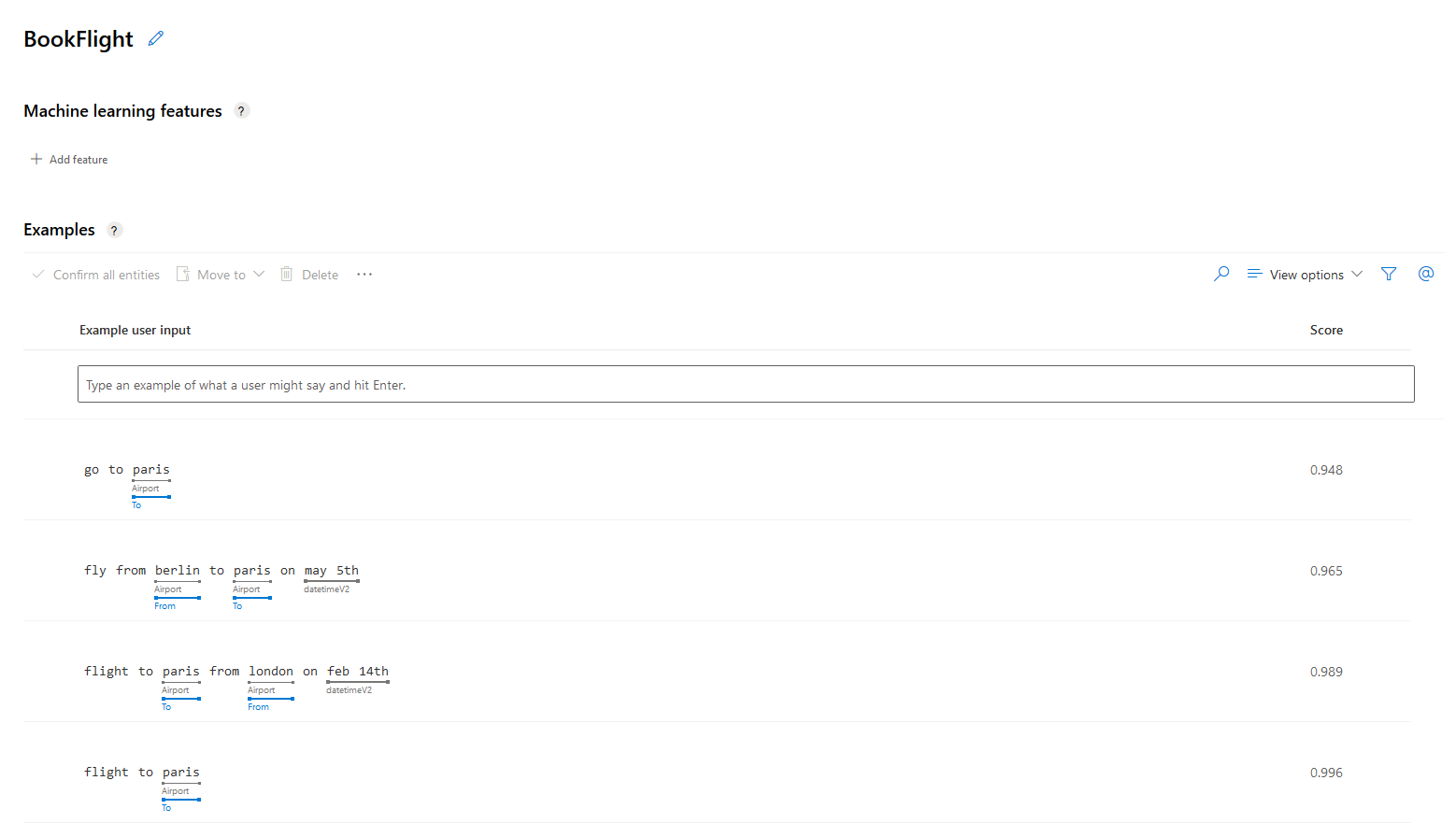

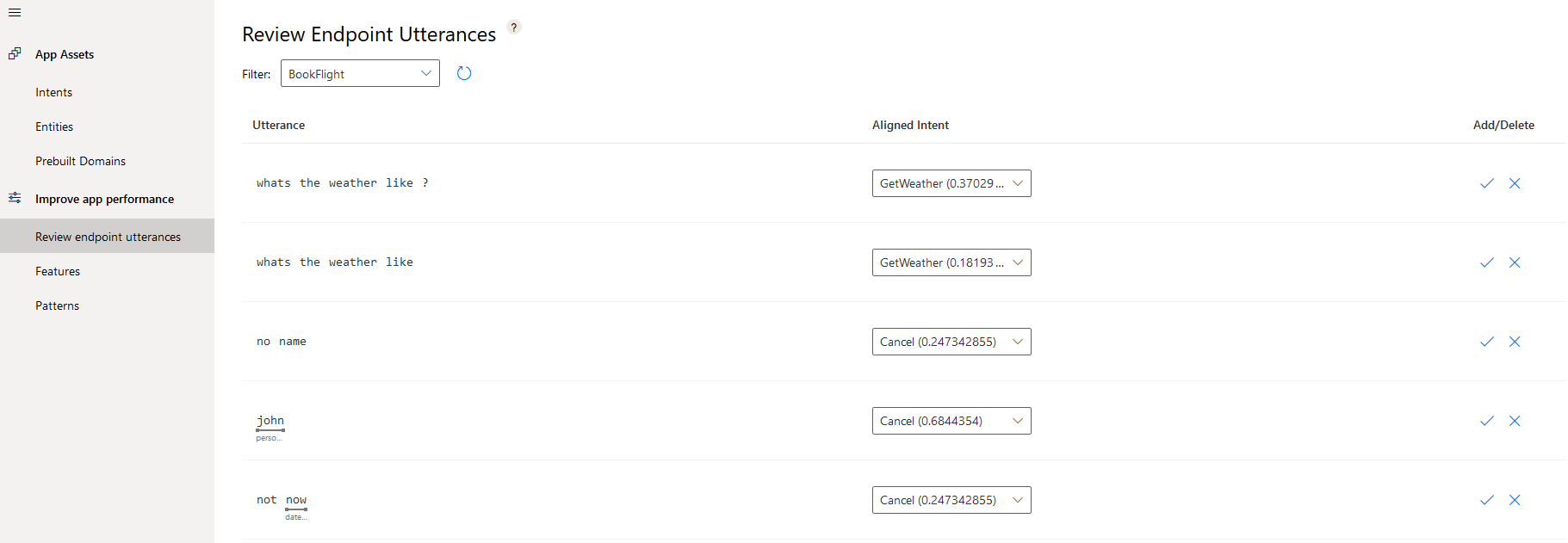

If you take a look at your new LUIS resource at https://www.luis.ai/, you will find the new intent you just created along with all the utterances synced automatically.

Test

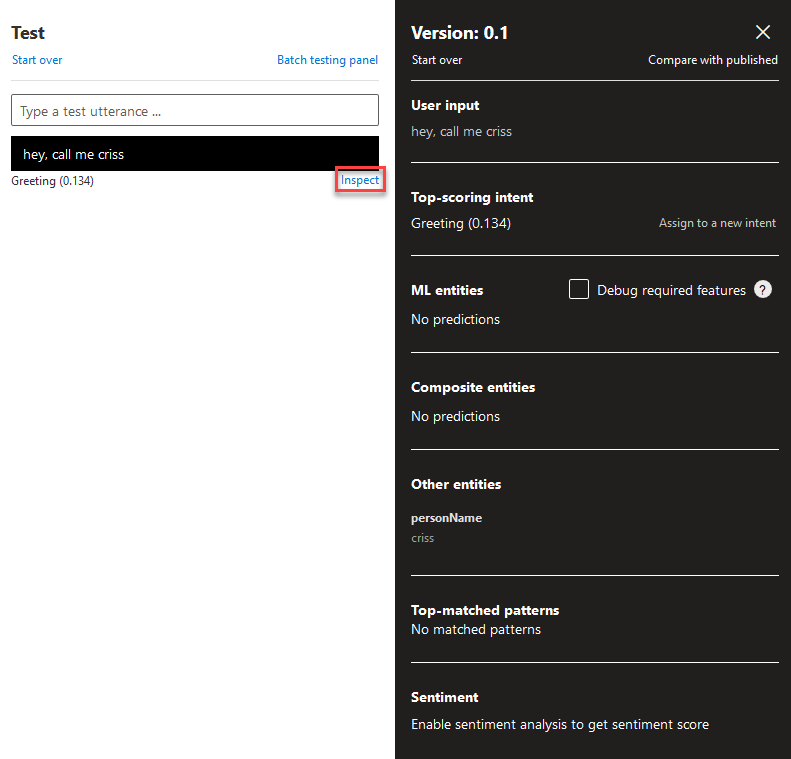

Back in the bot, while it is running, open the Web Chat and try your new intent. The bot should respond with your custom text.

And this is how to integrate LUIS into an Azure Bot!

First look at Azure Bot resource

June 22, 2021

Azure Bot Service is a comprehensive development environment for designing and building enterprise-grade conversational AI.

Preface

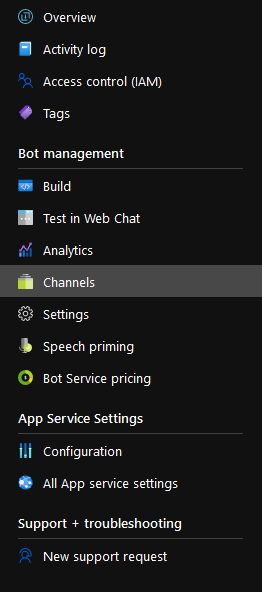

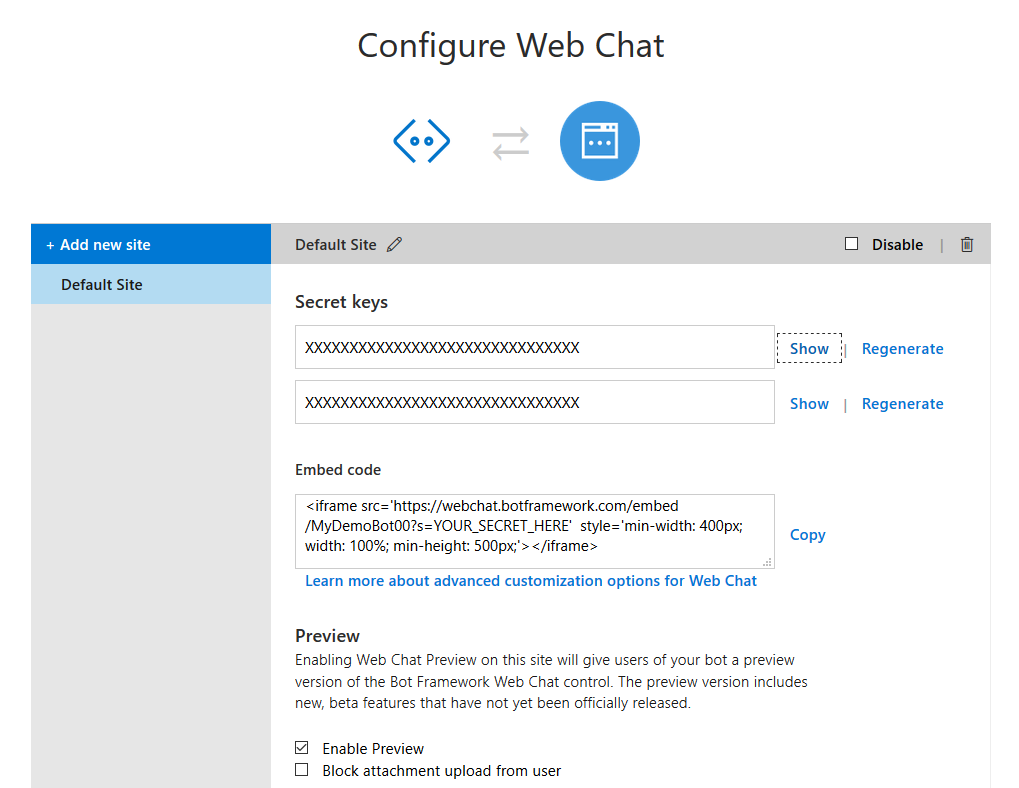

You might have seen this notification when trying to create your own Web App Bots. It prompts you to create your bots using the Azure Bot resource, as the old and trusty Web App Bot is gradually being deprecated. So, let’s dive in and see what’s new!

Create

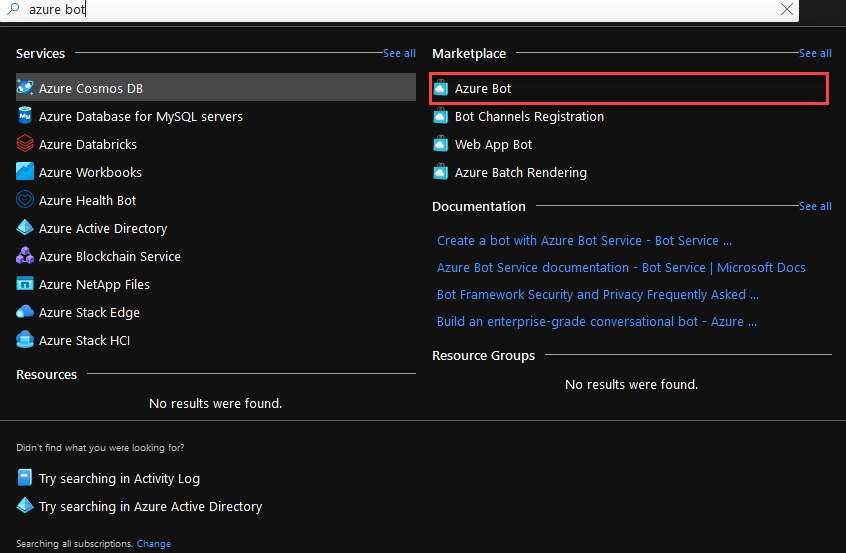

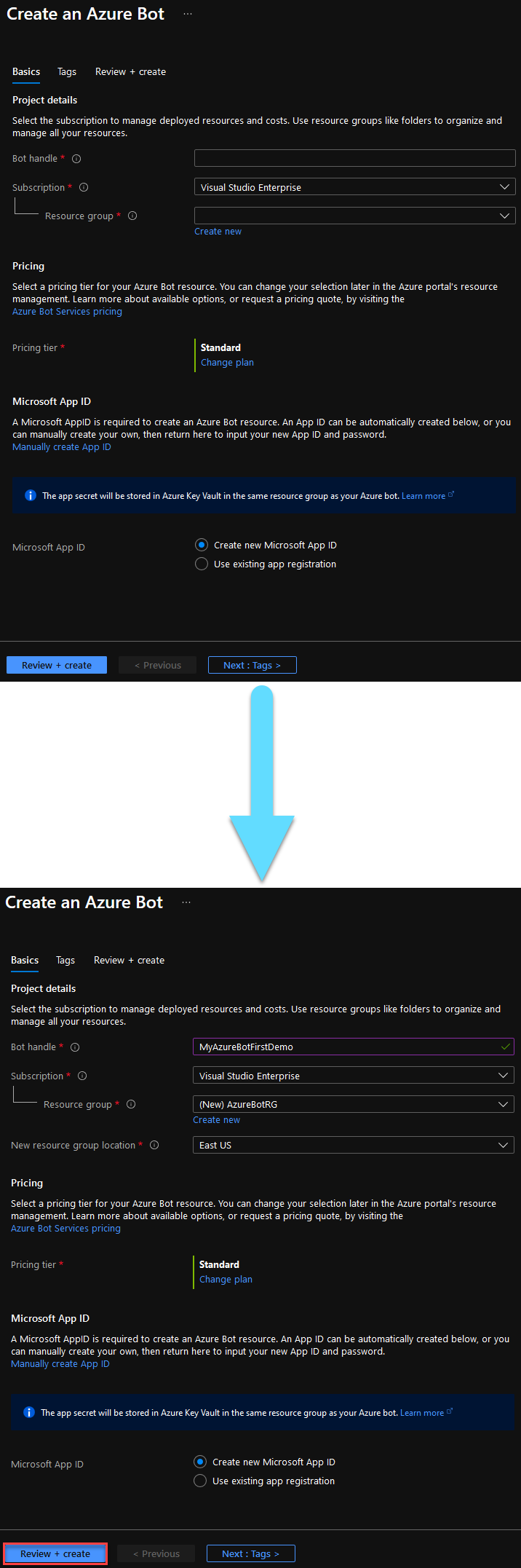

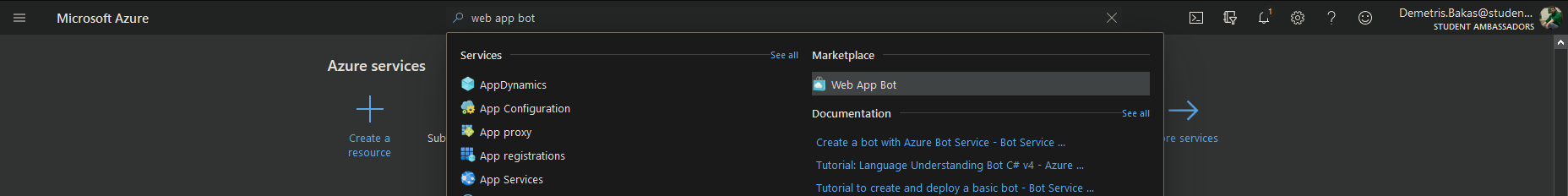

Search for the Azure Bot resource. You can easily find it in the Marketplace.

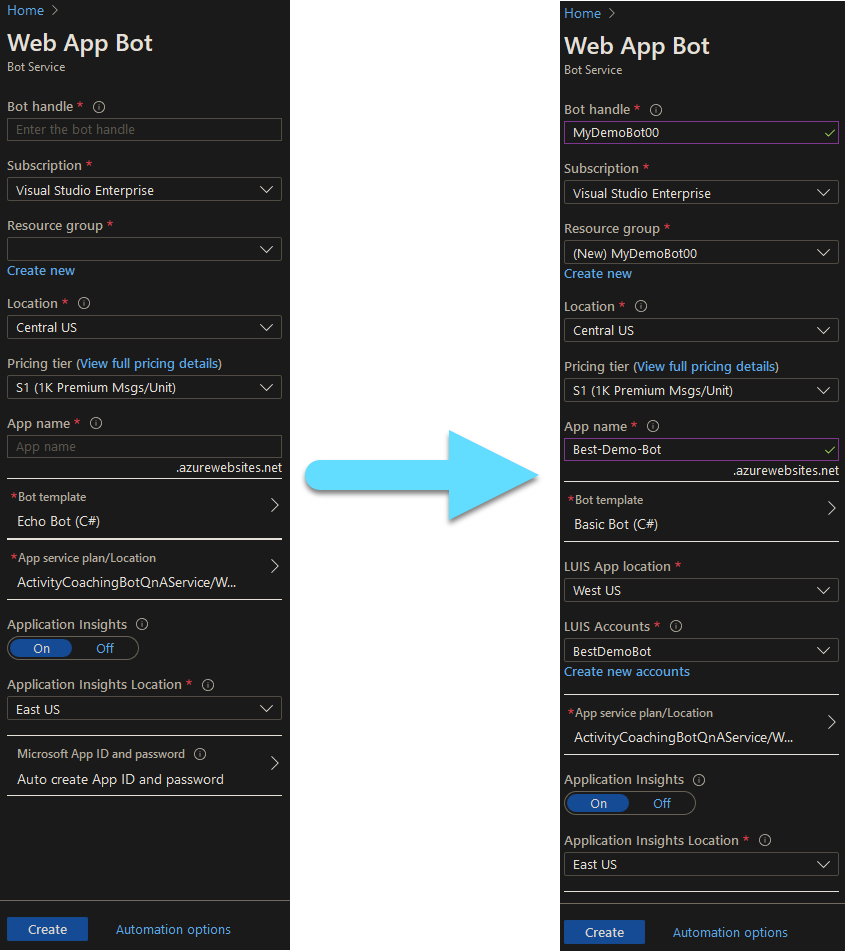

Unlike Web App Bot, the Azure Bot resource only needs the Bot handle and the Resource Group in order to be created.

Let’s have a look at what each field does.

Only the fields with the ‘*’ are mandatory.

- The Bot Handle is a unique identifier for your bot.

- The Subscription field is populated by default with your default Azure subscription.

- Resource Group is the group that will contain the resources you are creating now. You can either create a new one, or use an existing one.

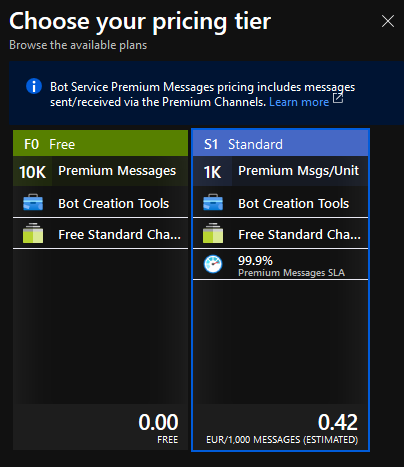

- Choose the Pricing Tier that suits the needs of your bot. It is paid automatically using your Azure credits. Here are your options:

- Lastly, under Microsoft App ID you can choose your own App ID and password for your bot, or you can leave it as is to have one created for you automatically.

As you can see, a LUIS app is not created by default, so you will most probably need to create it afterwards.

When you are ready, click the Review + create button.

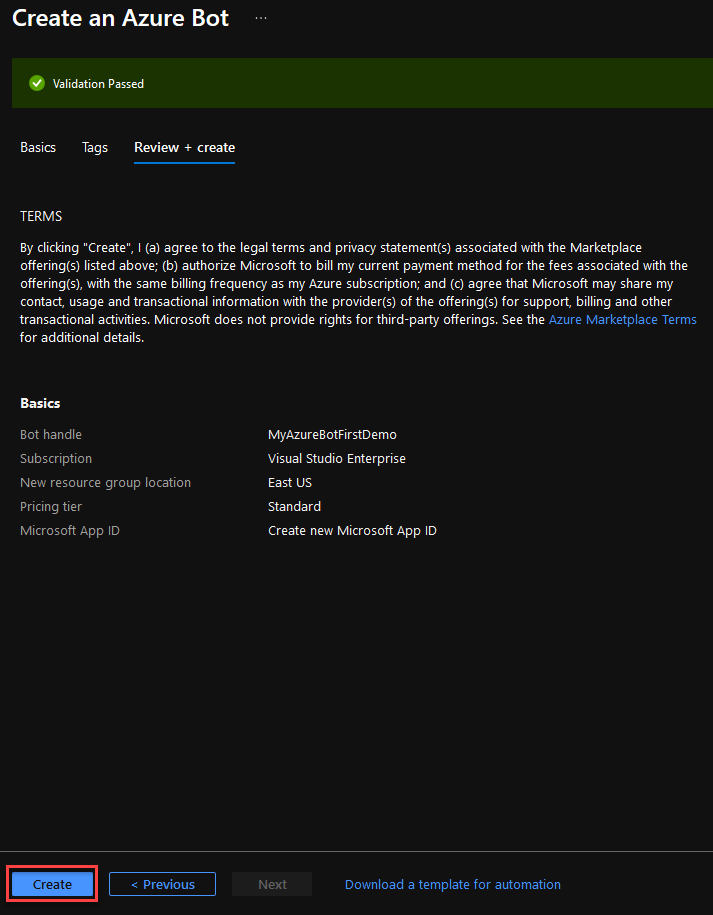

This is the final validation before you create your bot. If everything went as planned, click Create to deploy your resource.

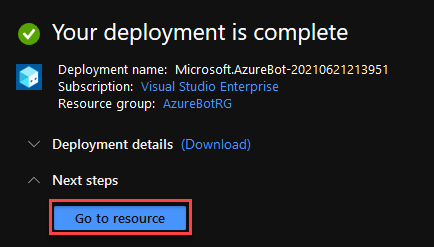

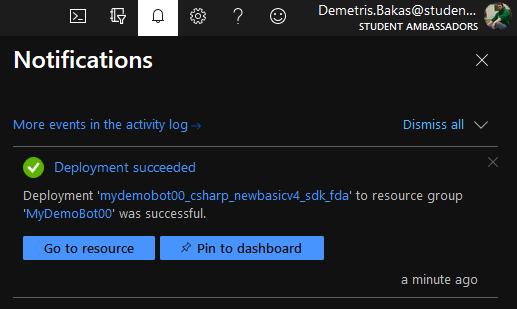

You will be notified once the deployment is complete and you can go to your newly created resource. The deployment process should not take longer than a few minutes.

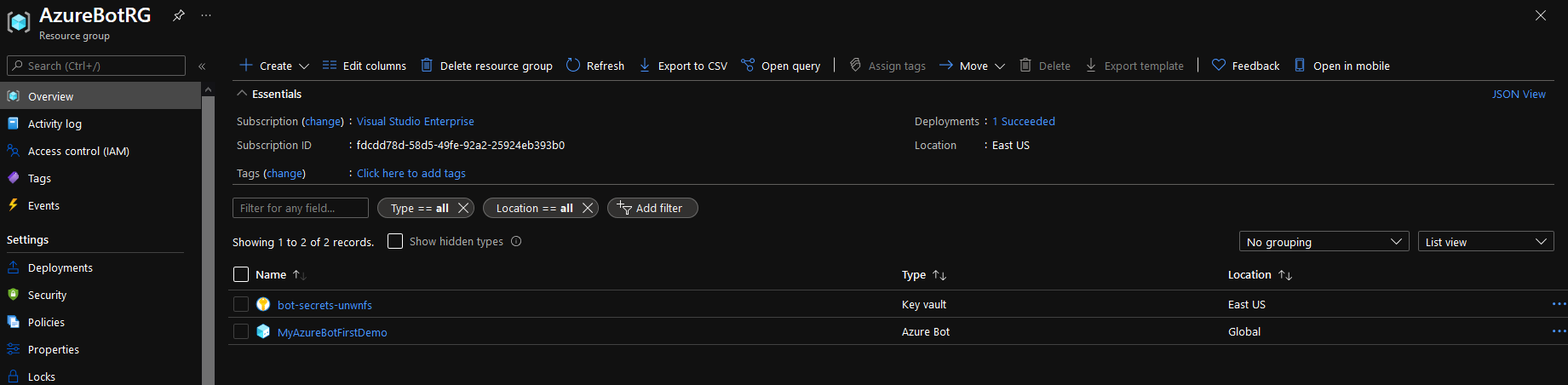

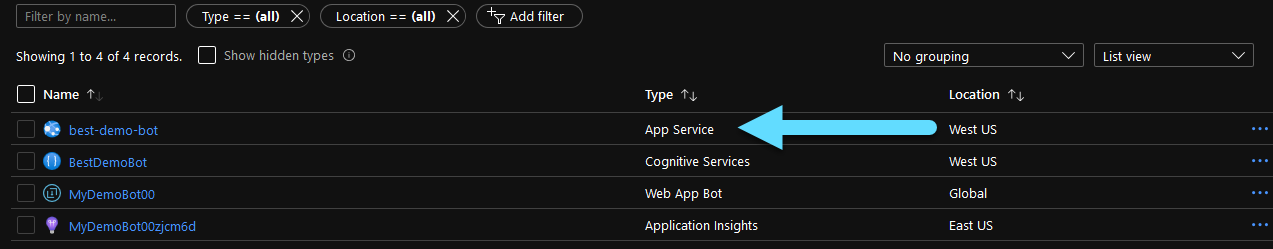

As a side note, if we take a look in our resource group, we only see two resources: our Azure Bot and a Key Vault. The Azure Bot resource handles everything regarding our new bot, and is where we can publish our bot’s code.

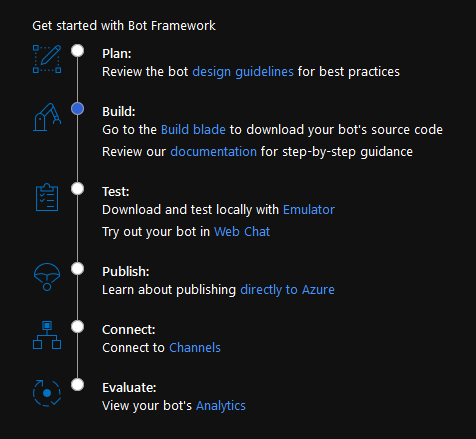

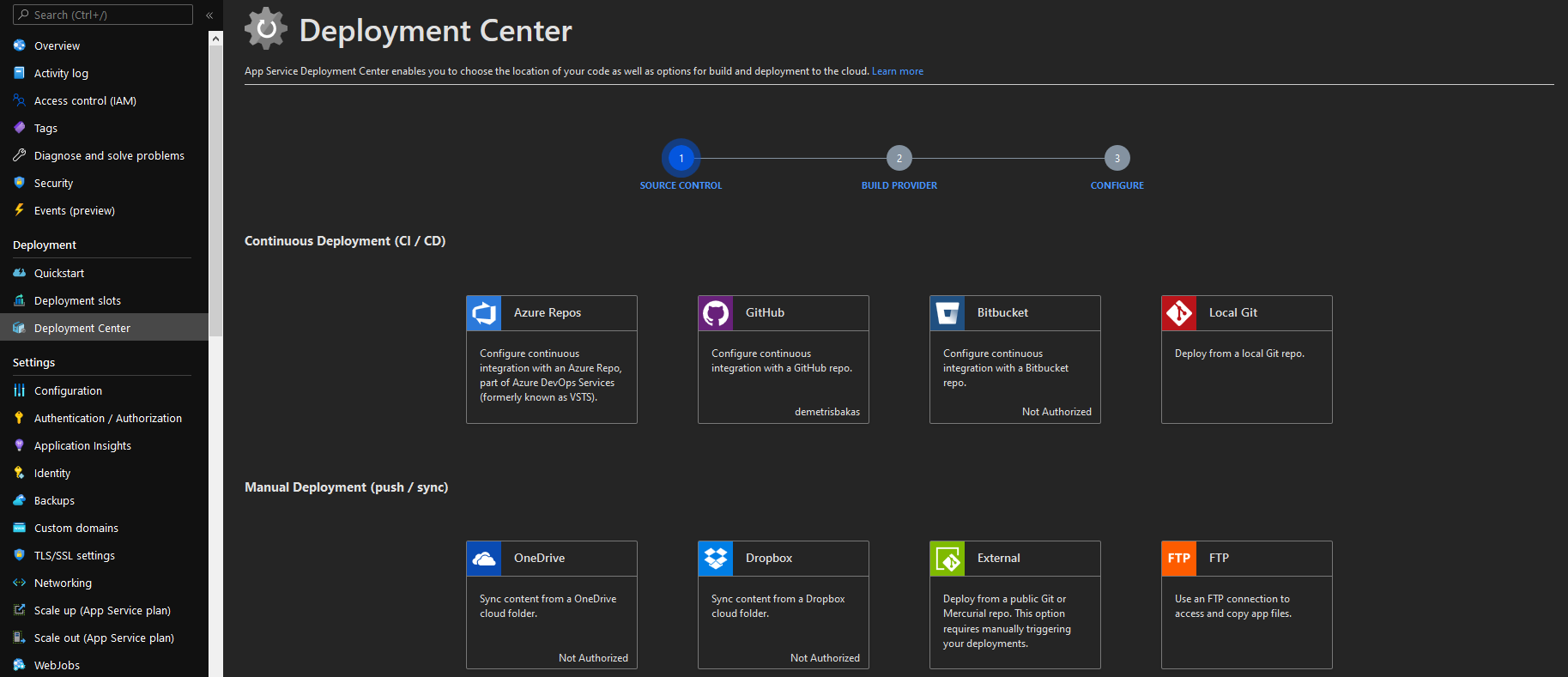

Back in our Azure Bot resource, as you can see, no code is created for us automatically, and for a good reason: this process is now part of the Bot Framework Composer. Download the Composer from here and click the Open in Composer button to start creating your bot.

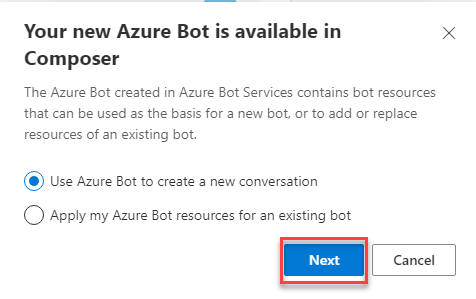

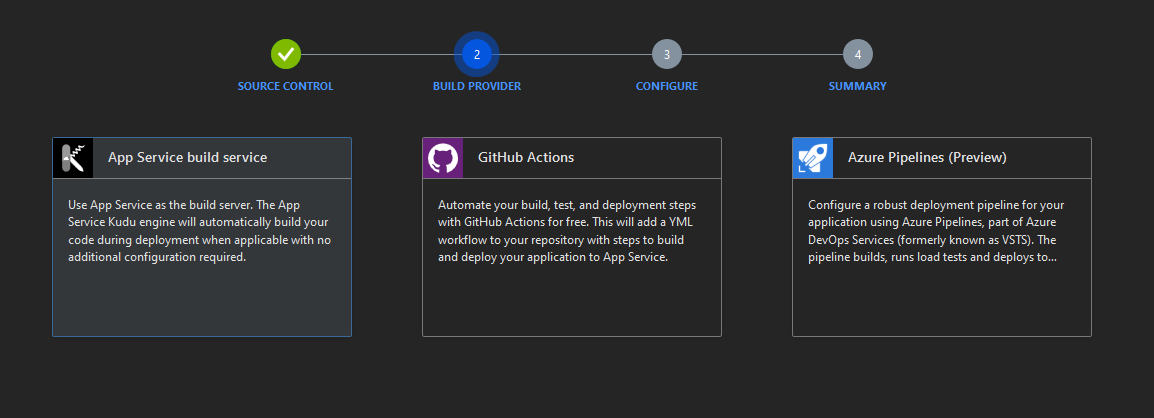

The composer should now pop up. Unless you have a bot already that you wish to publish, select the first option and click Next.

This is where you can choose the type of your bot and have it populated with code. Keep in mind that bots requiring cognitive services like LUIS or QnA Maker will not have those resources created automatically, so you will need to create them on your own afterwards.

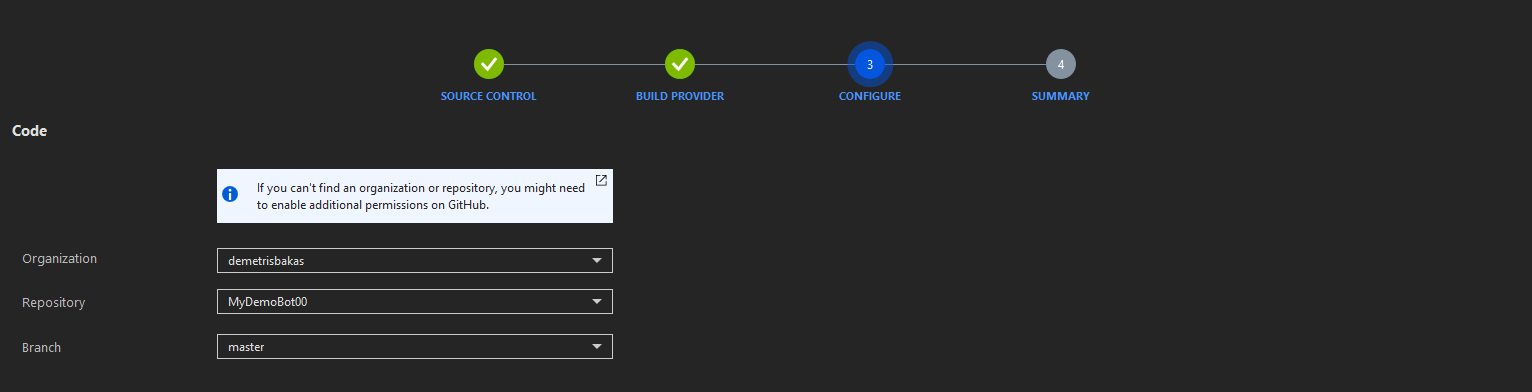

For this example, we are going to use the Core Bot with Language. This requires linking with LUIS, which will be covered in another post. However, it does give us a glimpse of how the Composer works.

Give a name to your bot and click Create.

If you navigate, for example, to the Greeting trigger, you are greeted with an intuitive flowchart of how the trigger works. This aims to make the production process of the chatbot easier to understand and expand on. Here you can start the development of your bot.

And that is your first look at the Azure Bot resource!

Use Adaptive Cards as dialog in Bot Framework

June 12, 2021

Adaptive Cards are platform-agnostic snippets of UI, authored in JSON, that apps and services can openly exchange. When delivered to a specific app, the JSON is transformed into native UI that automatically adapts to its surroundings. It helps design and integrate light-weight UI for all major platforms and frameworks.

Using Adaptive Cards as a dialog lets the developer gather user information that is not easily conveyed through natural language, in a controlled UI.

Create

In this post we will be using a Basic Bot. To create one, follow this post.

Adaptive Cards in Bot Framework work in turns. That means that they can be used to give some information to the user and then continue with the rest of the dialog step, ending in a prompt. Since Adaptive Cards cannot be used as prompts, the user cannot give information to the card, hit a button and then expect the dialog to continue. To fix that, we are going to create our own prompt!

Create a new class and name it AdaptiveCardPrompt.cs. Then paste the following code.

    using Microsoft.Bot.Builder;
    using Microsoft.Bot.Builder.Dialogs;
    using Microsoft.Bot.Schema;
    using Newtonsoft.Json.Linq;
    using System;
    using System.Collections.Generic;
    using System.Threading;
    using System.Threading.Tasks;

    namespace Microsoft.BotBuilderSamples
    {
        // A prompt that waits for the JSON payload submitted by an Adaptive Card.
        public class AdaptiveCardPrompt : Prompt<JObject>
        {
            public AdaptiveCardPrompt(string dialogId, PromptValidator<JObject> validator = null)
                : base(dialogId, validator)
            {
            }

            // Sends the card, or the retry activity when the prompt is repeated.
            protected override async Task OnPromptAsync(ITurnContext turnContext, IDictionary<string, object> state, PromptOptions options, bool isRetry, CancellationToken cancellationToken = default)
            {
                if (turnContext == null)
                {
                    throw new ArgumentNullException(nameof(turnContext));
                }

                if (options == null)
                {
                    throw new ArgumentNullException(nameof(options));
                }

                // Check RetryPrompt (not Prompt) before sending it, so a missing
                // retry activity falls back to the original prompt.
                if (isRetry && options.RetryPrompt != null)
                {
                    await turnContext.SendActivityAsync(options.RetryPrompt, cancellationToken).ConfigureAwait(false);
                }
                else if (options.Prompt != null)
                {
                    await turnContext.SendActivityAsync(options.Prompt, cancellationToken).ConfigureAwait(false);
                }
            }

            // Succeeds only when the incoming message carries a JSON object in Activity.Value.
            protected override Task<PromptRecognizerResult<JObject>> OnRecognizeAsync(ITurnContext turnContext, IDictionary<string, object> state, PromptOptions options, CancellationToken cancellationToken = default)
            {
                if (turnContext == null)
                {
                    throw new ArgumentNullException(nameof(turnContext));
                }

                if (turnContext.Activity == null)
                {
                    throw new ArgumentException("The turn context has no activity.", nameof(turnContext));
                }

                var result = new PromptRecognizerResult<JObject>();

                if (turnContext.Activity.Type == ActivityTypes.Message
                    && turnContext.Activity.Value is JObject value)
                {
                    result.Value = value;
                    result.Succeeded = true;
                }

                return Task.FromResult(result);
            }
        }
    }
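The constructor above also accepts an optional validator, which this post does not use. As a minimal sketch, assuming you want the prompt to repeat until the Name input of the card built later in this post is filled in, a validator could look like the following. CardValidators and RequireNameAsync are hypothetical names that are not part of the original code.

```csharp
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Bot.Builder.Dialogs;
using Newtonsoft.Json.Linq;

namespace Microsoft.BotBuilderSamples
{
    // Hypothetical helper, not part of the original post.
    public static class CardValidators
    {
        // Has the shape of the PromptValidator<JObject> delegate that
        // AdaptiveCardPrompt takes in its constructor.
        public static Task<bool> RequireNameAsync(
            PromptValidatorContext<JObject> promptContext,
            CancellationToken cancellationToken)
        {
            var recognized = promptContext.Recognized;

            // Accept the turn only if a card payload arrived and "Name" is not blank.
            var isValid = recognized.Succeeded
                && !string.IsNullOrWhiteSpace(recognized.Value?.Value<string>("Name"));

            return Task.FromResult(isValid);
        }
    }
}
```

It would be registered with AddDialog(new AdaptiveCardPrompt("adaptive", CardValidators.RequireNameAsync)). If you try this, set RetryPrompt alongside Prompt in the PromptOptions so the retry path has an activity to send.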

We now need a helper class that reads the card’s JSON from the Cards folder. Name this class AdaptiveCard.cs and populate it with the following code.

    using System.IO;

    namespace Microsoft.BotBuilderSamples
    {
        public static class AdaptiveCard
        {
            // Reads ./Cards/<fileName>, relative to the current working directory.
            public static string ReadCard(string fileName)
            {
                string[] BuildPath = { ".", "Cards", fileName };
                var filePath = Path.Combine(BuildPath);
                var fileRead = File.ReadAllText(filePath);
                return fileRead;
            }
        }
    }
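ReadCard resolves "." against the current working directory, which is not always the folder the bot runs from. As a hedged alternative, the path can be built from the application's base directory instead. CardFiles is a hypothetical name, and the sketch assumes the Cards folder is copied to the build output.

```csharp
using System;
using System.IO;

namespace Microsoft.BotBuilderSamples
{
    // Hypothetical variant of the AdaptiveCard helper; not part of the original post.
    public static class CardFiles
    {
        // Builds <application folder>/Cards/<fileName> instead of ./Cards/<fileName>.
        public static string GetCardPath(string fileName)
        {
            if (string.IsNullOrWhiteSpace(fileName))
            {
                throw new ArgumentException("A card file name is required.", nameof(fileName));
            }

            return Path.Combine(AppContext.BaseDirectory, "Cards", fileName);
        }

        // Same result as AdaptiveCard.ReadCard, but independent of the working directory.
        public static string ReadCard(string fileName)
        {
            return File.ReadAllText(GetCardPath(fileName));
        }
    }
}
```

Whether this is needed depends on how the bot is hosted; the original helper is fine as long as the process starts in the project folder.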

Here we are going to create our card. You can create as many cards as you like this way.

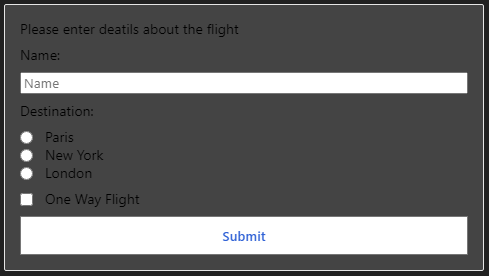

Create a file called bookingDetails.json, and populate it with the code below.

It contains a field to input text, a radio button, a checkbox, a regular button and a few text blocks.

    {
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "type": "AdaptiveCard",
        "version": "1.0",
        "body": [
            {
                "type": "TextBlock",
                "text": "Please enter details about the flight"
            },
            {
                "type": "TextBlock",
                "text": "Name:"
            },
            {
                "type": "Input.Text",
                "id": "Name",
                "placeholder": "Name"
            },
            {
                "type": "TextBlock",
                "text": "Destination:"
            },
            {
                "type": "Input.ChoiceSet",
                "placeholder": "Destination",
                "choices": [
                    {
                        "title": "Paris",
                        "value": "Paris"
                    },
                    {
                        "title": "New York",
                        "value": "New York"
                    },
                    {
                        "title": "London",
                        "value": "London"
                    }
                ],
                "id": "Destination",
                "style": "expanded"
            },
            {
                "type": "Input.Toggle",
                "id": "OneWayFlight",
                "title": "One Way Flight",
                "value": "false"
            }
        ],
        "actions": [
            {
                "type": "Action.Submit",
                "title": "Submit"
            }
        ]
    }

This is what the finished card will look like.
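When the user clicks Submit, the values are sent back keyed by the id of each input, so those ids are what the bot code has to match. As a small sketch for checking them, the following lists the ids of the inputs in a card's body. CardInspector is a hypothetical name, and the code only looks at top-level body elements.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Newtonsoft.Json.Linq;

// Hypothetical helper, not part of the original post.
public static class CardInspector
{
    // Returns the "id" of every Input.* element in the card's top-level body.
    public static List<string> GetInputIds(string cardJson)
    {
        var body = JObject.Parse(cardJson)["body"] as JArray ?? new JArray();

        return body
            .Where(element => ((string)element["type"] ?? string.Empty)
                .StartsWith("Input.", StringComparison.Ordinal))
            .Select(element => (string)element["id"])
            .ToList();
    }
}
```

For bookingDetails.json this returns Name, Destination and OneWayFlight, in that order.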

We also need to create a class that will hold the data gathered from the card, as it will be deserialized from JSON format. Create a new class called BookingDetailsJSON and add the code below. We only gather three values from the card, so we will create three properties with their respective names.

    public class BookingDetailsJSON
    {
        public string Name { get; set; }
        public string Destination { get; set; }
        public bool OneWayFlight { get; set; }
    }

Implement

Now go to the dialog that will use the new card prompt. You can create a new dialog, or use an existing one if you prefer.

Add the following using statement.

    using Newtonsoft.Json;

Add the new prompt we created along with the rest of the prompts for the dialog.

    AddDialog(new AdaptiveCardPrompt("adaptive"));

In the intro step, or the step that will show the card, add the following code.

    var cardJson = AdaptiveCard.ReadCard("bookingDetails.json");

    var cardAttachment = new Attachment()
    {
        ContentType = "application/vnd.microsoft.card.adaptive",
        Content = JsonConvert.DeserializeObject(cardJson),
    };

    var options = new PromptOptions
    {
        Prompt = new Activity
        {
            Attachments = new List<Attachment>() { cardAttachment },
            Type = ActivityTypes.Message
        }
    };

    return await stepContext.PromptAsync("adaptive", options, cancellationToken);

In the next step, we will consume the JSON from the card, show the information to the user and end the dialog.

    var result = JsonConvert.DeserializeObject<BookingDetailsJSON>(stepContext.Result.ToString());

    var messageText = $"Thank you for providing your data.\n\nName: {result.Name}\n\nDestination: {result.Destination}\n\nOne way flight: {result.OneWayFlight}";

    var promptMessage = MessageFactory.Text(messageText, messageText, InputHints.ExpectingInput);

    await stepContext.PromptAsync(nameof(TextPrompt), new PromptOptions { Prompt = promptMessage }, cancellationToken);

    return await stepContext.EndDialogAsync(result, cancellationToken);
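The two snippets above are step bodies without their surrounding methods. As a rough sketch of how they might be wired together, here is a complete dialog with both steps. BookingCardDialog is a hypothetical name, and deriving from ComponentDialog rather than the Basic Bot's CancelAndHelpDialog is an assumption made to keep the example short. For the same reason the summary is sent with SendActivityAsync, so no TextPrompt needs to be registered.

```csharp
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Bot.Builder;
using Microsoft.Bot.Builder.Dialogs;
using Microsoft.Bot.Schema;
using Newtonsoft.Json;

namespace Microsoft.BotBuilderSamples
{
    // Hypothetical dialog. AdaptiveCardPrompt, AdaptiveCard and BookingDetailsJSON
    // are the classes created earlier in this post.
    public class BookingCardDialog : ComponentDialog
    {
        private const string CardPromptId = "adaptive";

        public BookingCardDialog()
            : base(nameof(BookingCardDialog))
        {
            AddDialog(new AdaptiveCardPrompt(CardPromptId));
            AddDialog(new WaterfallDialog(nameof(WaterfallDialog), new WaterfallStep[]
            {
                ShowCardStepAsync,
                SummaryStepAsync,
            }));

            InitialDialogId = nameof(WaterfallDialog);
        }

        // Step 1: send the card and wait for the user to submit it.
        private async Task<DialogTurnResult> ShowCardStepAsync(WaterfallStepContext stepContext, CancellationToken cancellationToken)
        {
            var cardAttachment = new Attachment
            {
                ContentType = "application/vnd.microsoft.card.adaptive",
                Content = JsonConvert.DeserializeObject(AdaptiveCard.ReadCard("bookingDetails.json")),
            };

            var options = new PromptOptions
            {
                Prompt = new Activity
                {
                    Attachments = new List<Attachment> { cardAttachment },
                    Type = ActivityTypes.Message,
                },
            };

            return await stepContext.PromptAsync(CardPromptId, options, cancellationToken);
        }

        // Step 2: read the submitted values, echo them back and end the dialog.
        private async Task<DialogTurnResult> SummaryStepAsync(WaterfallStepContext stepContext, CancellationToken cancellationToken)
        {
            var details = JsonConvert.DeserializeObject<BookingDetailsJSON>(stepContext.Result.ToString());

            var messageText = $"Thank you for providing your data.\n\nName: {details.Name}\n\nDestination: {details.Destination}\n\nOne way flight: {details.OneWayFlight}";
            await stepContext.Context.SendActivityAsync(MessageFactory.Text(messageText), cancellationToken);

            return await stepContext.EndDialogAsync(details, cancellationToken);
        }
    }
}
```

A dialog built this way does not go through CancelAndHelpDialog, so the change described next only applies when your dialog inherits from that class, as the Basic Bot dialogs do.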

Lastly, a tricky part is that the Basic Bot has a class called CancelAndHelpDialog that handles the input text from the user. However, since we are using the card to proceed in the dialog, the input text will be null, and CancelAndHelpDialog does not like that…

To fix this, go to the CancelAndHelpDialog.cs file and navigate to this line. It should be on line 35.

    if (innerDc.Context.Activity.Type == ActivityTypes.Message)

Then replace it with the following line, to let it ignore null values.

    if (innerDc.Context.Activity.Type == ActivityTypes.Message && innerDc.Context.Activity.Text != null)

And we are done! Let’s test our bot.

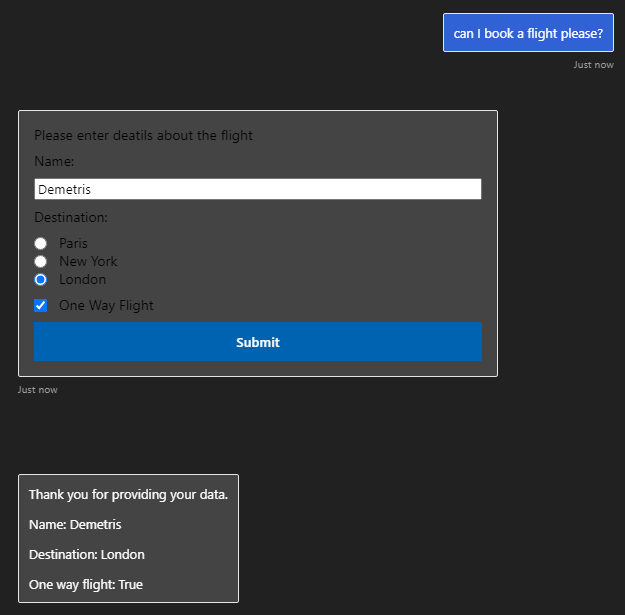

Test

The card appears as expected. I enter my data and click Submit; the dialog continues and the bot gathers my information correctly!

That is how you can use Adaptive Cards to follow dialog and gather data from the user!

Value prediction using ML.NET

June 02, 2021

ML.NET is an open source, cross-platform machine learning framework created by Microsoft that lets .NET developers build custom models from their own data. Using the available model builder, data can be turned into a prediction in seconds. ML.NET runs on Windows, Linux, and macOS using .NET Core, or Windows using .NET Framework. 64 bit is supported on all platforms. 32 bit is supported on Windows, except for TensorFlow, LightGBM, and ONNX-related functionality. You can learn more here.

Value prediction uses machine learning to predict values based on the rest of the data given in a dataset.

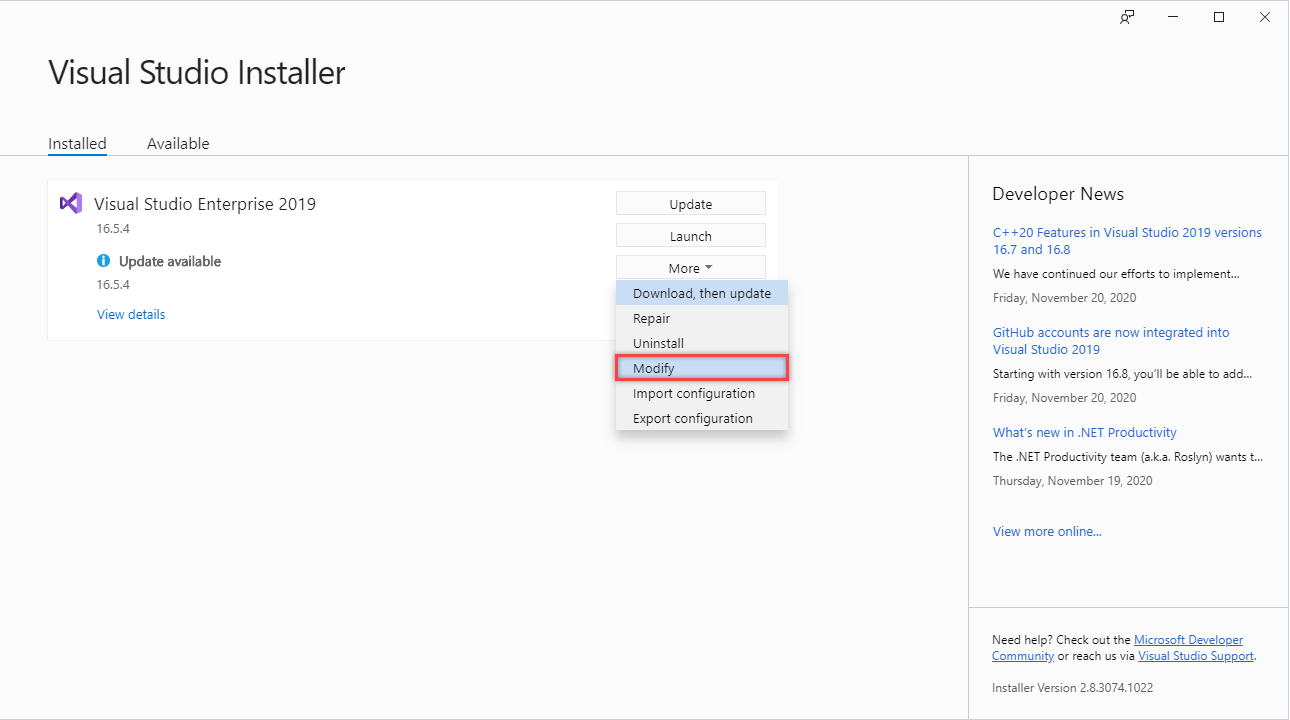

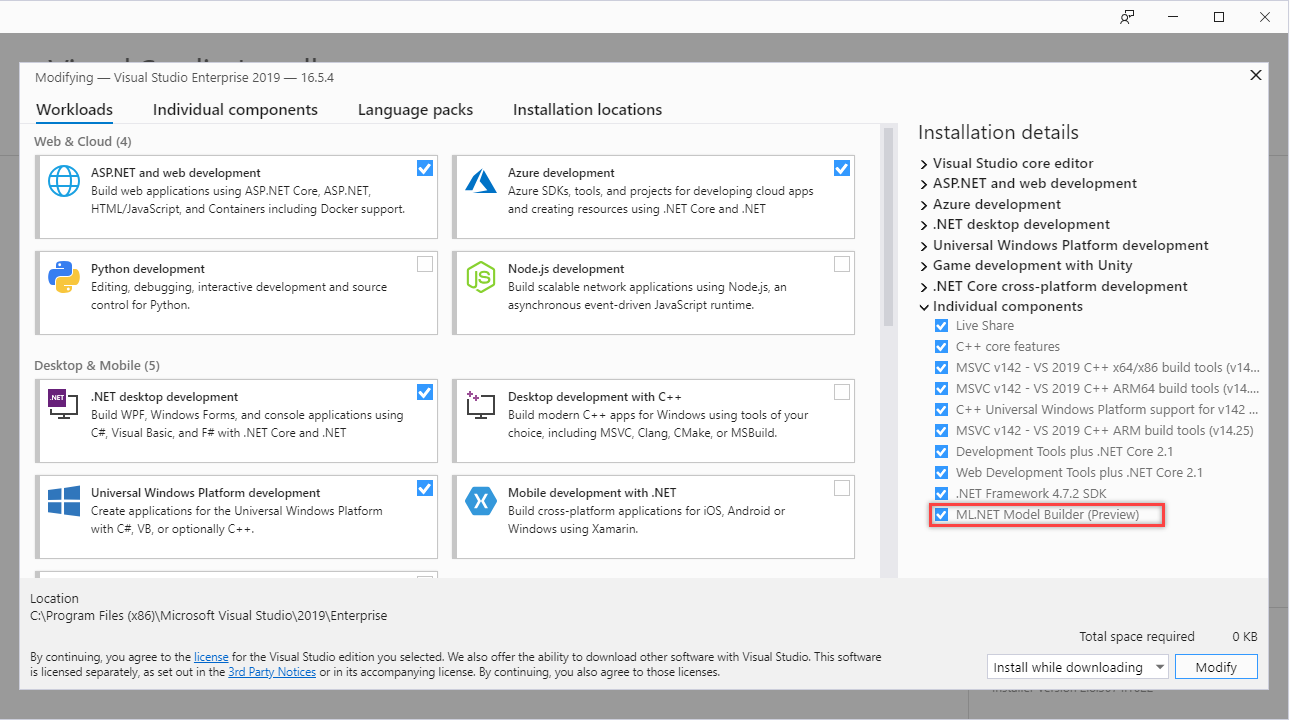

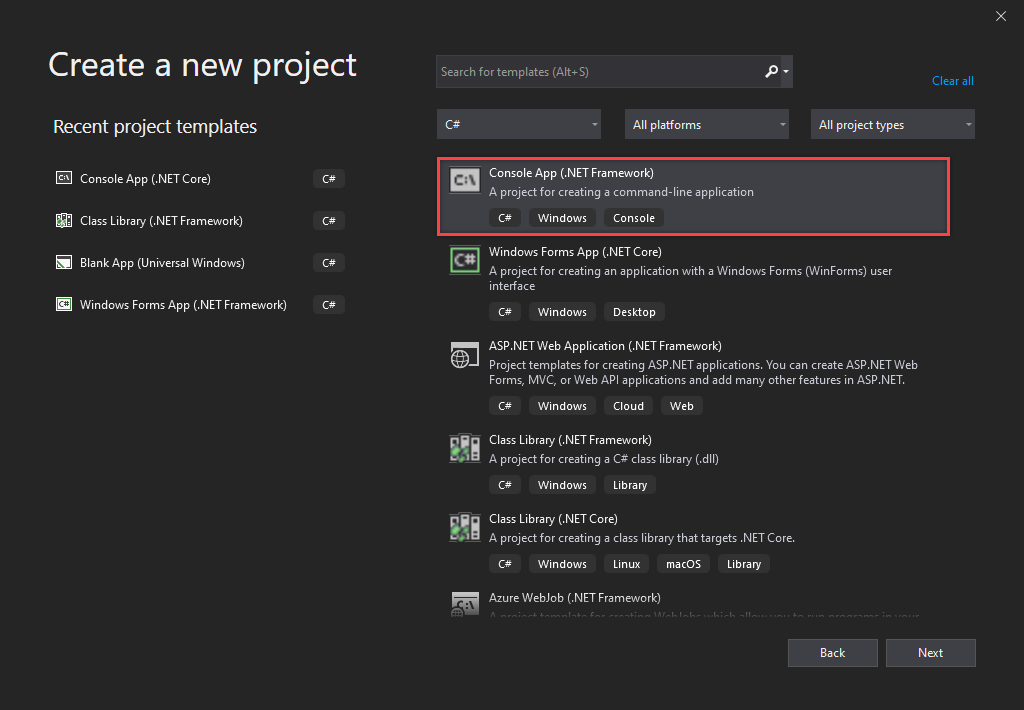

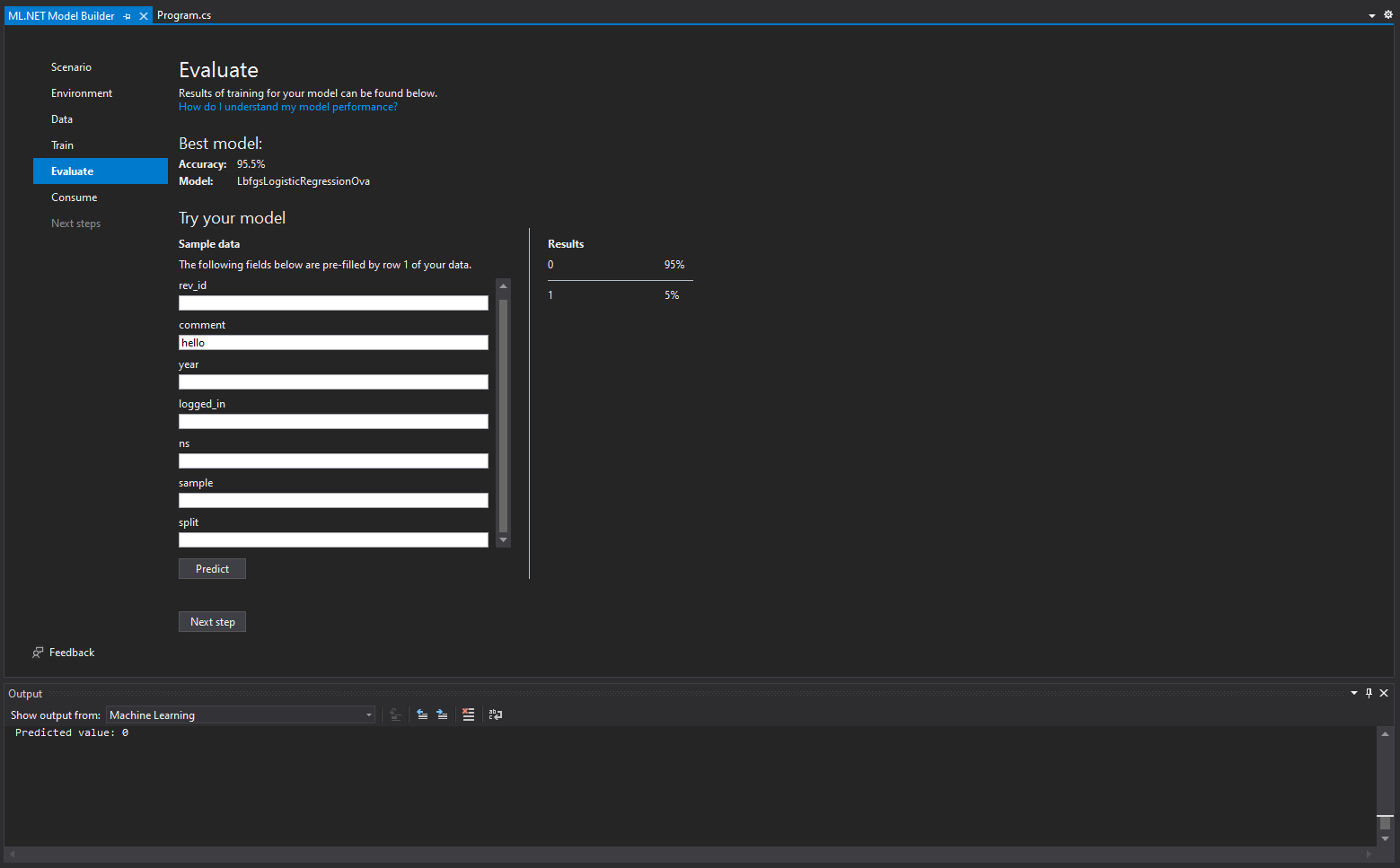

Create Model

To set up ML.NET on your machine, follow this post.

After you are done, launch Visual Studio and create a new Console App (.NET Core).

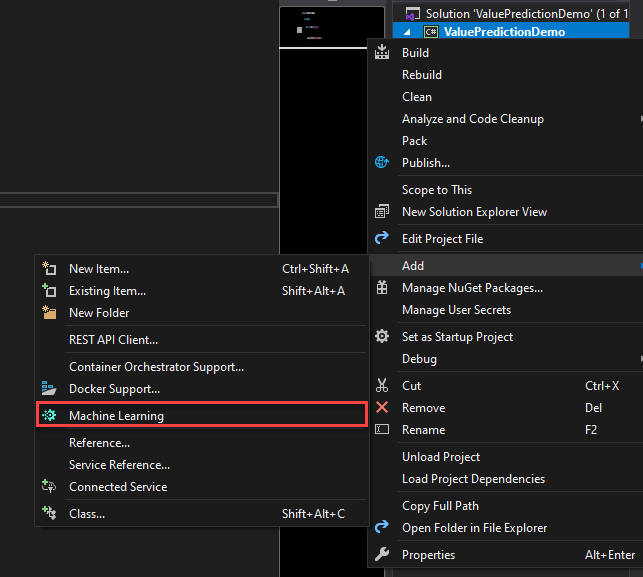

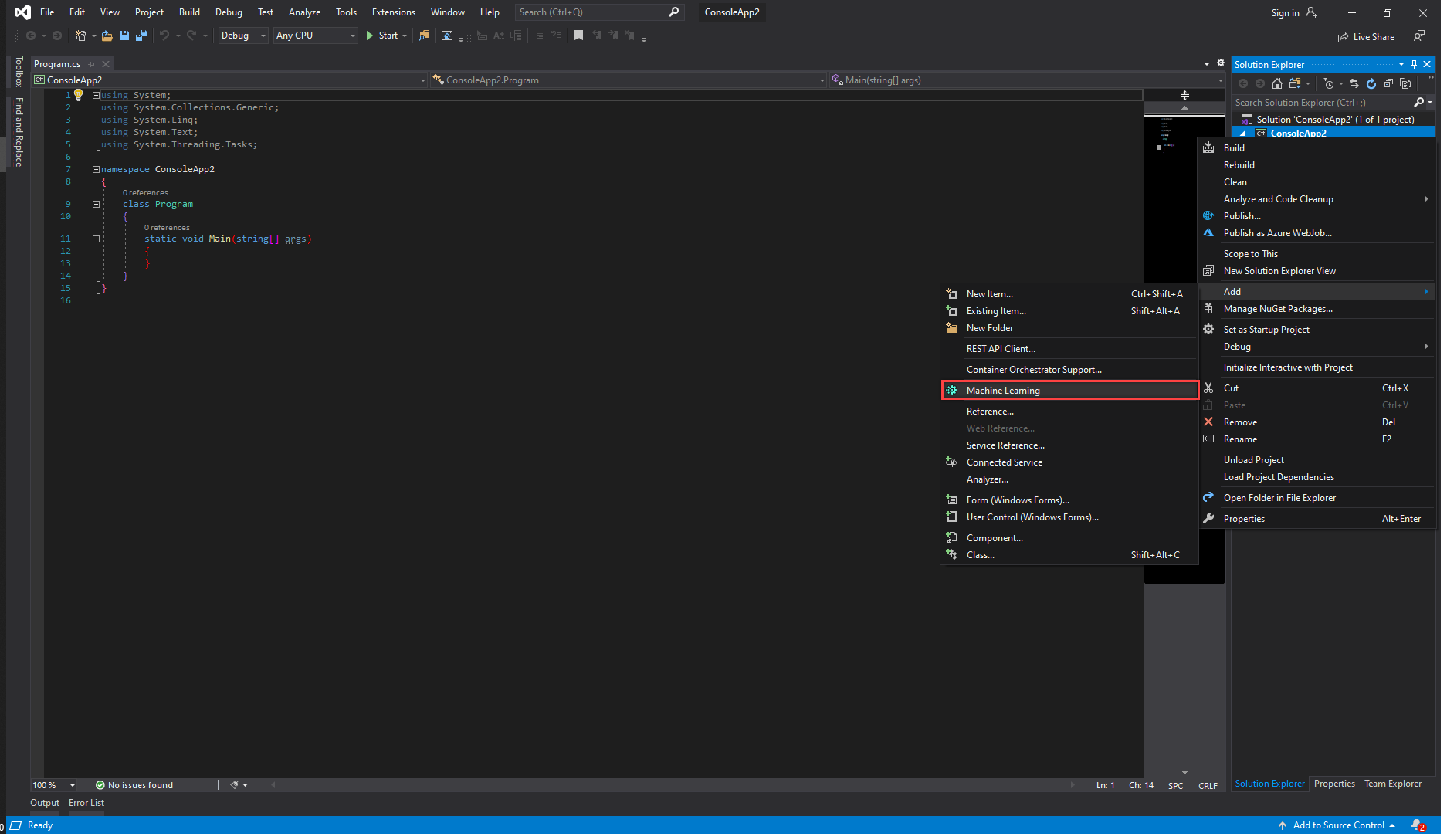

Then right click on your project in the Solution Explorer and select Add -> Machine Learning.

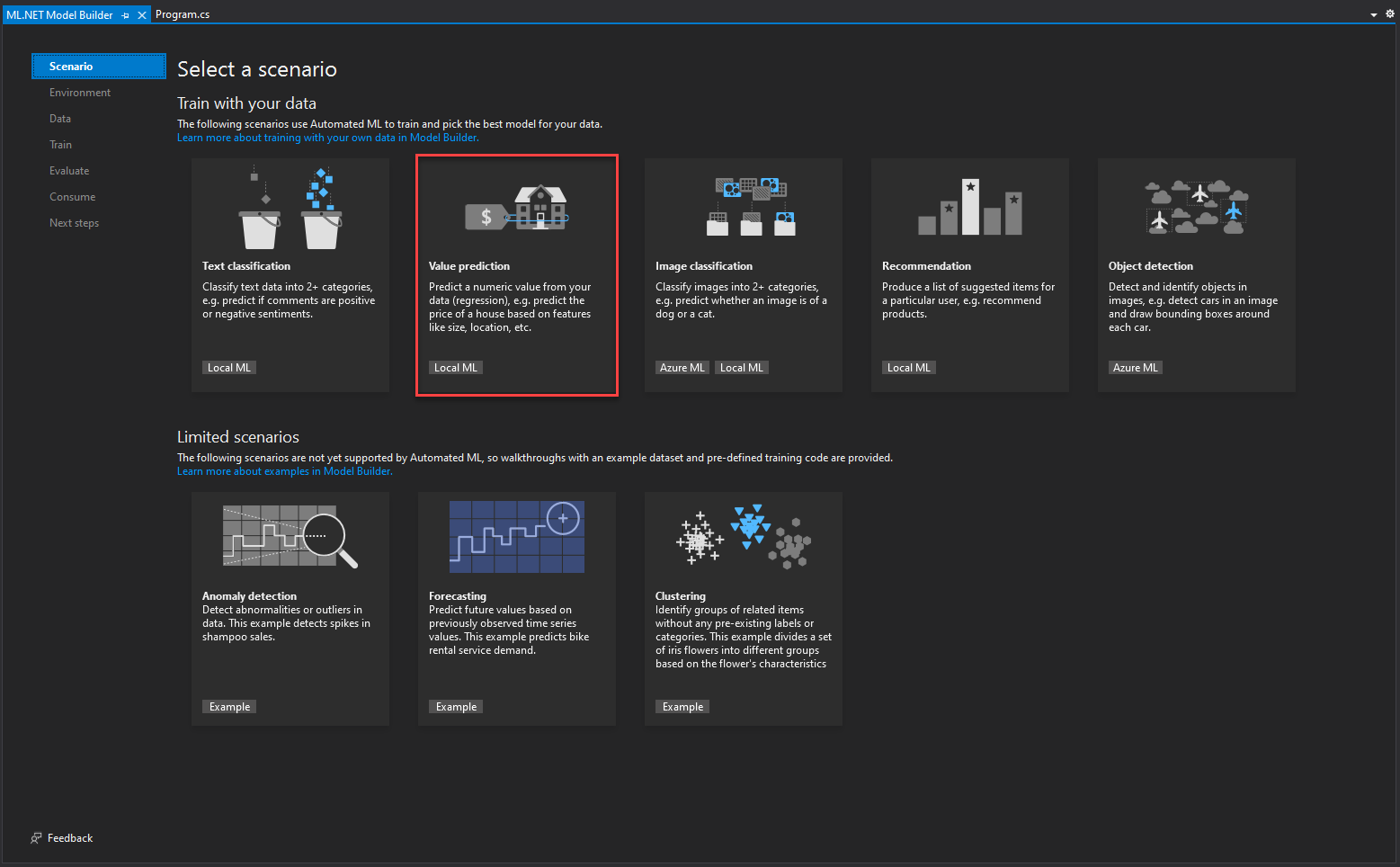

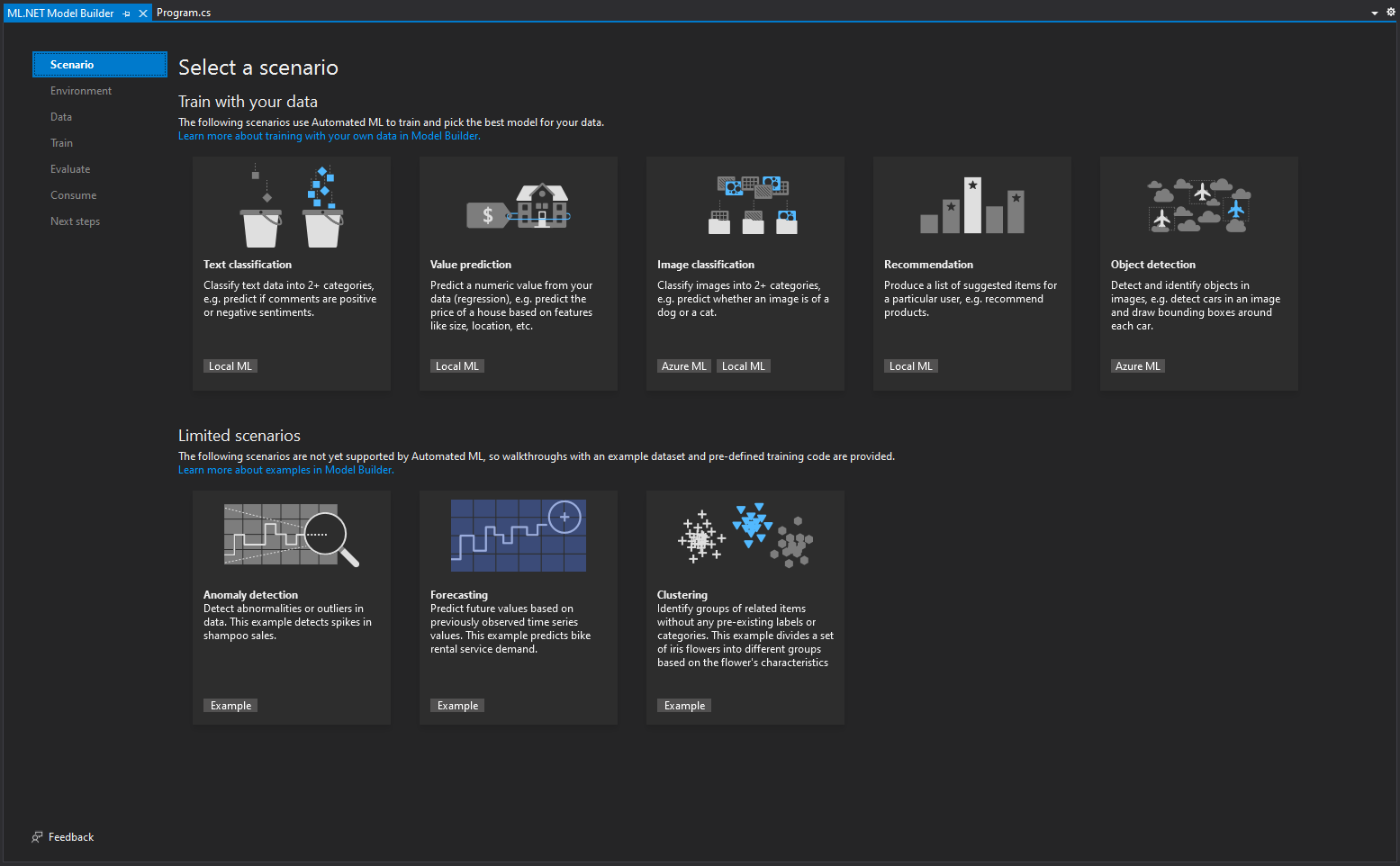

You will be presented with the ML.NET Model Builder. Select the Value prediction scenario.

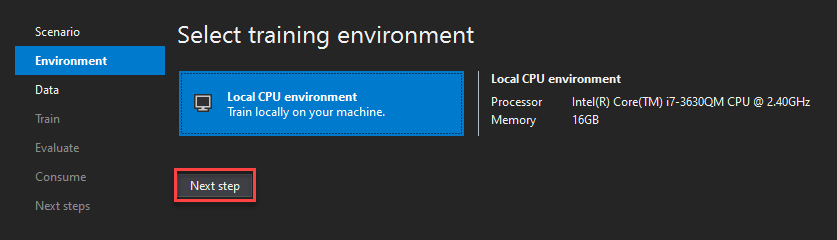

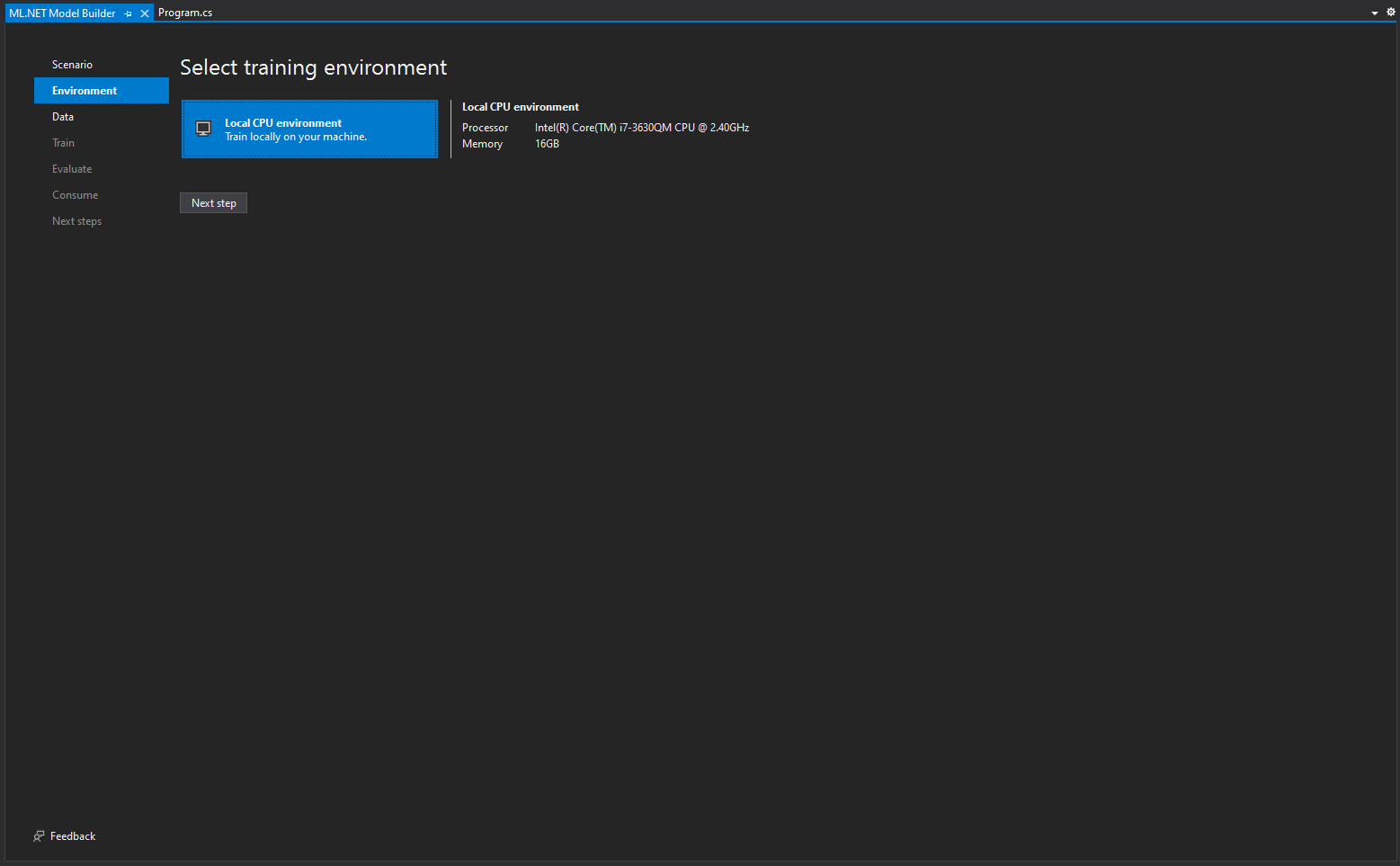

In this step you can choose where to run the model. You can choose your local machine and click Next step.

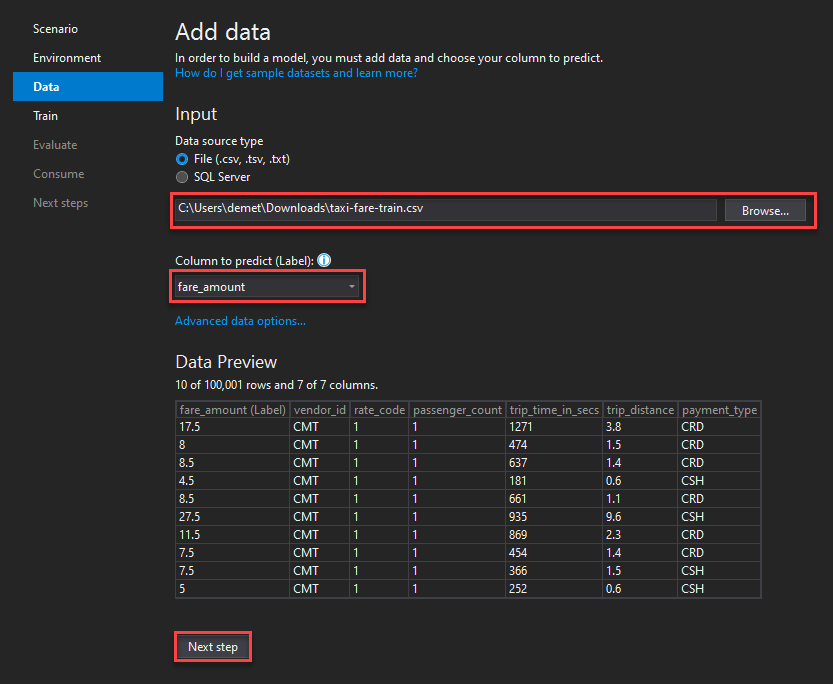

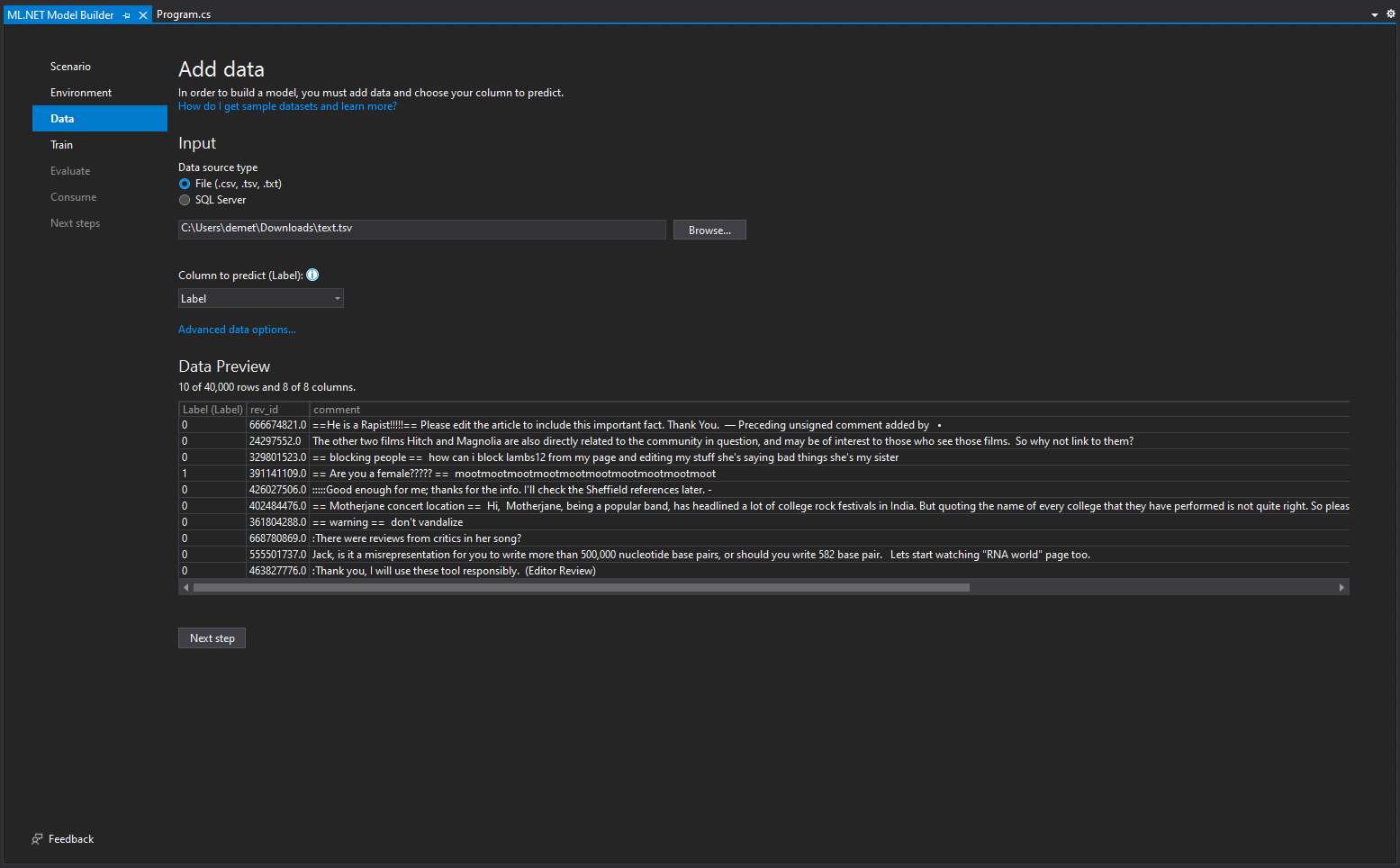

Here you can input your dataset. Keep the File option selected, or choose the SQL Server option if your data lives in a SQL Server database.

Click Browse and select your dataset. We are using this sample dataset for this demo.

Next, in Column to predict, choose the column of the dataset that contains the values the model is going to predict. For this dataset you can choose the fare_amount column. Then click Next step.

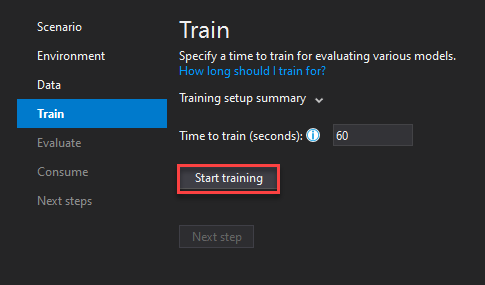

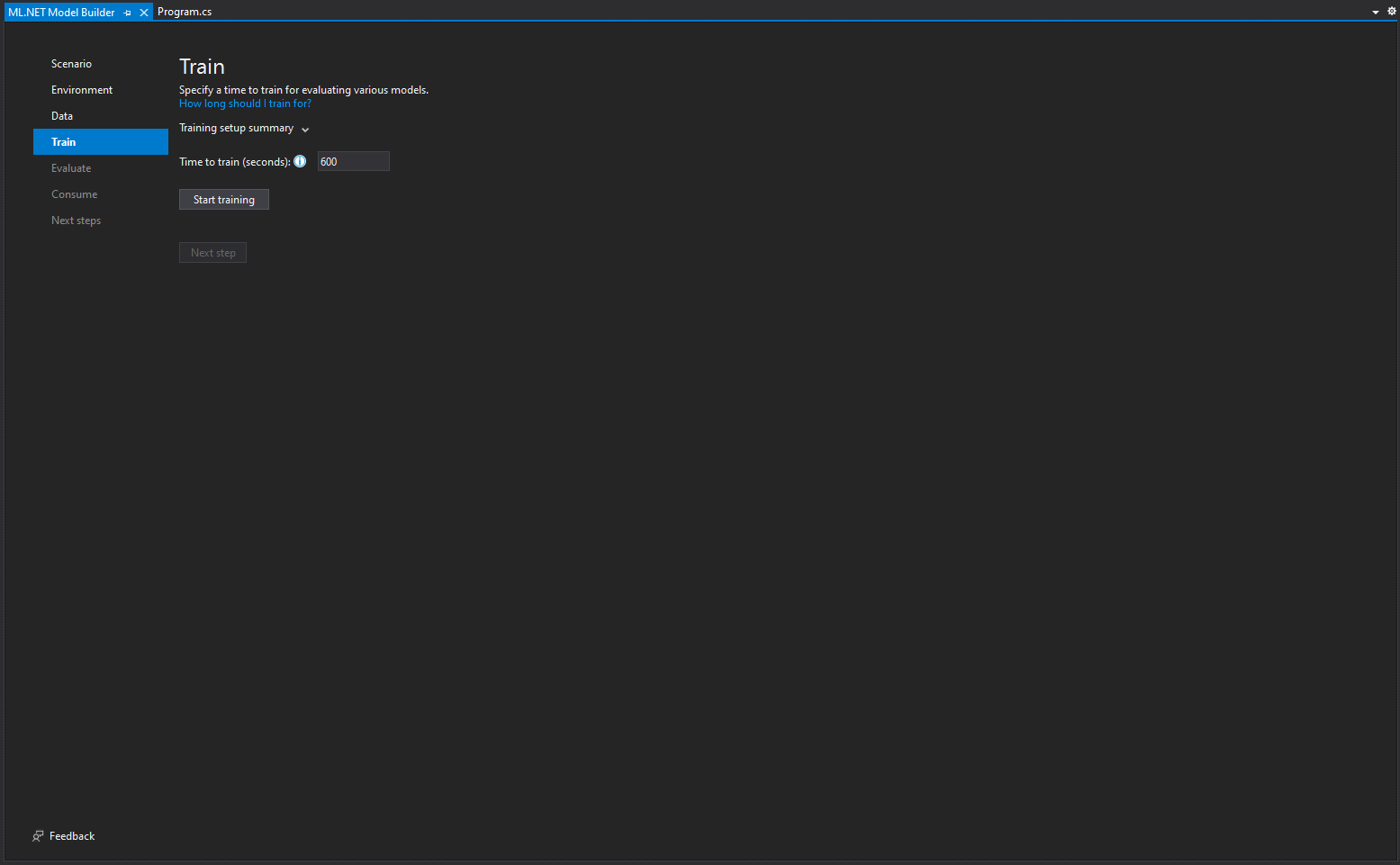

Here you can choose your training time. This depends on many variables, such as your dataset size and your processor. You can find some estimates here. The default value is 10 seconds, but you can step it up to 60 to give the model a bit more time to train. Then click Start training.

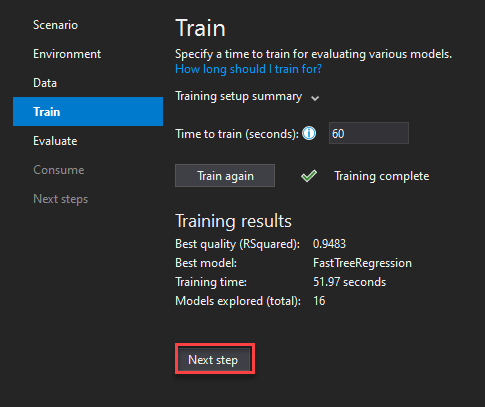

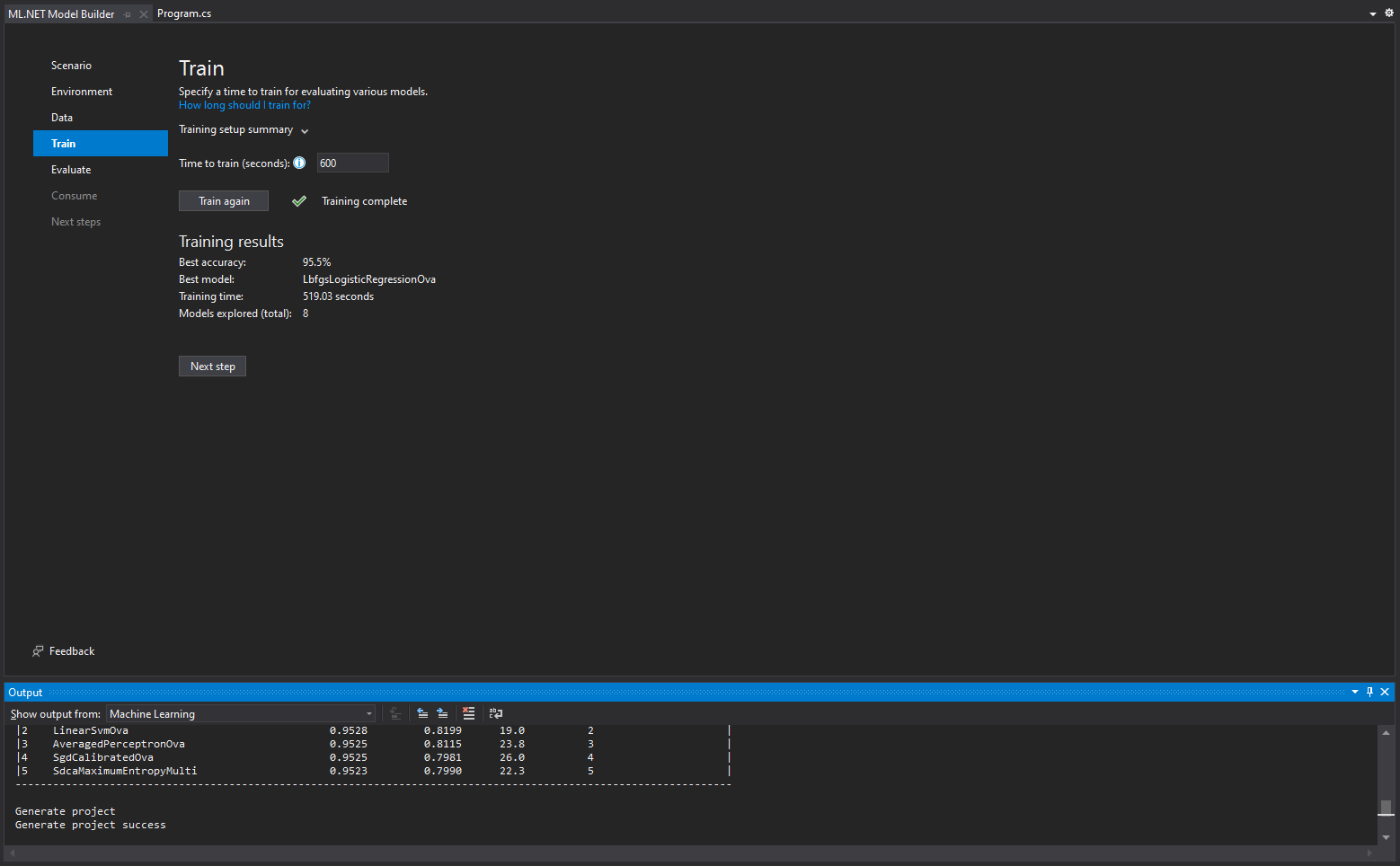

After the training is complete you can see which is the best model for your use case. You do not need to do anything, just click Next step.

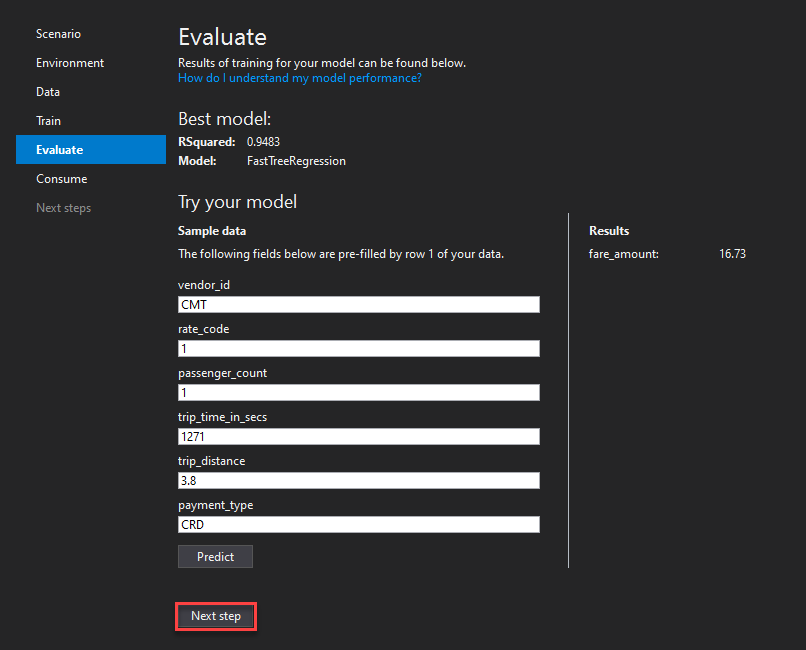

In the Evaluate step you can try some predictions to see if you are satisfied with your model. After that click Next step.

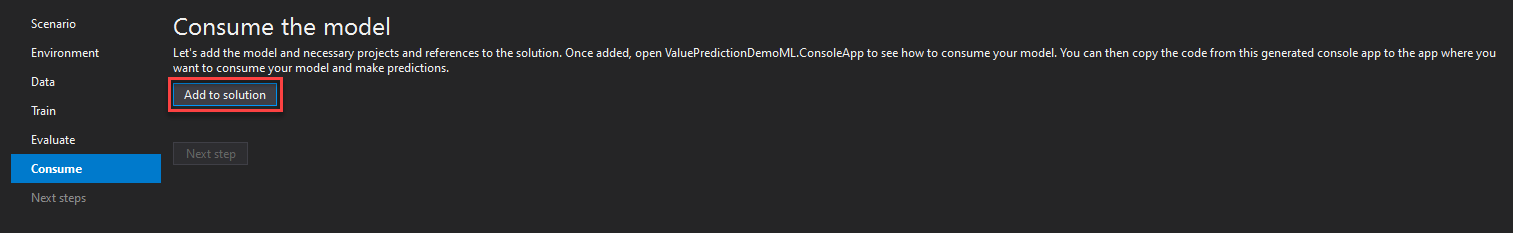

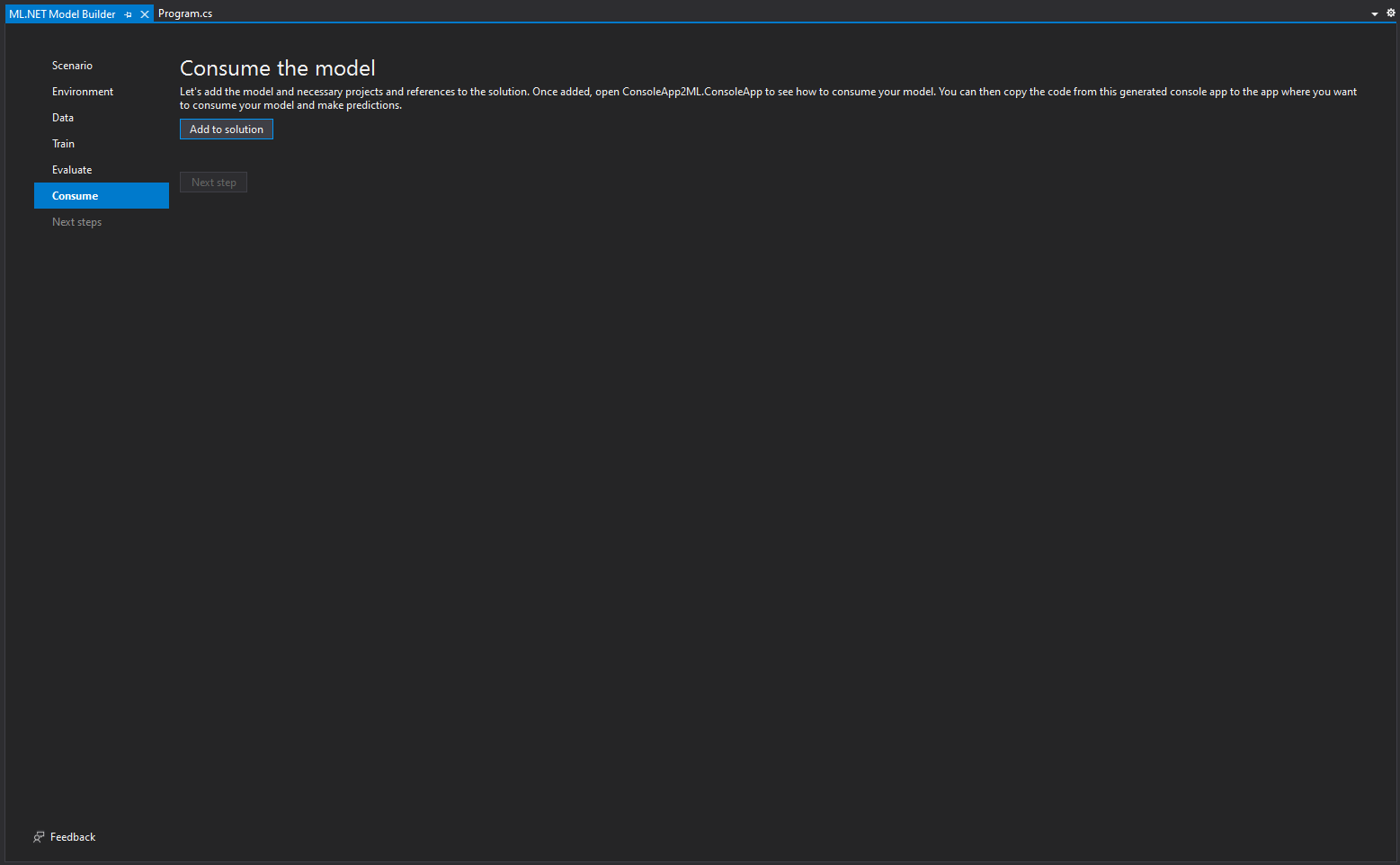

And you are ready to consume your model. Just click the Add to solution button to import it into your Console App.

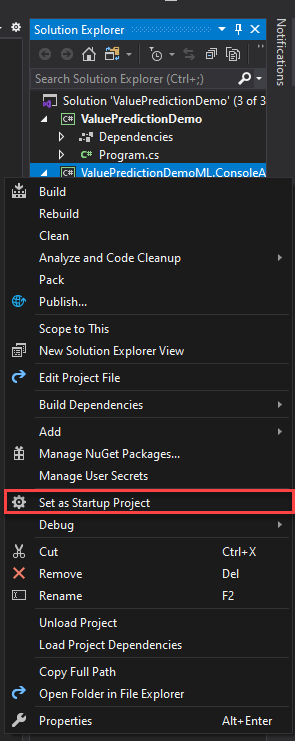

Go to the Solution Explorer, right click on the newly created Console App (not the Model) and select Set as Startup Project.

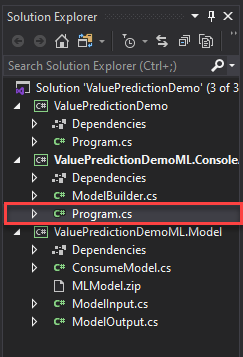

Now navigate to Program.cs to make any changes you want to your project.

And you are all set. Your value prediction model is good to go. The only thing left to do, is to test it.

Test

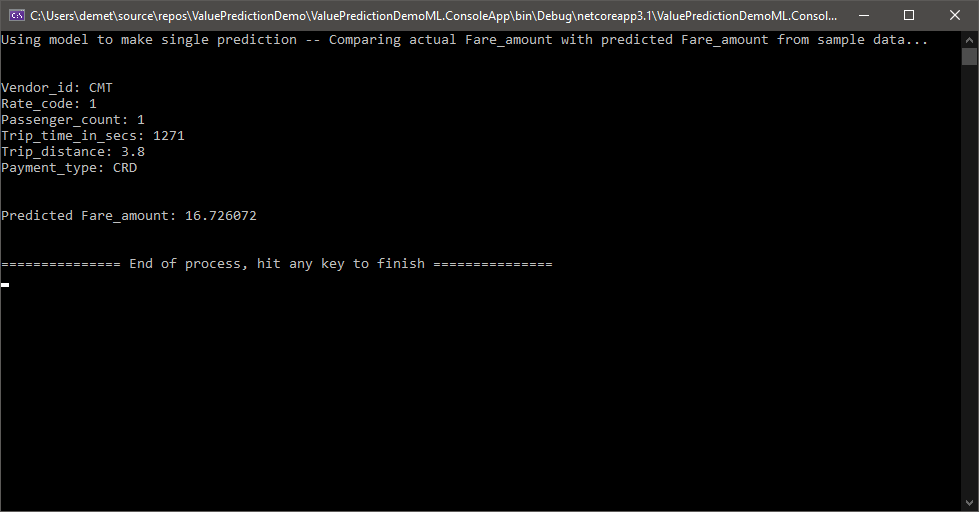

Navigate to the Main() function of your newly created Program.cs file. The following code gives the input based on which the model will make the prediction. You can change the data here as you like.

    ModelInput sampleData = new ModelInput()
    {
        Vendor_id = @"CMT",
        Rate_code = 1F,
        Passenger_count = 1F,
        Trip_time_in_secs = 1271F,
        Trip_distance = 3.8F,
        Payment_type = @"CRD",
    };
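The generated Program.cs then hands this object to the trained model. The generated helper code differs between Model Builder versions, so as a version-independent sketch, the same prediction can be made with the ML.NET API directly. FarePredictor is a hypothetical name. The MLModel.zip path and the Score property on ModelOutput are assumptions about what Model Builder generated for you, so check them against your own project.

```csharp
using Microsoft.ML;

// ModelInput and ModelOutput are the classes Model Builder generated;
// add a using directive for the namespace of your generated model project.
public static class FarePredictor
{
    // modelPath is an assumed location of the trained model file.
    public static float PredictFare(ModelInput input, string modelPath = "MLModel.zip")
    {
        var mlContext = new MLContext();

        // Load the trained pipeline from disk; the input schema is not needed here.
        ITransformer model = mlContext.Model.Load(modelPath, out _);

        // A prediction engine maps one ModelInput to one ModelOutput.
        var engine = mlContext.Model.CreatePredictionEngine<ModelInput, ModelOutput>(model);

        // For a value prediction (regression) model, Score holds the predicted value.
        return engine.Predict(input).Score;
    }
}
```

Calling FarePredictor.PredictFare(sampleData) would return the predicted fare_amount for the trip described above.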

We run the solution and we get the results for the default input values.

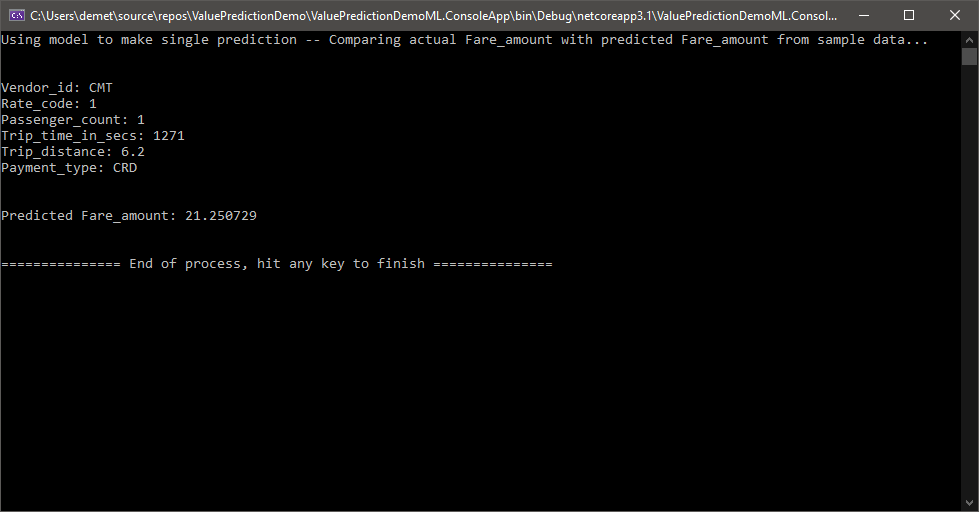

For the second test we changed the trip distance from 3.8 to 6.2 and as expected the predicted fare amount is significantly higher.

This is how you can use ML.NET to predict values in a fast and easy way!

Proactive Messages in Microsoft Teams

May 22, 2021

Proactive messaging gives your bot the ability to notify the user with messages that can be written and modified by the developer. Because each channel handles proactive messages and dialogs differently, using them across channels can be confusing.

Preface

As you probably already know, not every piece of code behaves the same way in every supported channel, and proactive messages are one example. There are many implementations out there, but while they might work in the emulator and in the Web Chat, major channels like Microsoft Teams might need some changes to get them to work correctly. This post aims to give you an implementation that works in many major channels, including Microsoft Teams, and still allows you to have the full functionality of your bot in the Web Chat and emulator.

You can find the official proactive messages sample here, and although it will work out of the box with Teams, it might not be suitable for every use case. For example, you might need to create a dialog bot that has a slightly different structure, and that’s what we are going to do in this post. Here is an older post showing proactive messages working in a dialog bot, and while this works fine with the Web Chat, Teams does not support it.

Microsoft Teams and many other channels never call the OnConversationUpdateActivityAsync() function, which is the function used to capture the conversation reference. To use our bot in these channels, we need to capture the reference in a function that is called often enough (at every message). That function is OnMessageActivityAsync().

Create

In this post we will be using a Basic Bot created by Azure Bot Service. You can find out how to create one in this post.

This is the controller that will handle the proactive messages. Create a new class named NotifyController.cs and paste the following code into it.

    using System;
    using System.Collections.Concurrent;
    using System.Net;
    using System.Threading;
    using System.Threading.Tasks;
    using Microsoft.AspNetCore.Mvc;
    using Microsoft.Bot.Builder;
    using Microsoft.Bot.Builder.Integration.AspNet.Core;
    using Microsoft.Bot.Schema;
    using Microsoft.Extensions.Configuration;

    namespace ProactiveBot.Controllers
    {
        [Route("api/notify")]
        [ApiController]
        public class NotifyController : ControllerBase
        {
            private readonly IBotFrameworkHttpAdapter _adapter;
            private readonly string _appId;
            private readonly ConcurrentDictionary<string, ConversationReference> _conversationReferences;

            public NotifyController(IBotFrameworkHttpAdapter adapter, IConfiguration configuration, ConcurrentDictionary<string, ConversationReference> conversationReferences)
            {
                _adapter = adapter;
                _conversationReferences = conversationReferences;
                _appId = configuration["MicrosoftAppId"] ?? string.Empty;

                if (string.IsNullOrEmpty(_appId))
                {
                    _appId = Guid.NewGuid().ToString(); //if no AppId, use a random Guid
                }
            }

            public async Task<IActionResult> Get()
            {
                // Send the proactive message to every conversation captured so far.
                foreach (var conversationReference in _conversationReferences.Values)
                {
                    await ((BotAdapter)_adapter).ContinueConversationAsync(_appId, conversationReference, BotCallback, default(CancellationToken));
                }

                // Let the caller know proactive messages have been sent
                return new ContentResult()
                {
                    Content = "<html><body><h1>Proactive messages have been sent.</h1></body></html>",
                    ContentType = "text/html",
                    StatusCode = (int)HttpStatusCode.OK,
                };
            }

            private async Task BotCallback(ITurnContext turnContext, CancellationToken cancellationToken)
            {
                await turnContext.SendActivityAsync("This is a proactive message");
            }
        }
    }

Implement

Find the file named DialogBot.cs and insert the following using statement.

    using System.Collections.Concurrent;

Add the ConcurrentDictionary inside the class.

    protected readonly ConcurrentDictionary<string, ConversationReference> _conversationReferences;

Replace the class’s constructor with this one, which passes the conversationReferences in lines 1 and 7.

    public DialogBot(ConversationState conversationState, UserState userState, T dialog, ILogger<DialogBot<T>> logger, ConcurrentDictionary<string, ConversationReference> conversationReferences)
    {
        ConversationState = conversationState;
        UserState = userState;
        Dialog = dialog;
        Logger = logger;
        _conversationReferences = conversationReferences;
    }

Add the AddConversationReference function later in the file to collect the conversation reference.

    private void AddConversationReference(Activity activity)
    {
        var conversationReference = activity.GetConversationReference();

        // Add the reference for a new user, or replace the stored one for a known user.
        _conversationReferences.AddOrUpdate(conversationReference.User.Id, conversationReference, (key, oldValue) => conversationReference);
    }

In the OnMessageActivityAsync() function, add the following line.

    AddConversationReference(turnContext.Activity as Activity);
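Because this line runs on every message, the same user is added many times. The AddOrUpdate call keeps that safe: it stores one entry per user id and overwrites it with the latest reference. The sketch below shows the pattern in isolation. ReferenceStoreDemo is a hypothetical name, and plain strings stand in for ConversationReference so that it runs without the Bot Framework packages.

```csharp
using System.Collections.Concurrent;

// Hypothetical stand-in for the bot's reference store; not part of the original post.
public static class ReferenceStoreDemo
{
    private static readonly ConcurrentDictionary<string, string> References =
        new ConcurrentDictionary<string, string>();

    // Same AddOrUpdate pattern as AddConversationReference: insert on the first
    // message, overwrite on every later message from the same user.
    public static void Remember(string userId, string conversationId)
    {
        References.AddOrUpdate(userId, conversationId, (key, existing) => conversationId);
    }

    // Number of distinct users seen so far.
    public static int Count
    {
        get { return References.Count; }
    }

    // Returns the latest value stored for the user, or null if the user is unknown.
    public static string Lookup(string userId)
    {
        return References.TryGetValue(userId, out var conversationId) ? conversationId : null;
    }
}
```

Keep in mind that a store like this lives in memory, so the references are lost whenever the bot restarts and each user has to message the bot again before being notified.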

Next, open the DialogAndWelcomeBot.cs class and insert this using statement.

    using System.Collections.Concurrent;

Change the constructor to the following one to account for the conversation reference in lines 1 and 2.

    public DialogAndWelcomeBot(ConversationState conversationState, UserState userState, T dialog, ILogger<DialogBot<T>> logger, ConcurrentDictionary<string, ConversationReference> conversationReferences)
        : base(conversationState, userState, dialog, logger, conversationReferences)
    {
    }

Lastly, open the Startup.cs file and add these two using statements.

    using Microsoft.Bot.Schema;
    using System.Collections.Concurrent;

Include the ConcurrentDictionary service by pasting the following line inside the ConfigureServices method.

    services.AddSingleton<ConcurrentDictionary<string, ConversationReference>>();

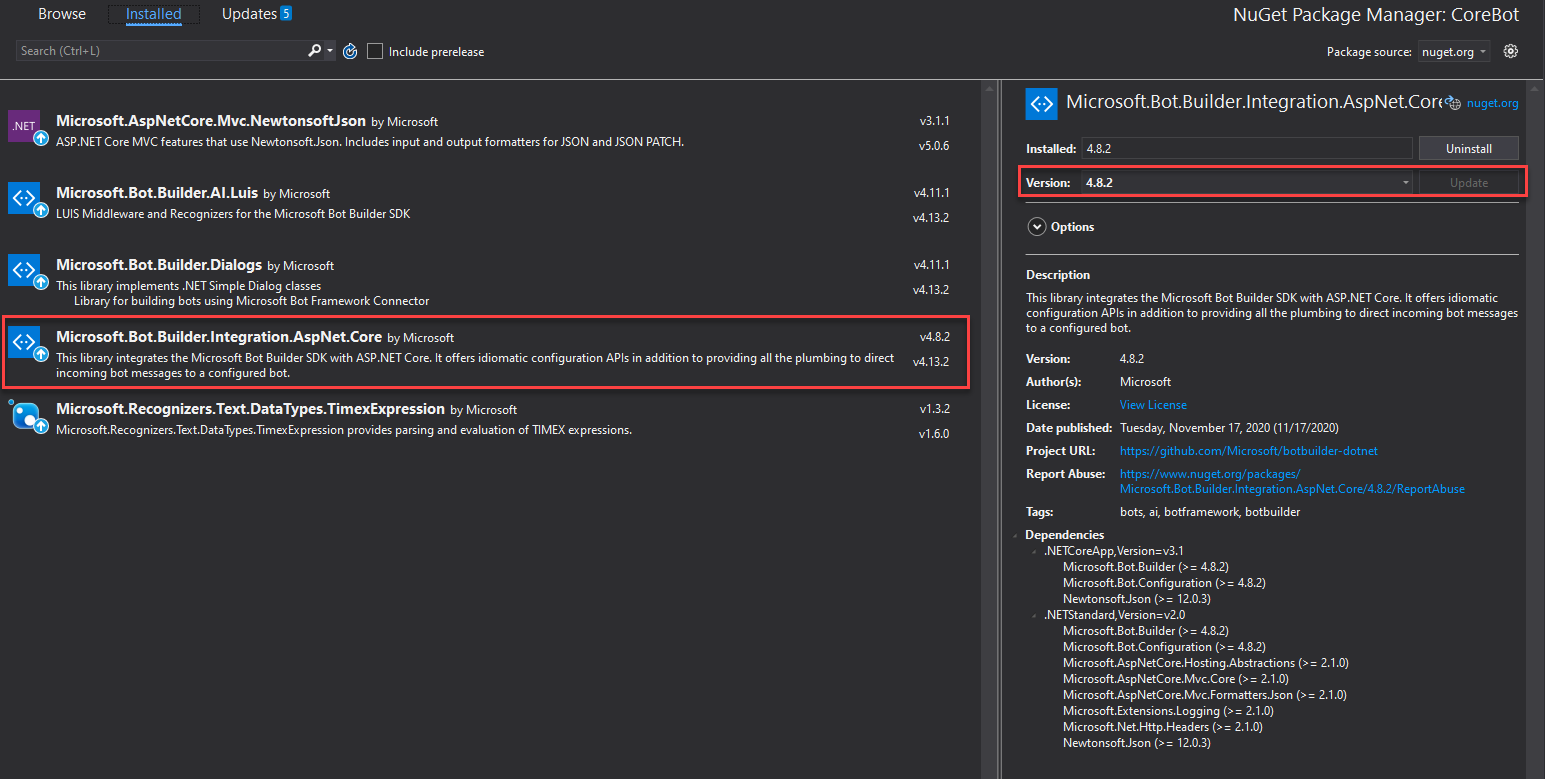

Note that functionality may vary depending on the NuGet packages you use, and more specifically on the Microsoft.Bot.Builder.Integration.AspNet.Core package. I am using version 4.8.2. If you are having difficulties getting your proactive messages to appear, consider changing the version to the one used in this post.

You are now ready to go. Publish your bot in Azure and test it using Microsoft Teams.

Test

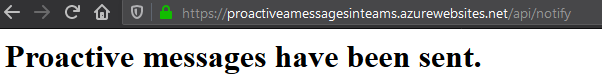

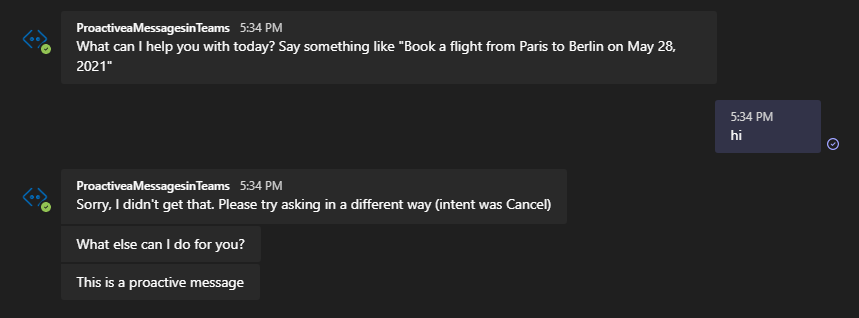

Once you have published your bot, start talking to it in Teams so that it captures the new conversation reference. After that, call the notify endpoint to receive your proactive messages. Your endpoint will look like this: BOT_NAME.azurewebsites.net/api/notify, with BOT_NAME being the name of your web app bot.
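If you prefer to call the notify endpoint from code rather than a browser, the request can be sketched as follows. The https scheme and the "my-bot" name are assumptions; the host and path follow the pattern described above.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

public static class NotifyClient
{
    // Builds the notify URL for a bot hosted as an Azure web app.
    // The https scheme is an assumption of this sketch.
    public static Uri BuildNotifyUri(string botName)
    {
        if (string.IsNullOrWhiteSpace(botName))
            throw new ArgumentException("Bot name is required.", nameof(botName));
        return new Uri($"https://{botName}.azurewebsites.net/api/notify");
    }

    // Sends a GET request to the notify endpoint and returns the response body.
    public static async Task<string> TriggerAsync(HttpClient http, Uri notifyUri)
    {
        using HttpResponseMessage response = await http.GetAsync(notifyUri);
        response.EnsureSuccessStatusCode();
        return await response.Content.ReadAsStringAsync();
    }

    public static async Task Main()
    {
        // "my-bot" is a placeholder; replace it with the name of your web app bot.
        Uri uri = BuildNotifyUri("my-bot");
        using var http = new HttpClient();
        Console.WriteLine(await TriggerAsync(http, uri));
    }
}
```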

Here is the bot working in Microsoft Teams!

This way you can have proactive messages appear in your Teams chat! This approach may also work in other channels that the previous implementation did not support.

Get started with Speech Studio

May 12, 2021

Speech Studio serves as a customization portal for the Azure Speech resource. It provides all the tools you need to transcribe spoken audio to text, perform translations and convert text to lifelike speech.

Create

The aim of this post is to get you familiar with the interface and capabilities of Speech Studio.

Let’s dive in!

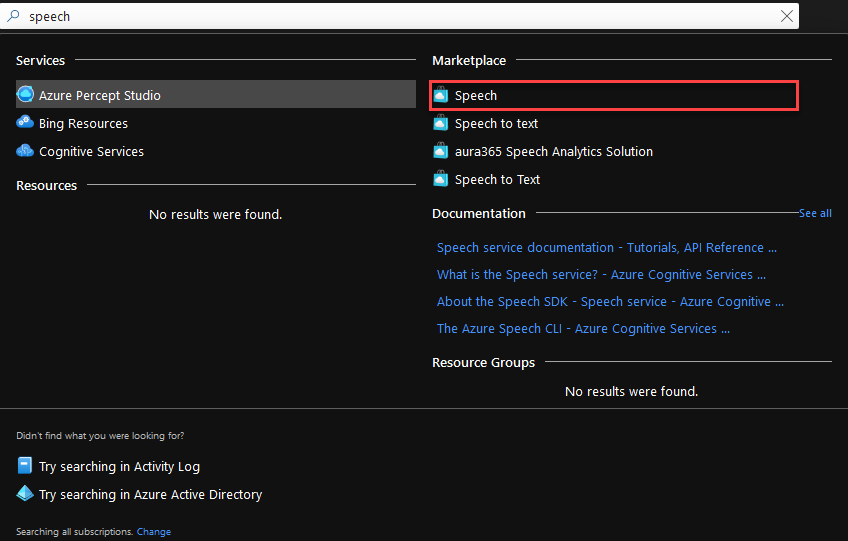

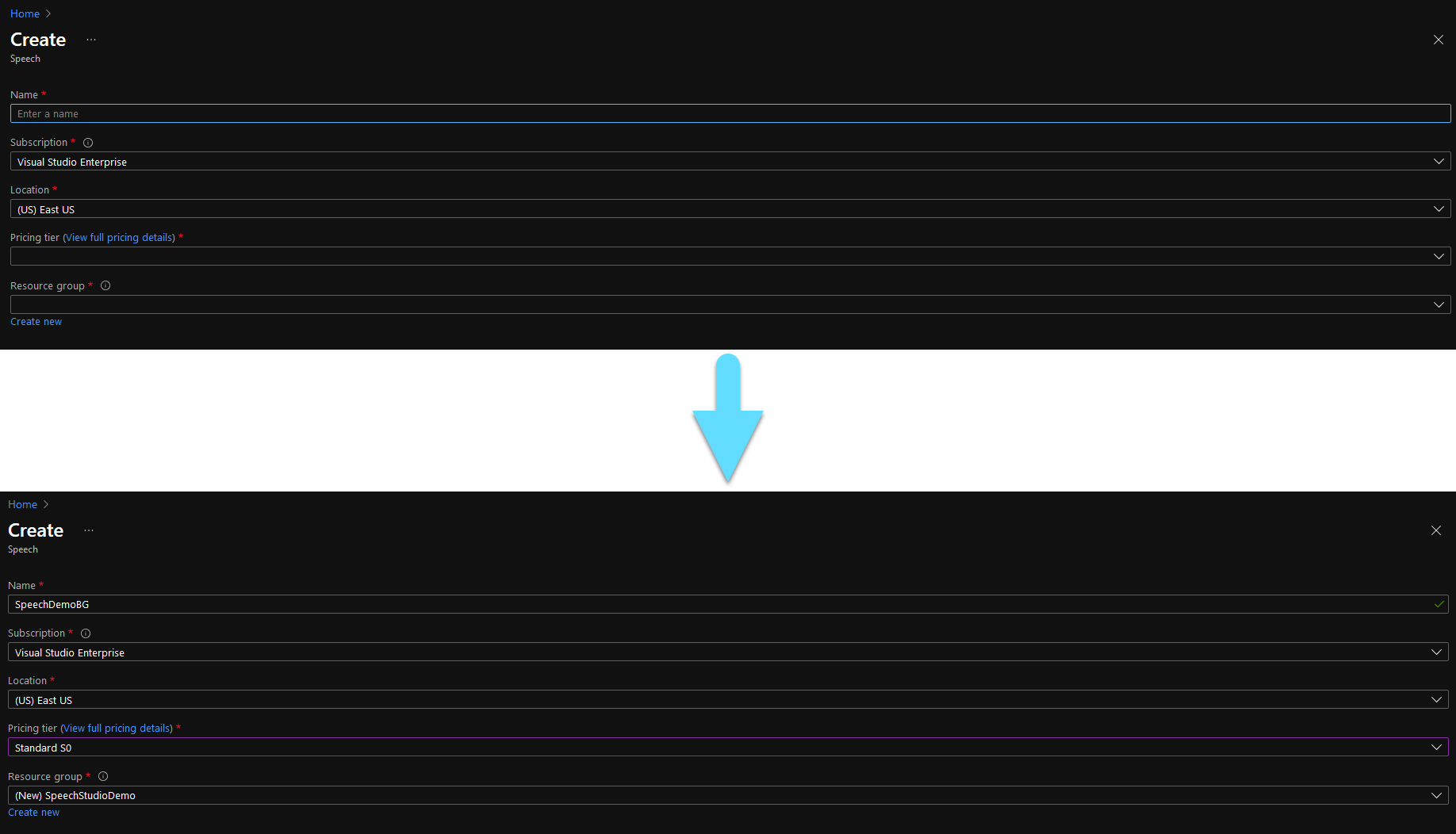

Go to the Azure Portal and find the Speech resource from the marketplace.

Fill the form. The fields with the ‘*’ are mandatory.

- Name is the name of your Speech resource.

- Subscription should already be filled in with your default subscription.

- Location is pre-filled with the default location, but you can change it if you like.

- Choose the Pricing tier that best meets your needs.

- For Resource Group, you can use an existing one, or create a new one.

Then click Create.

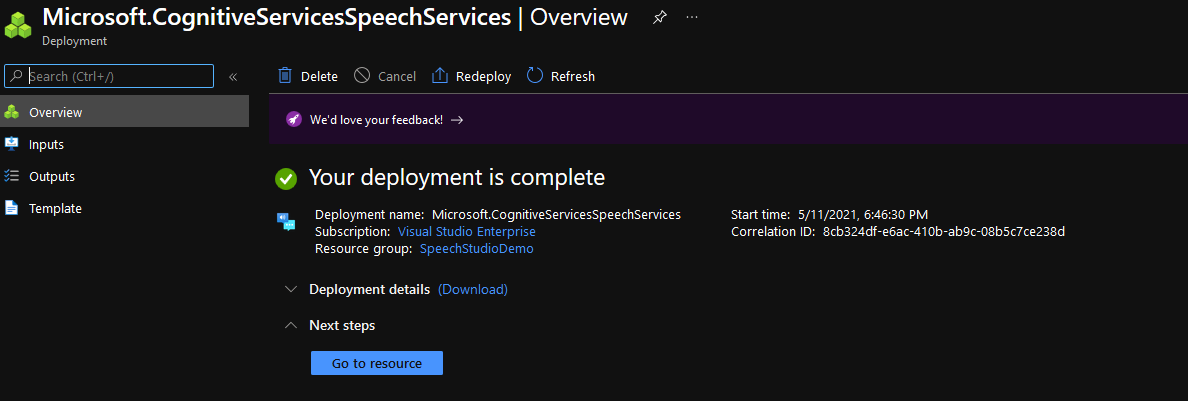

Wait a few minutes for your resource to get deployed.

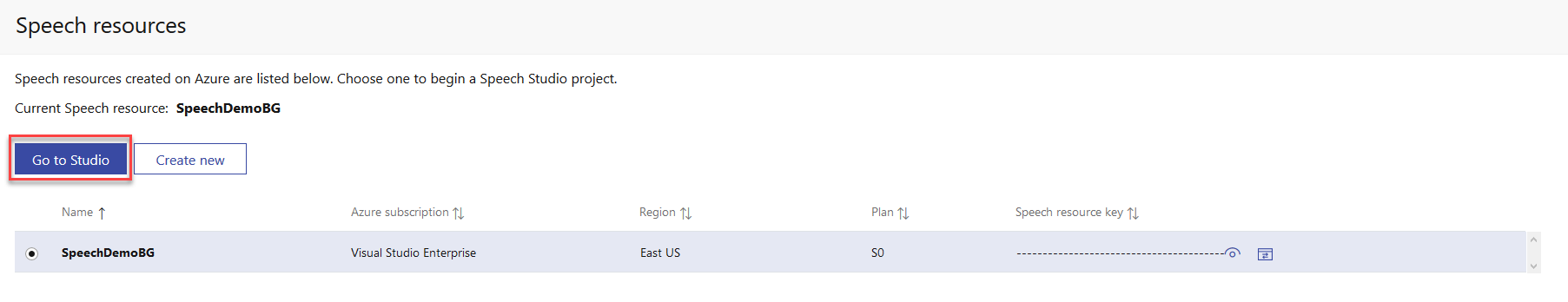

Now visit the Speech Studio portal, select the Speech resource you just created and click Go to Studio.

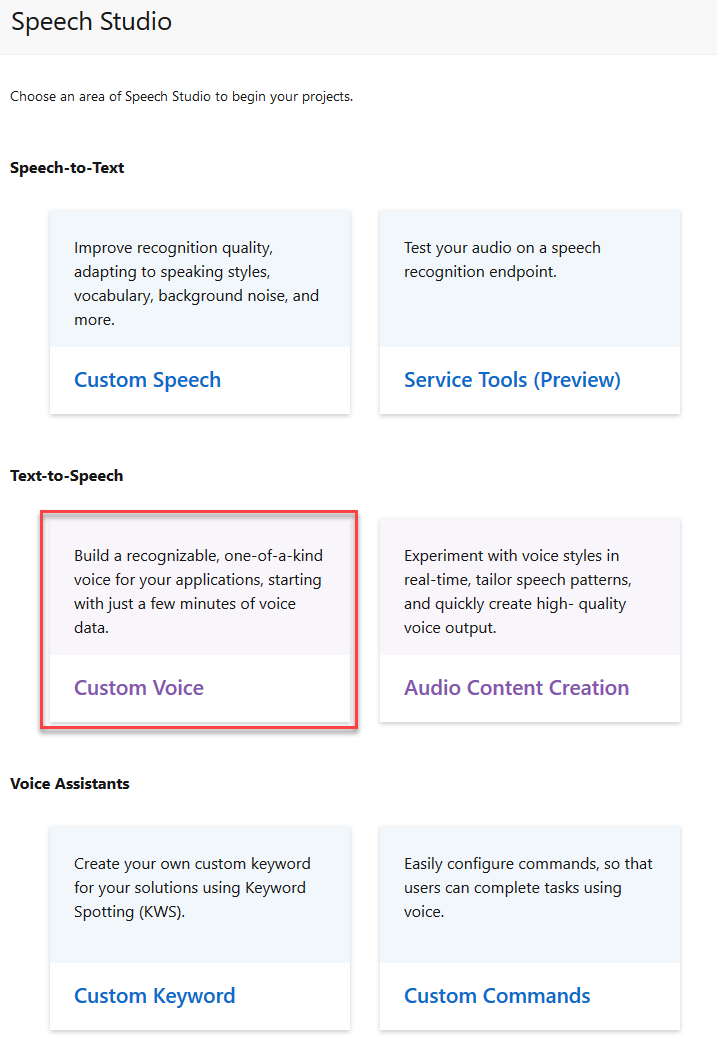

Here you can see all the capabilities of the Speech Studio.

You can create a model that transcribes audio to text, configure custom commands for your voice assistant, and even create your own text-to-speech models that read aloud the text given to them.

We will go with Custom Voice for this demo.

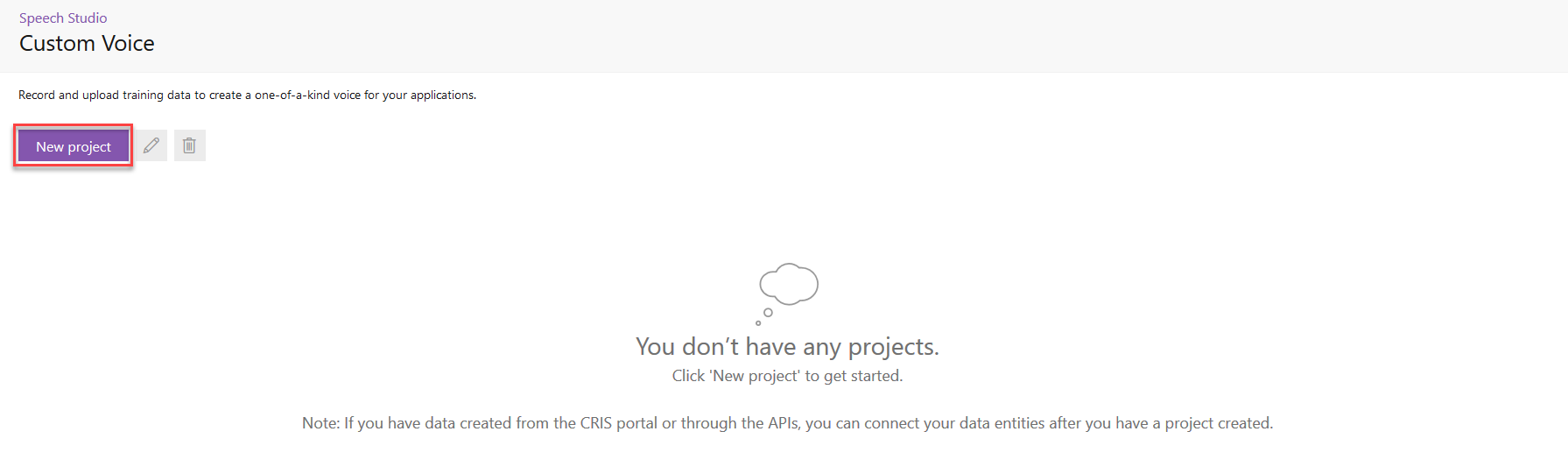

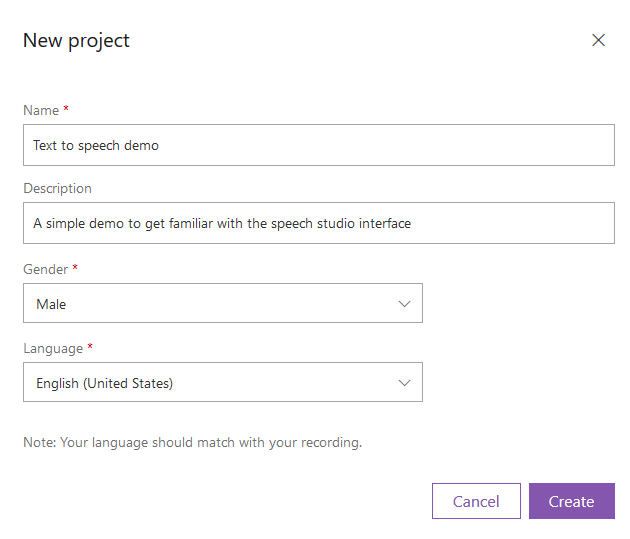

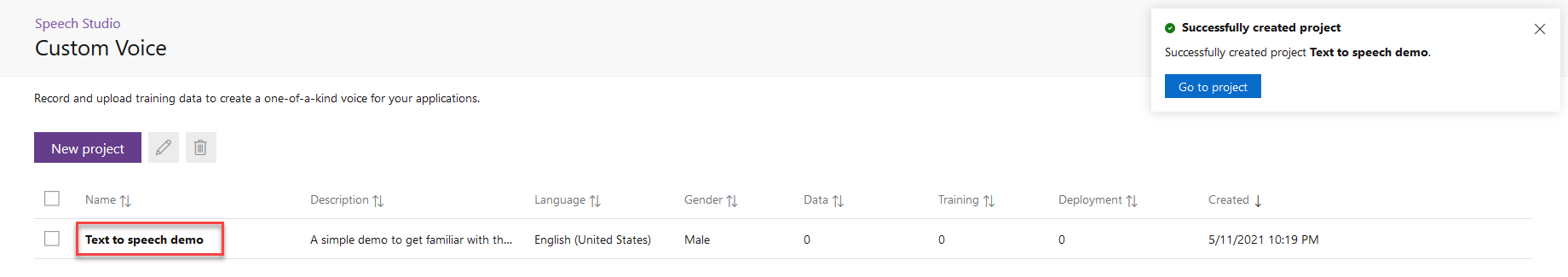

Click on New project to create your project.

Give your project a name and a description. The gender field sets the gender of the voice your model will represent, and the language field sets the language your model will support.

Click on your newly created project and upload data.

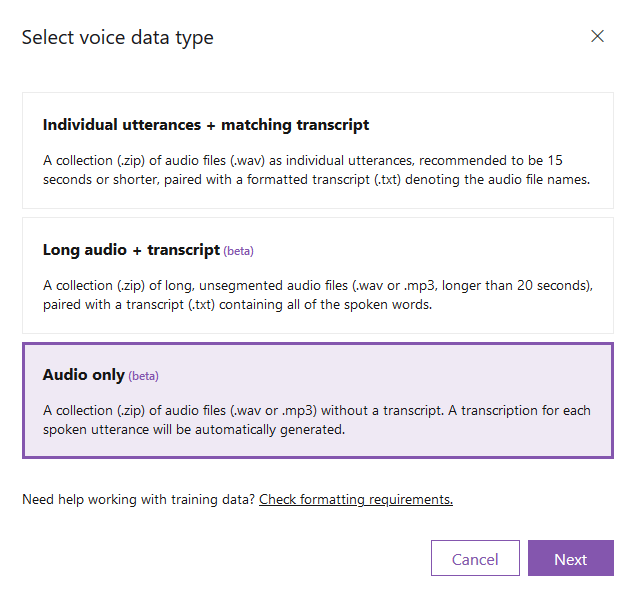

You can find sample datasets on Kaggle and Zenodo. However, it is preferable to use a relatively large dataset with transcribed speech from a single speaker. Once you find your dataset, choose the option that best fits your data and click Next.

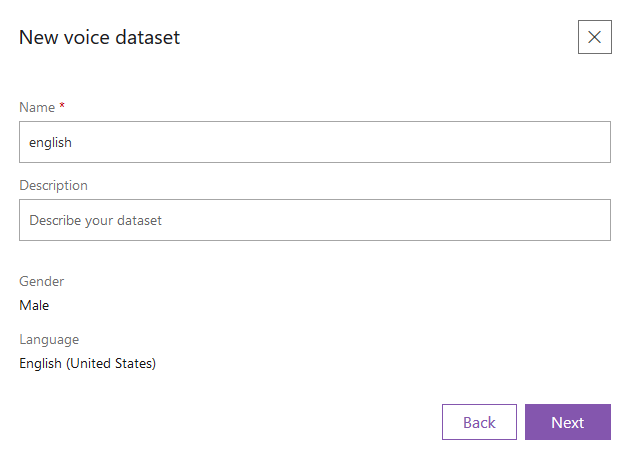

Give a name to your new dataset and click Next.

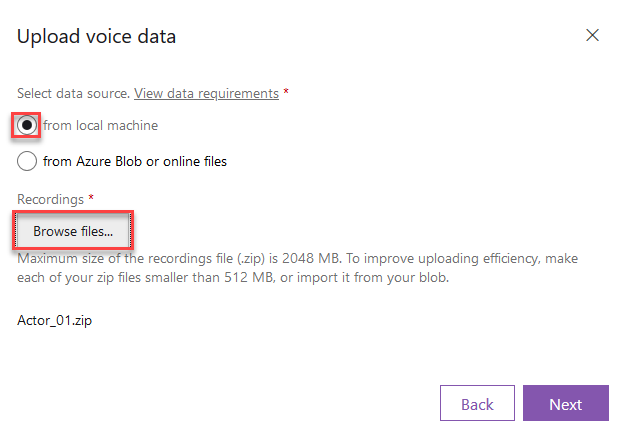

Here you have the option to import a dataset from Azure Blob Storage. If you have your dataset locally, just pick the from local machine option and then click Browse files… to choose which files to upload. Then click Next.

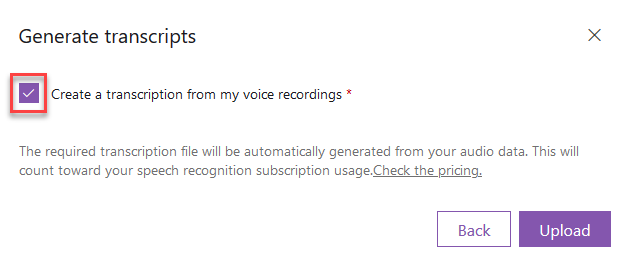

Check the Create a transcription from my voice recordings checkbox and click upload to start uploading your dataset.

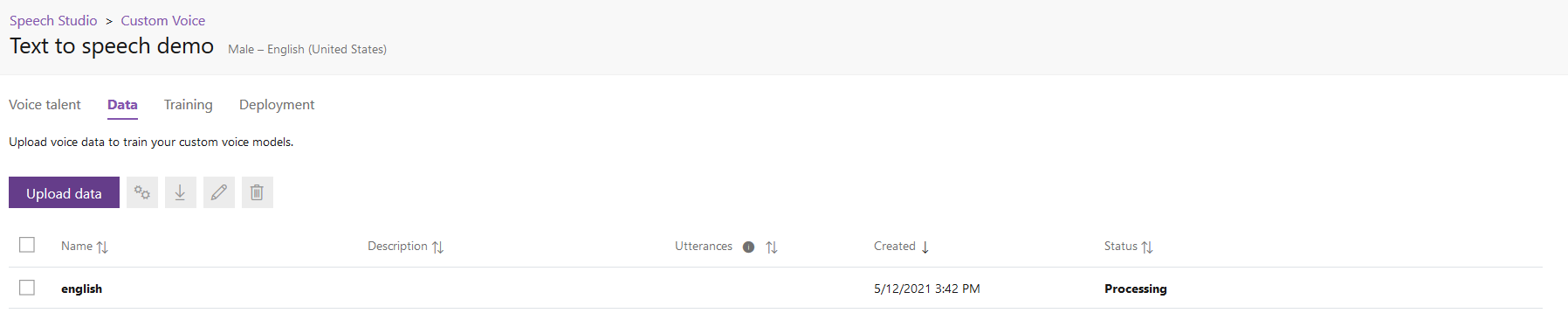

Once your dataset is uploaded, its Status will show as Processing, and you might need to wait a few minutes.

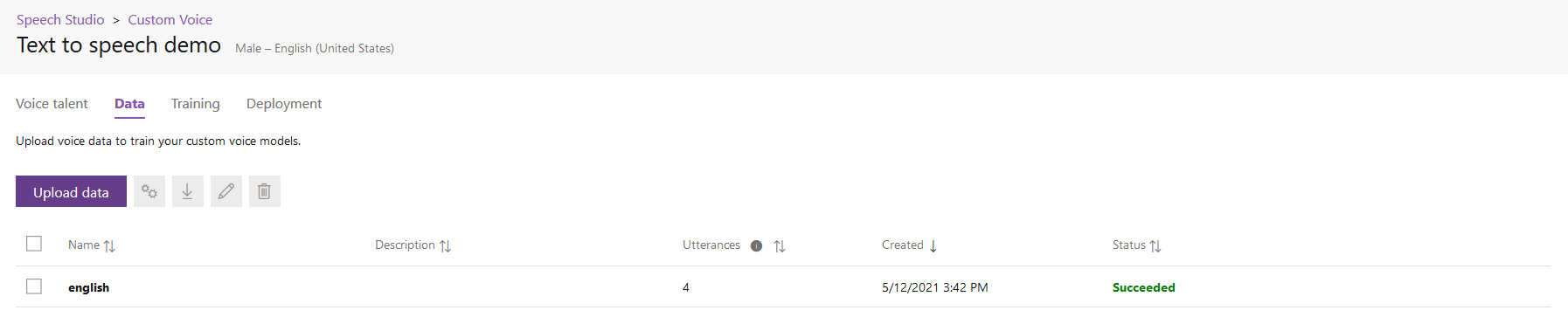

If everything worked out correctly you should see a Succeeded Status for your dataset. After that you are good to go.

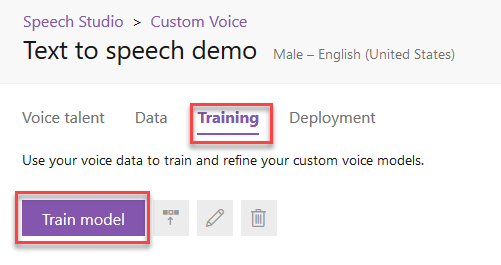

Go to the Training tab and click Train model.

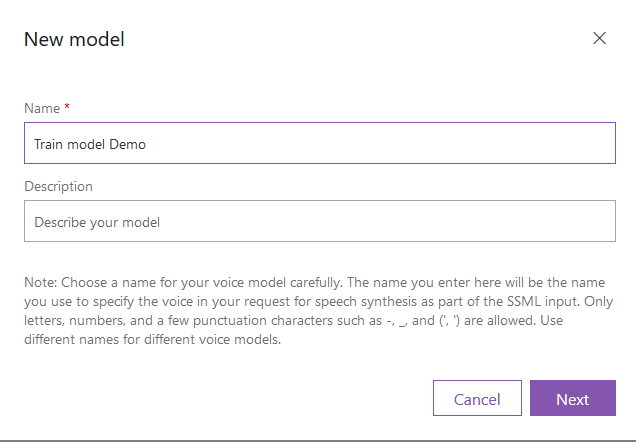

Give a name to your model and click Next.

Select your dataset and click Next again.

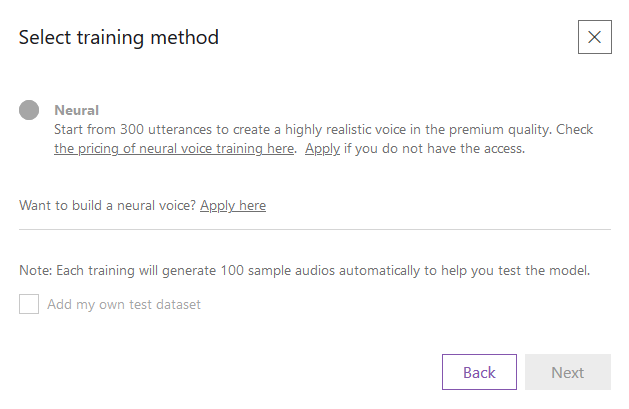

You need at least 300 utterances to train the model. Once you have enough, choose the Neural method and start training. You might also want to take a look at the pricing of neural voice training.

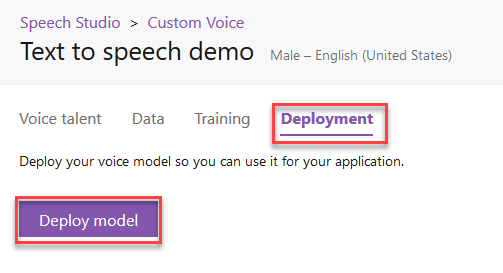

Once you have trained your model, you are ready to publish it. Go to the Deployment tab and click Deploy model.

Once your model is deployed, you will have an endpoint for it that you can use for text to speech, just like a normal Speech resource. You can see how to import it to your project using this post.

Get familiar with Azure Machine Learning

May 01, 2021

Azure Machine Learning is an enterprise-grade machine learning service to build and deploy models faster. Learn more about it here.

Create

This post aims to show you how to run your models the easy way using Azure Machine Learning.

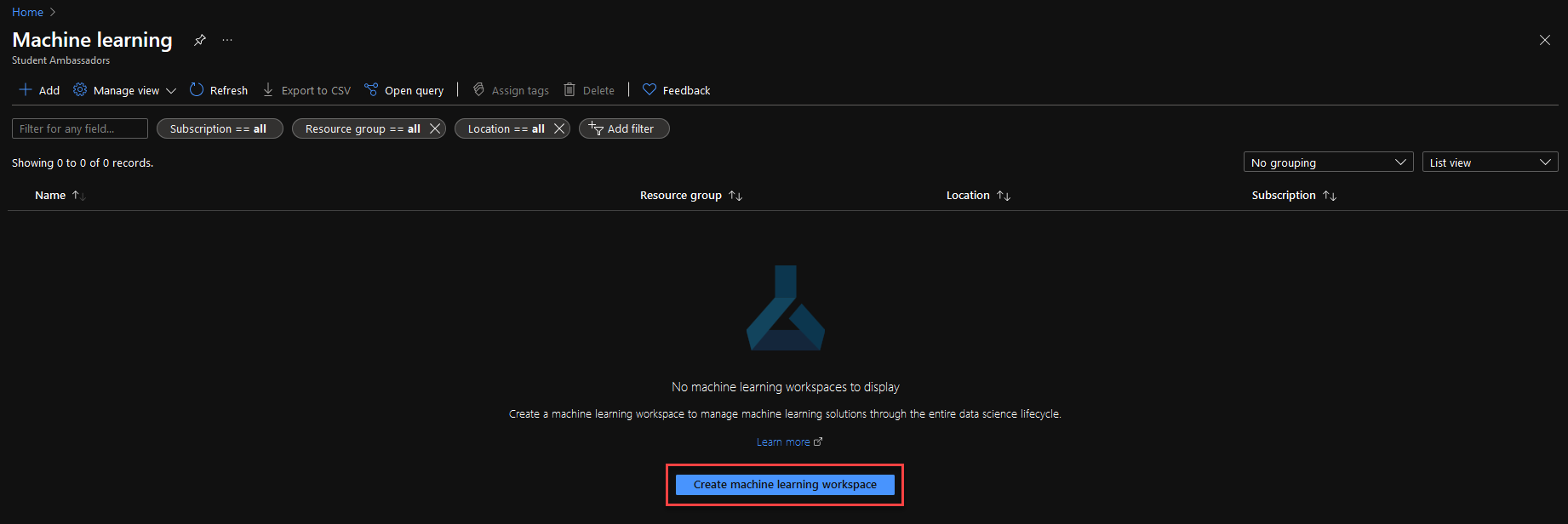

To start, go to the Azure portal, find the Machine Learning resource and click on the Create machine learning workspace button.

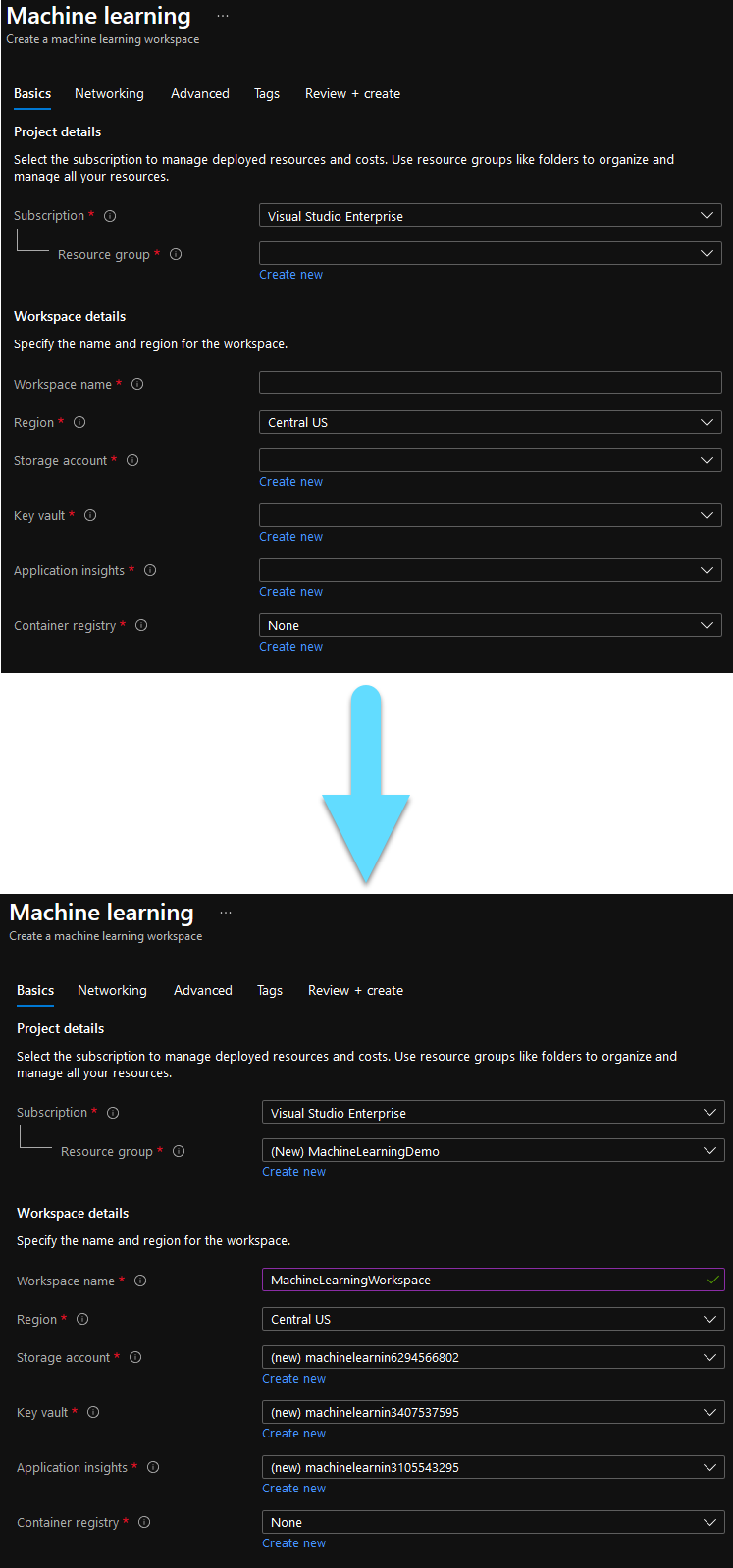

Fill the form. The fields with the ‘*’ are mandatory.

- Subscription should already be filled in with your default subscription.

- For Resource Group, you can use an existing one, or create a new one.

- Workspace Name is the name of your new Workspace.

The rest of the fields should auto-populate.

Then click Create.

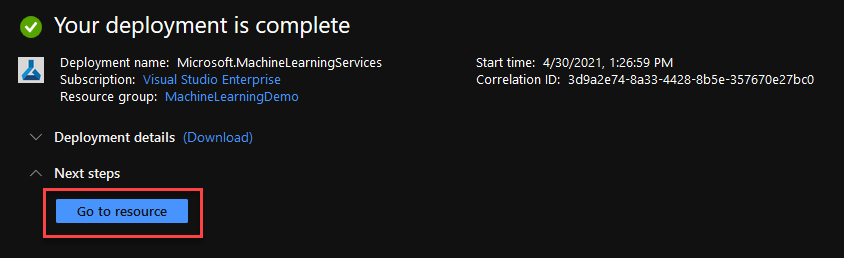

Wait until the deployment is complete and then click Go to your resource.

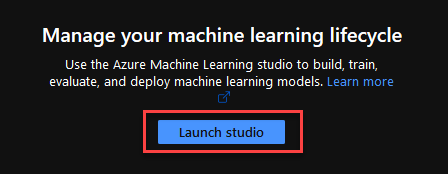

Now click the Launch studio button to navigate to the Azure Machine Learning Studio.

Import or choose Dataset

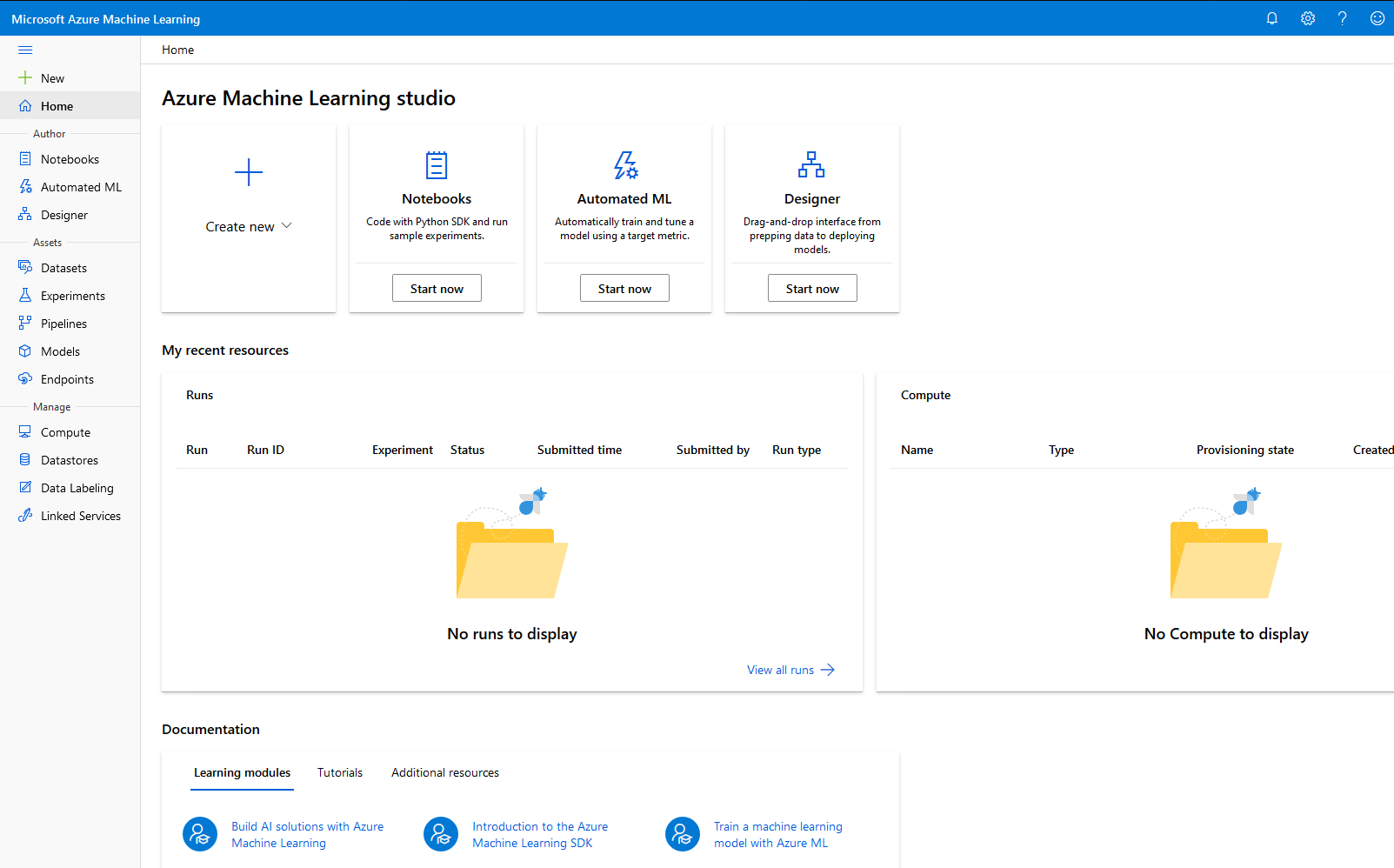

Here you can see the homepage of Azure Machine Learning Studio.

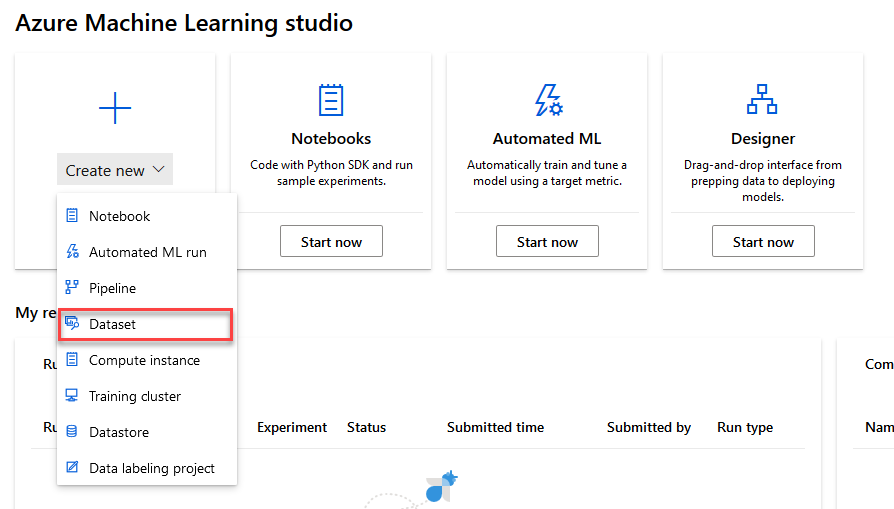

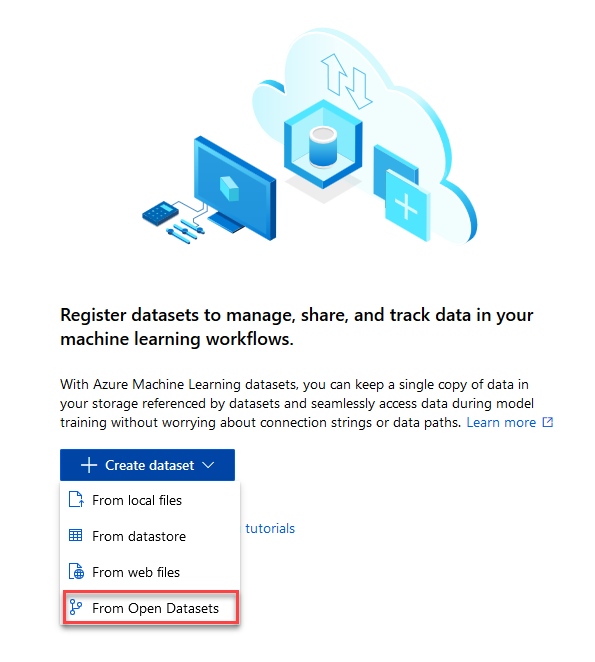

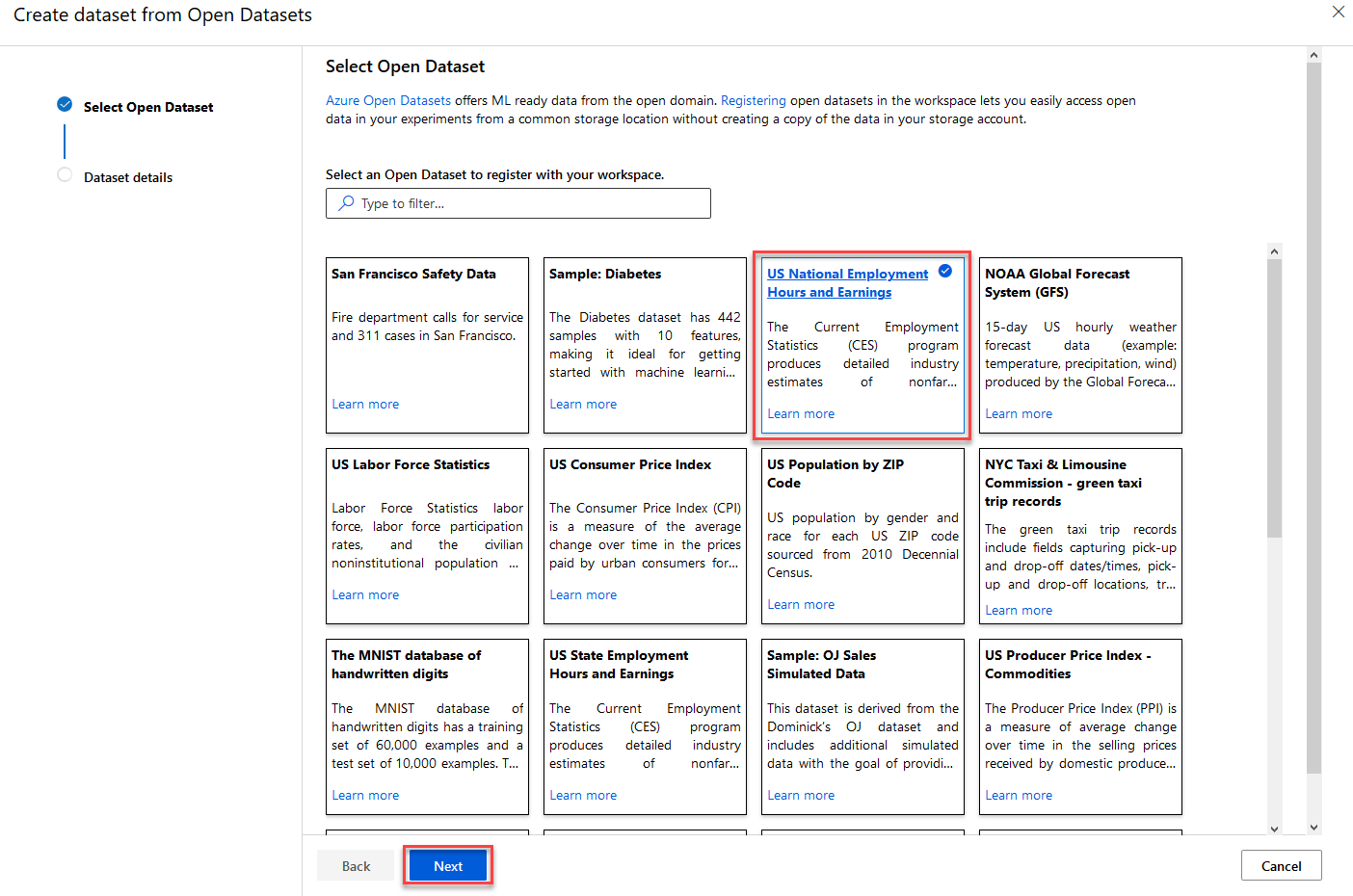

Go to Create new -> Dataset to create or import your dataset.

You can choose one from the open datasets to get familiar with the service, as we will do in this example.

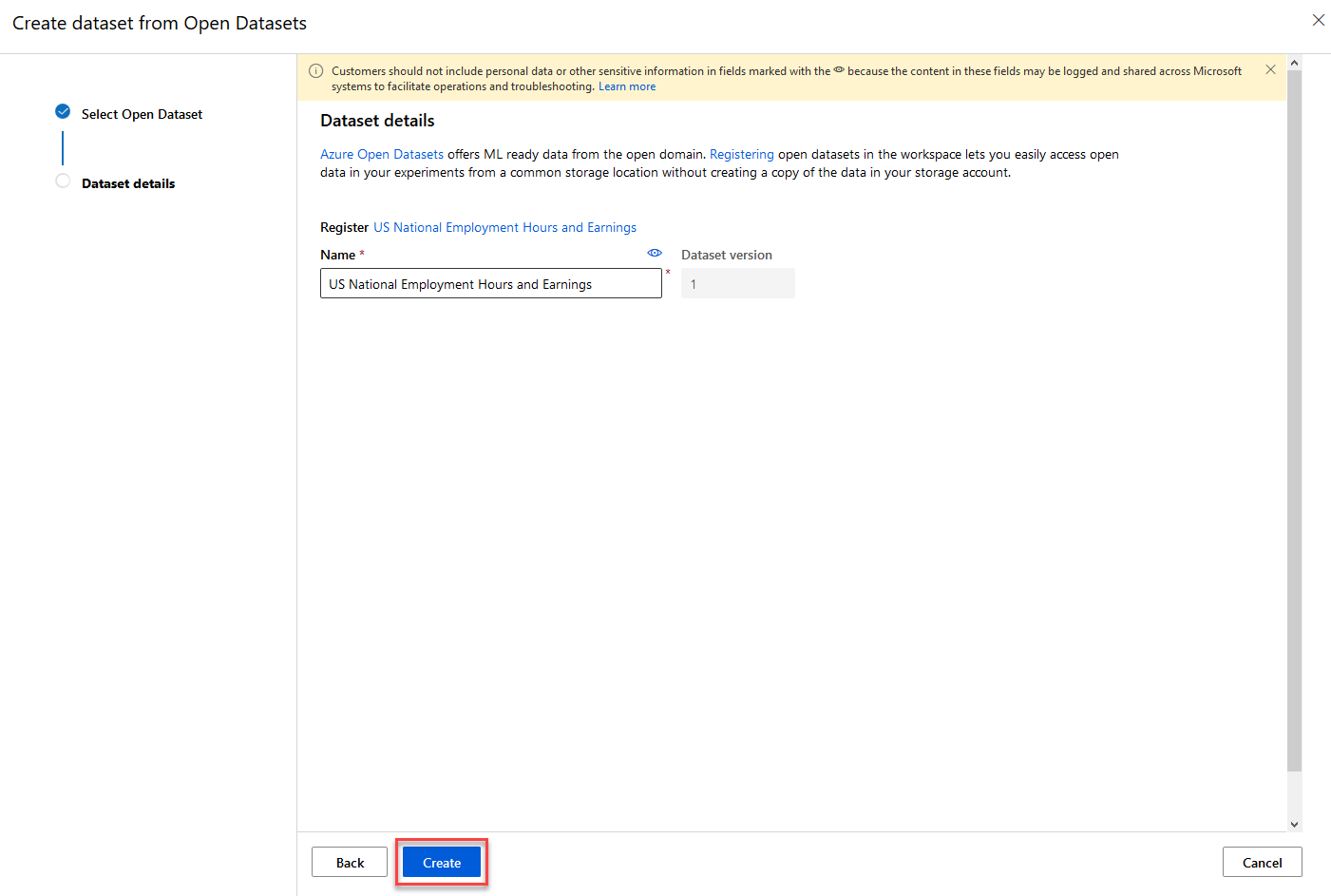

We are going to choose US National Employment Hours and Earnings as our dataset and then click Next.

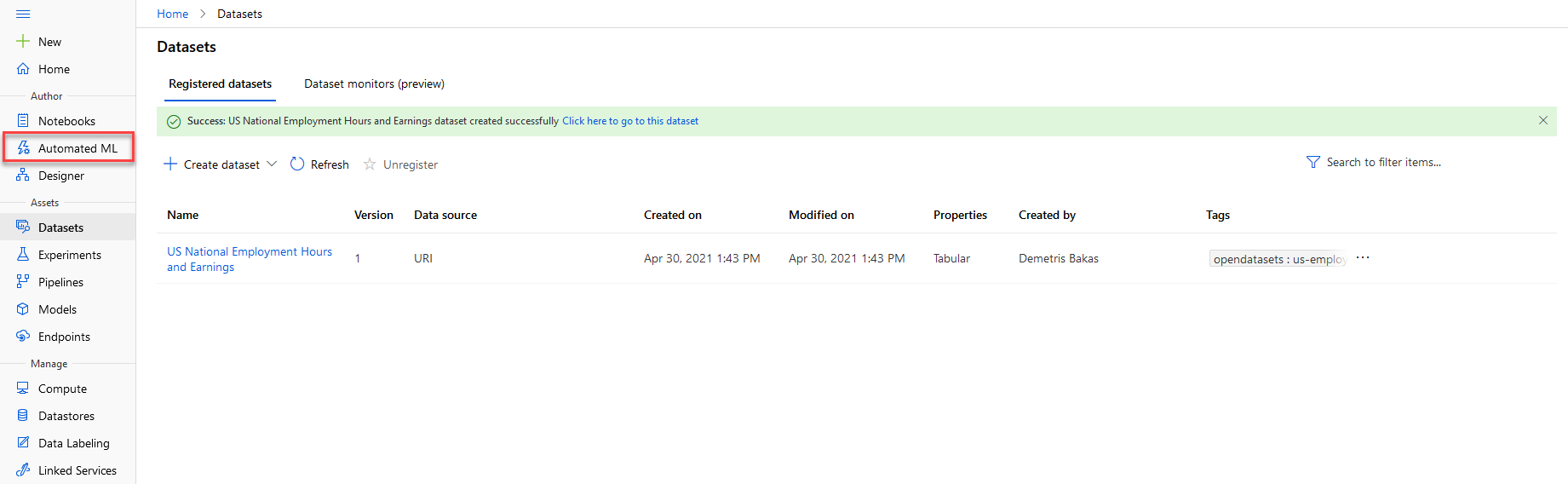

Here you can change the name of your dataset if you like, then click Create.

Implement Model

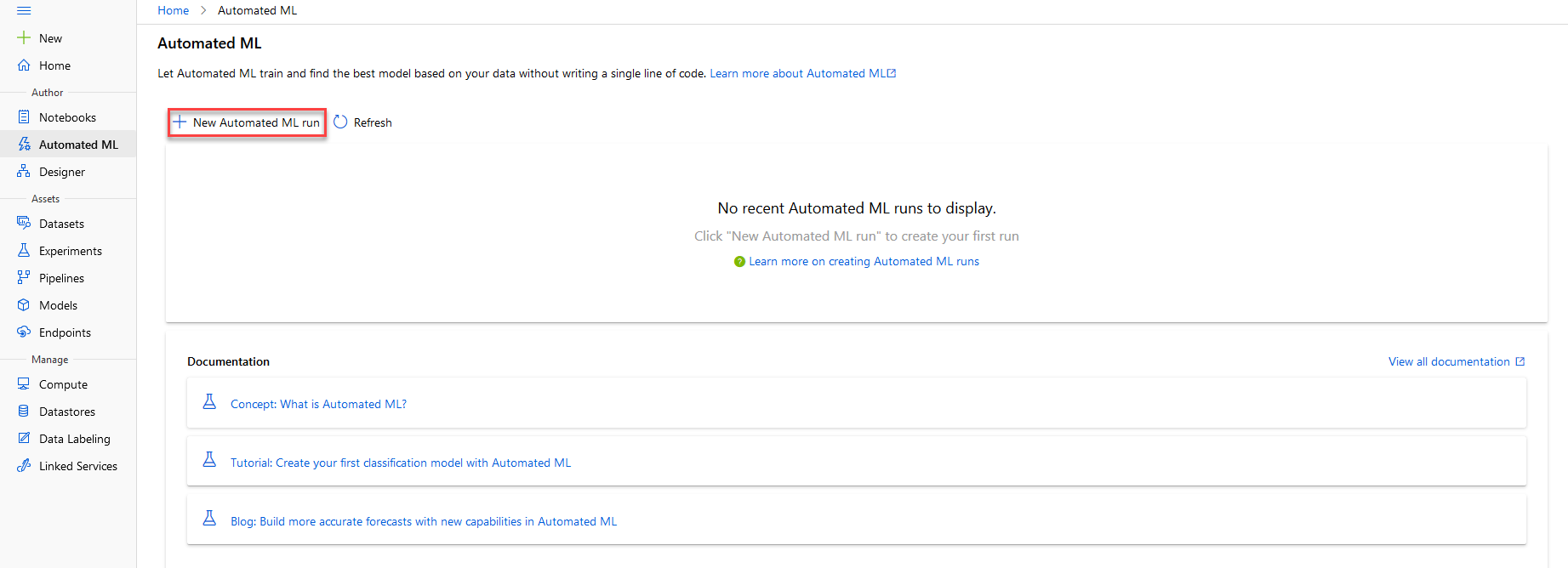

As you can see your new dataset is created. Now navigate to the Automated ML tab on the left to apply a model.

Click on the New Automated ML run button to configure your run.

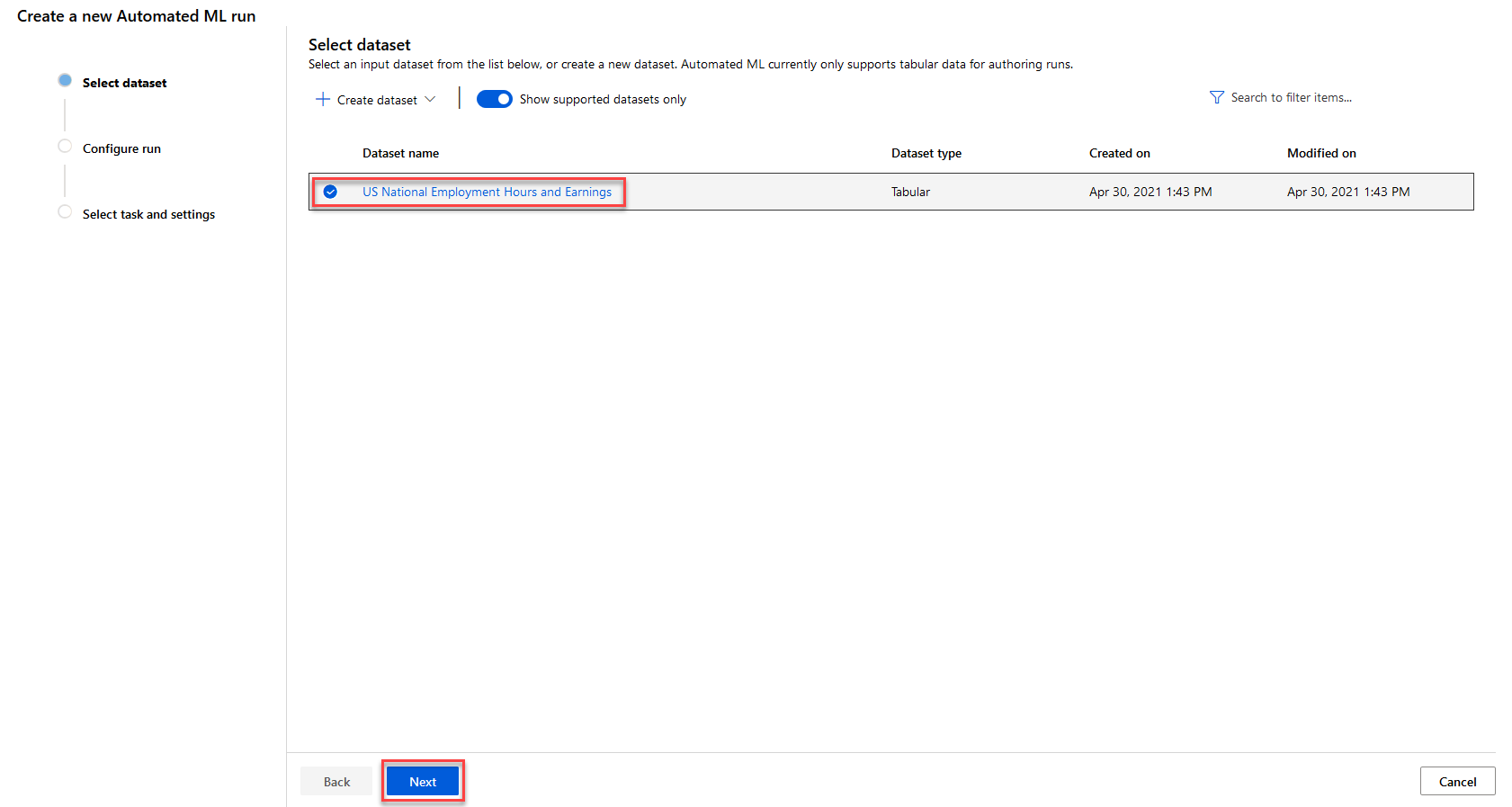

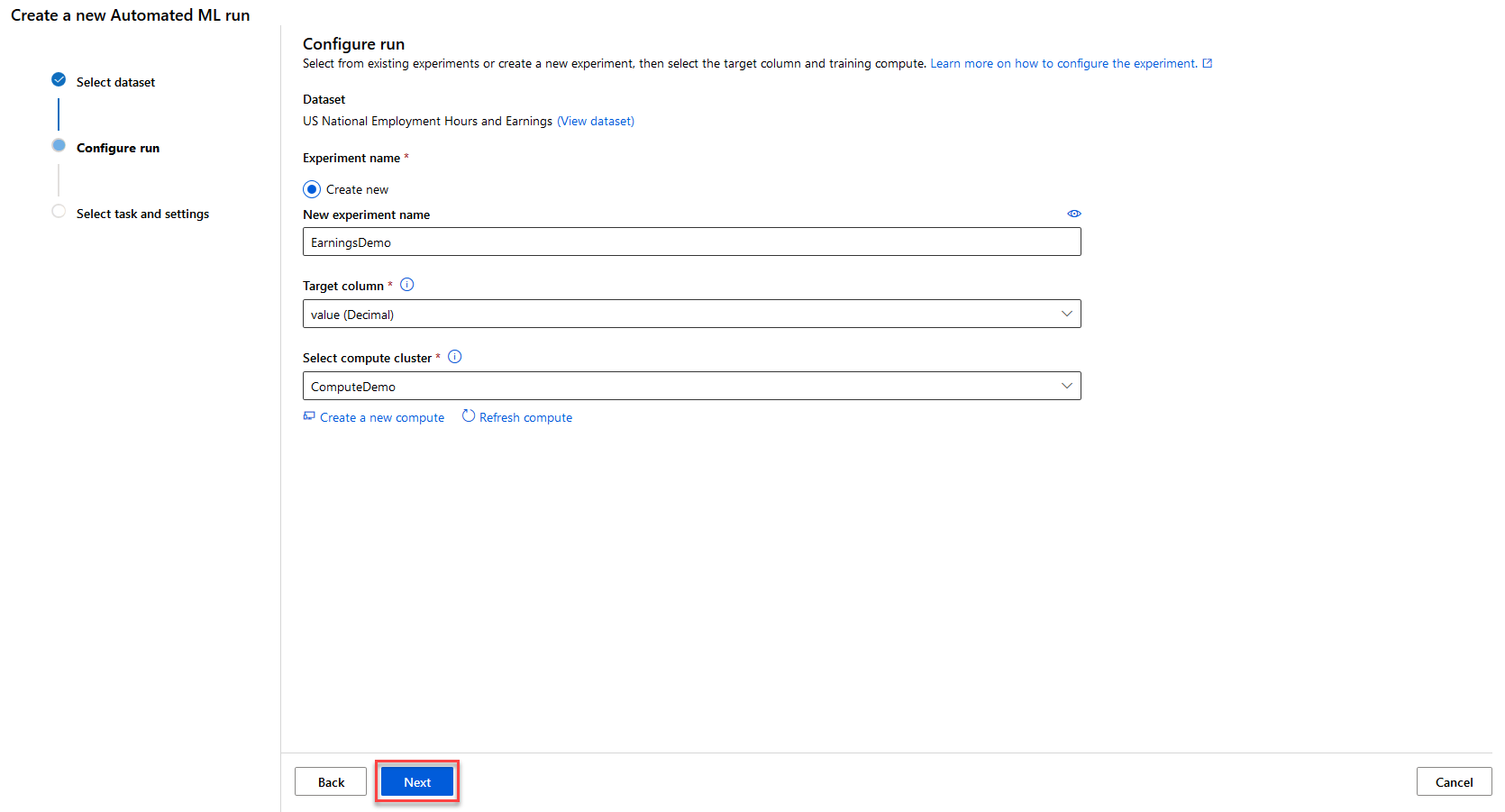

Select the dataset you would like to use and click Next.

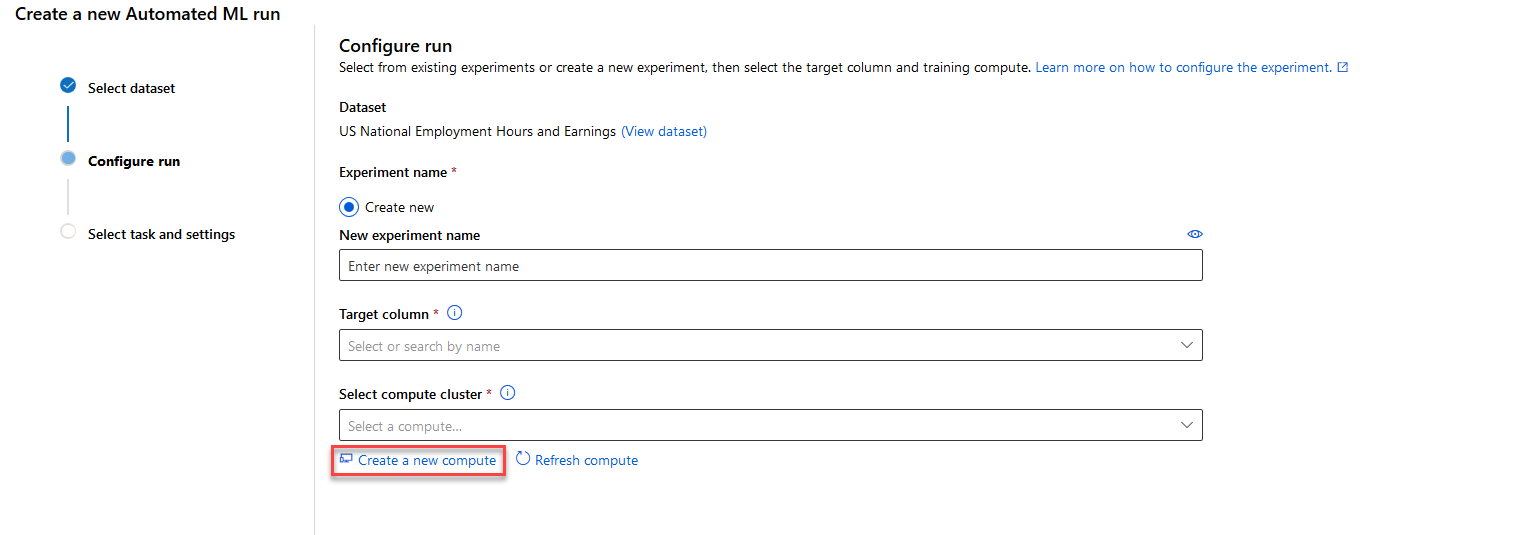

Fill the form. The fields with the ‘*’ are mandatory.

- New experiment name is the name of your experiment.

- Target column is the column you would like your experiment to focus on.

- Select compute cluster is the virtual machine that will run your experiment.

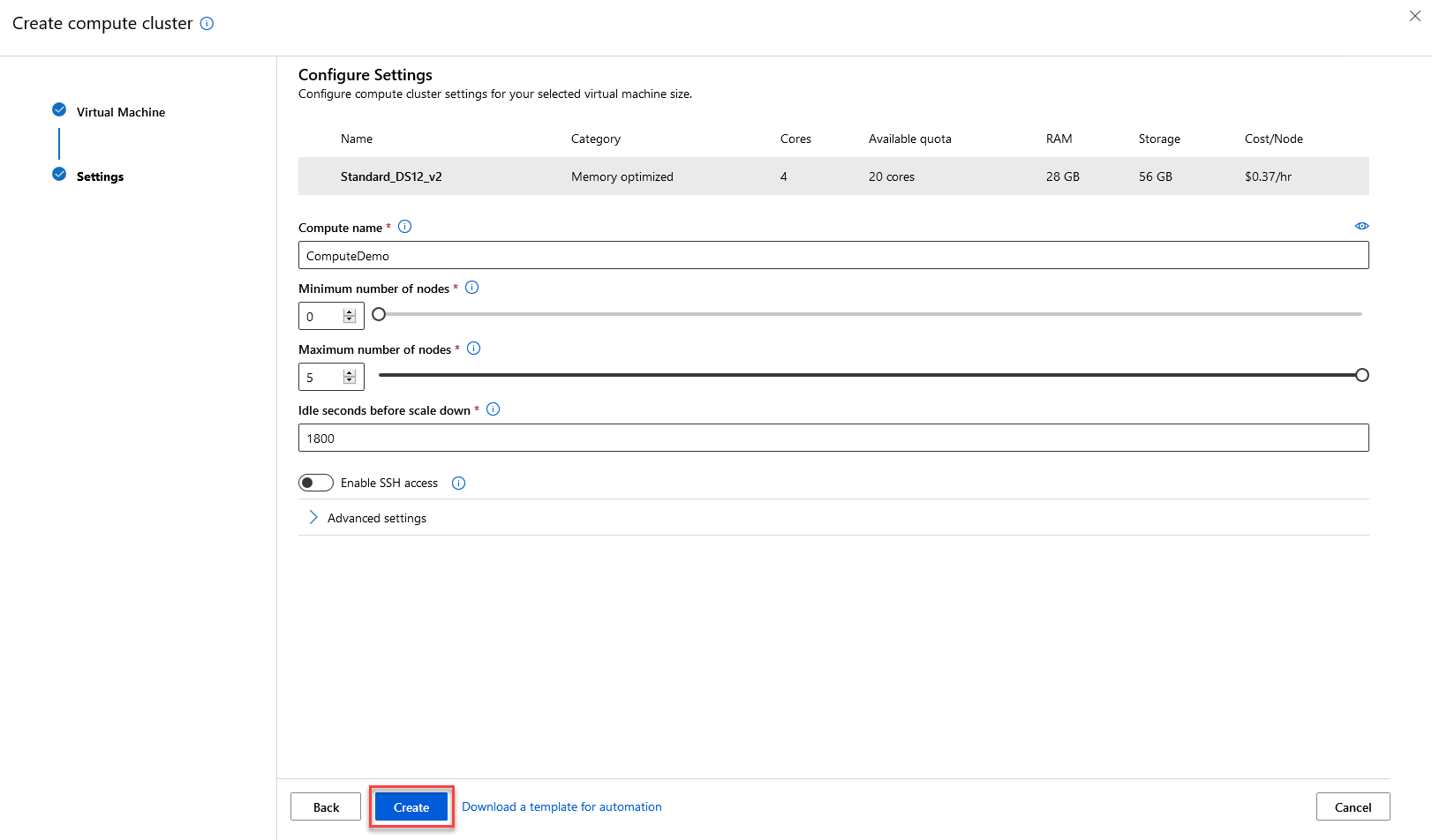

Click on Create a new compute to set up your virtual machine.

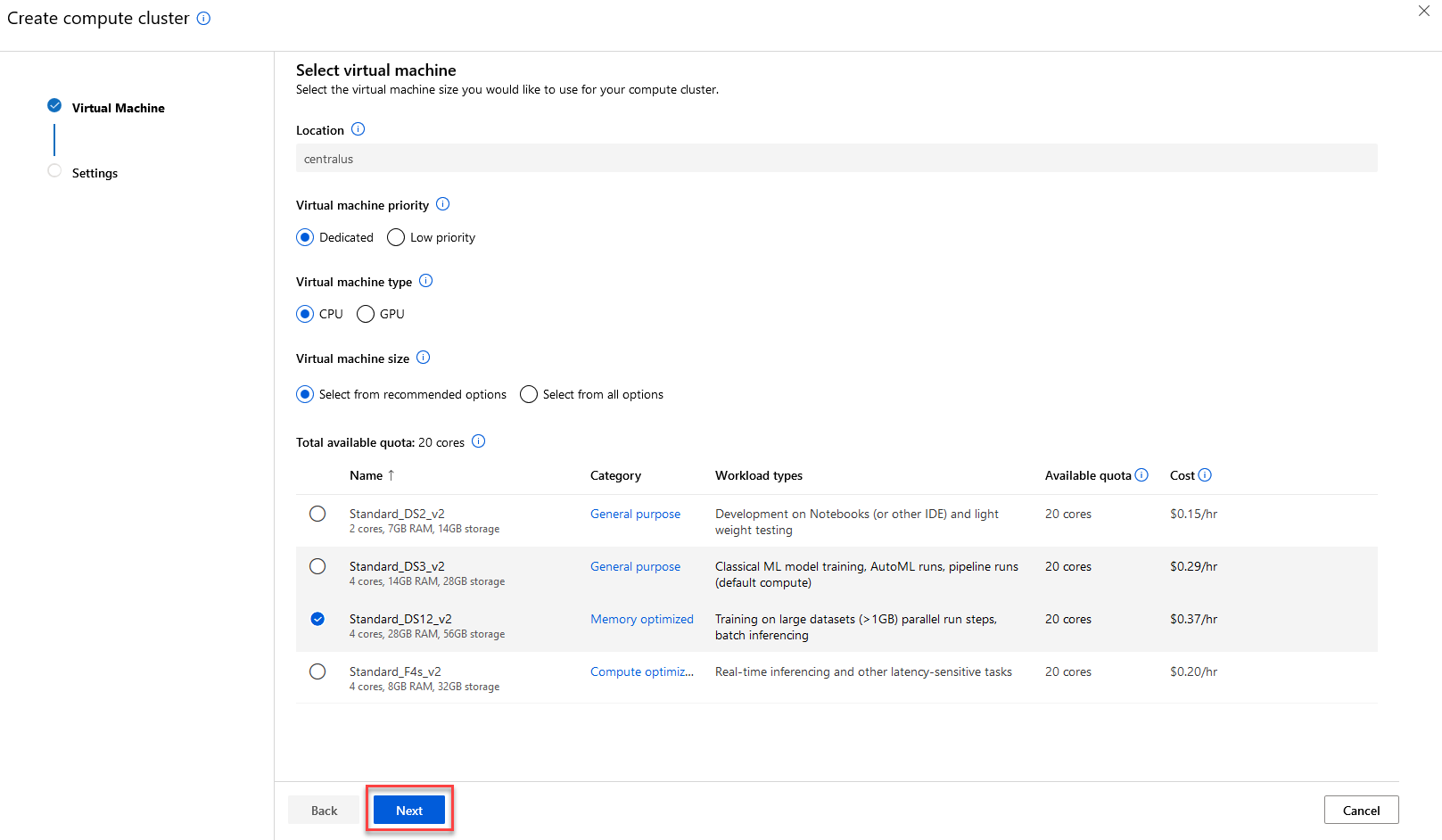

Here you can set up the specifications of your virtual machine. The more powerful your virtual machine is, the faster your experiment will run.

After completing it, click Next.

Give a name to your virtual machine and set up some more options. It is advisable to use as many nodes as possible, so that your experiment runs faster.

Then click Create to create your compute module.

When everything is completed, click Next.

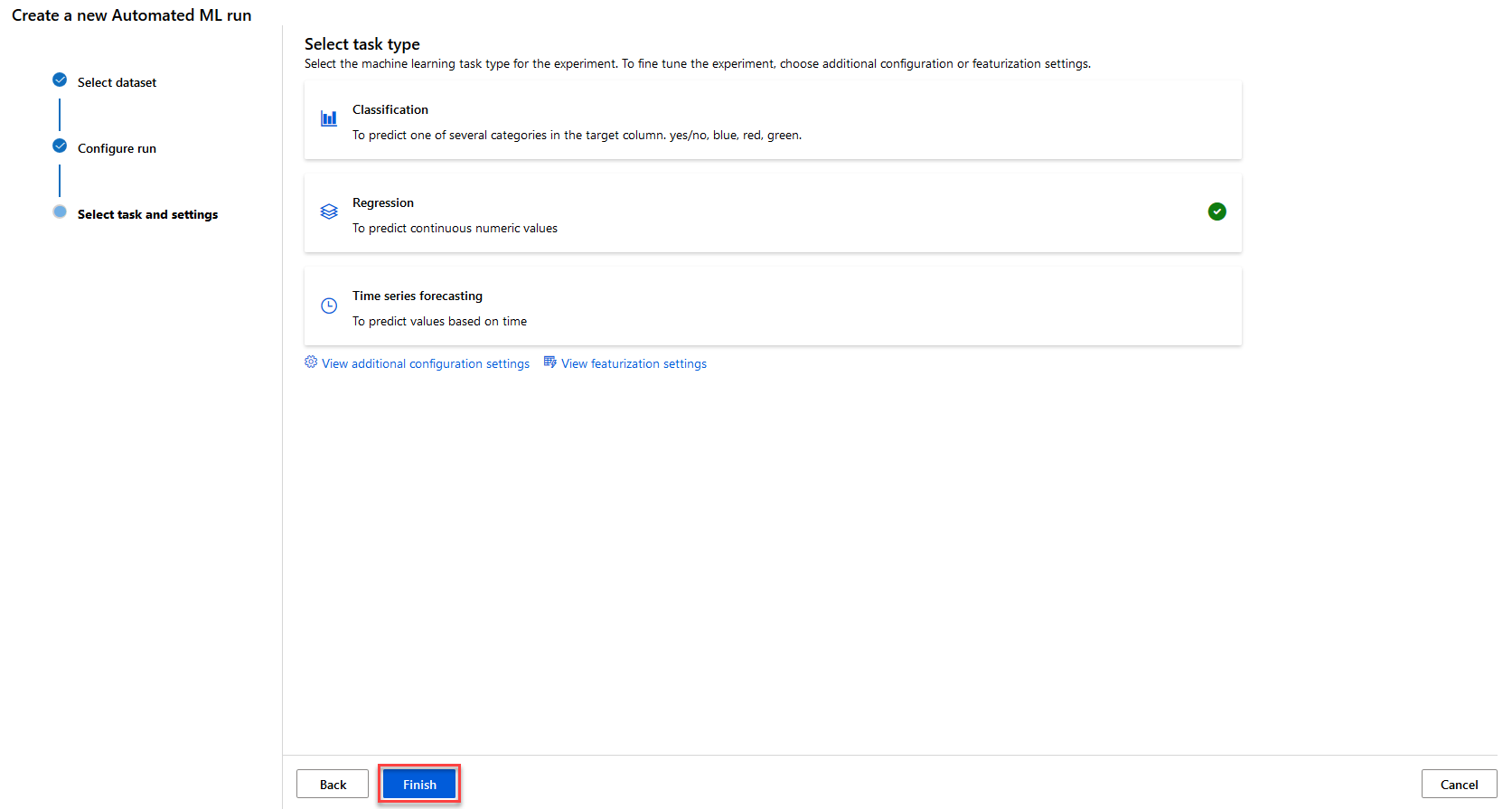

Now choose your model. We are going to choose a standard Regression model for this example, but you can choose whatever you like.

After choosing, click Finish.

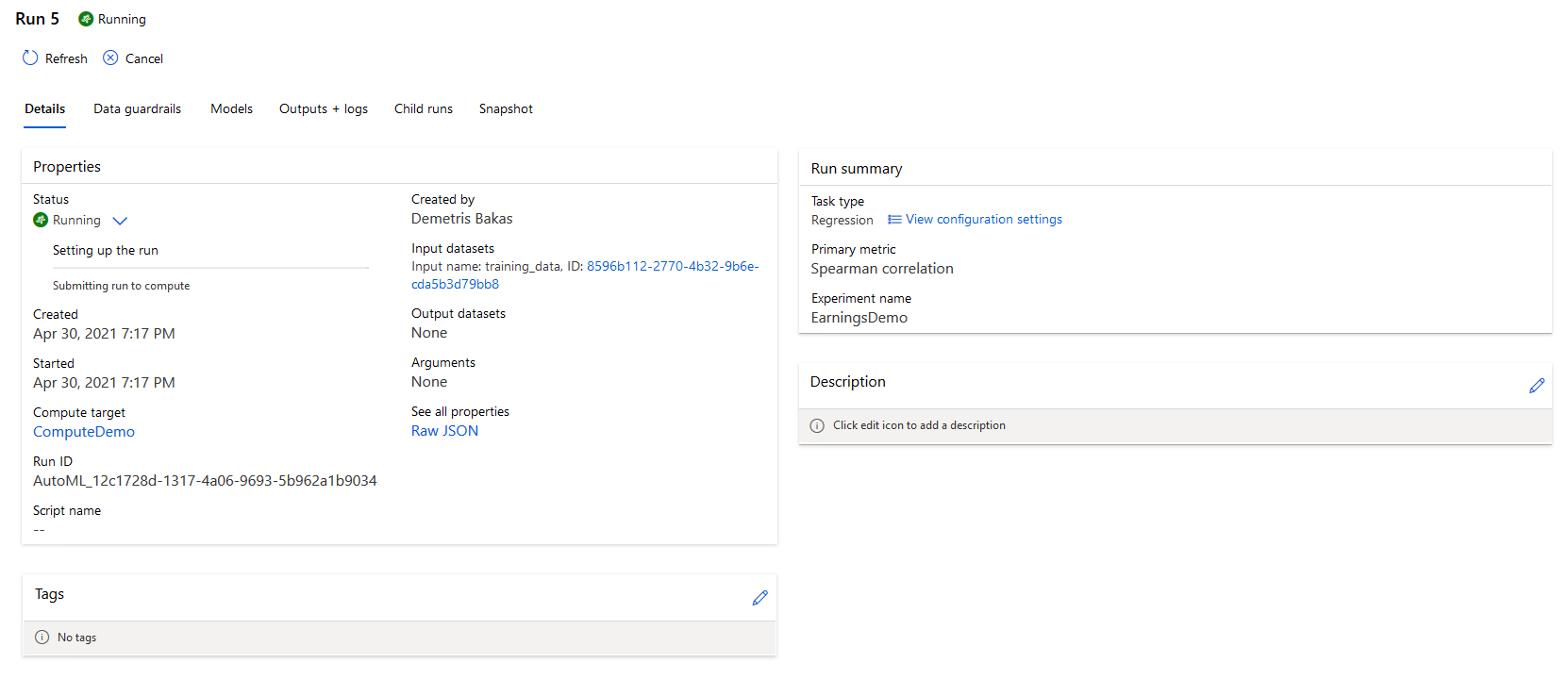

Now your experiment is running. It might take several minutes (or hours), depending on the nature of the experiment and the processing power of your compute module.

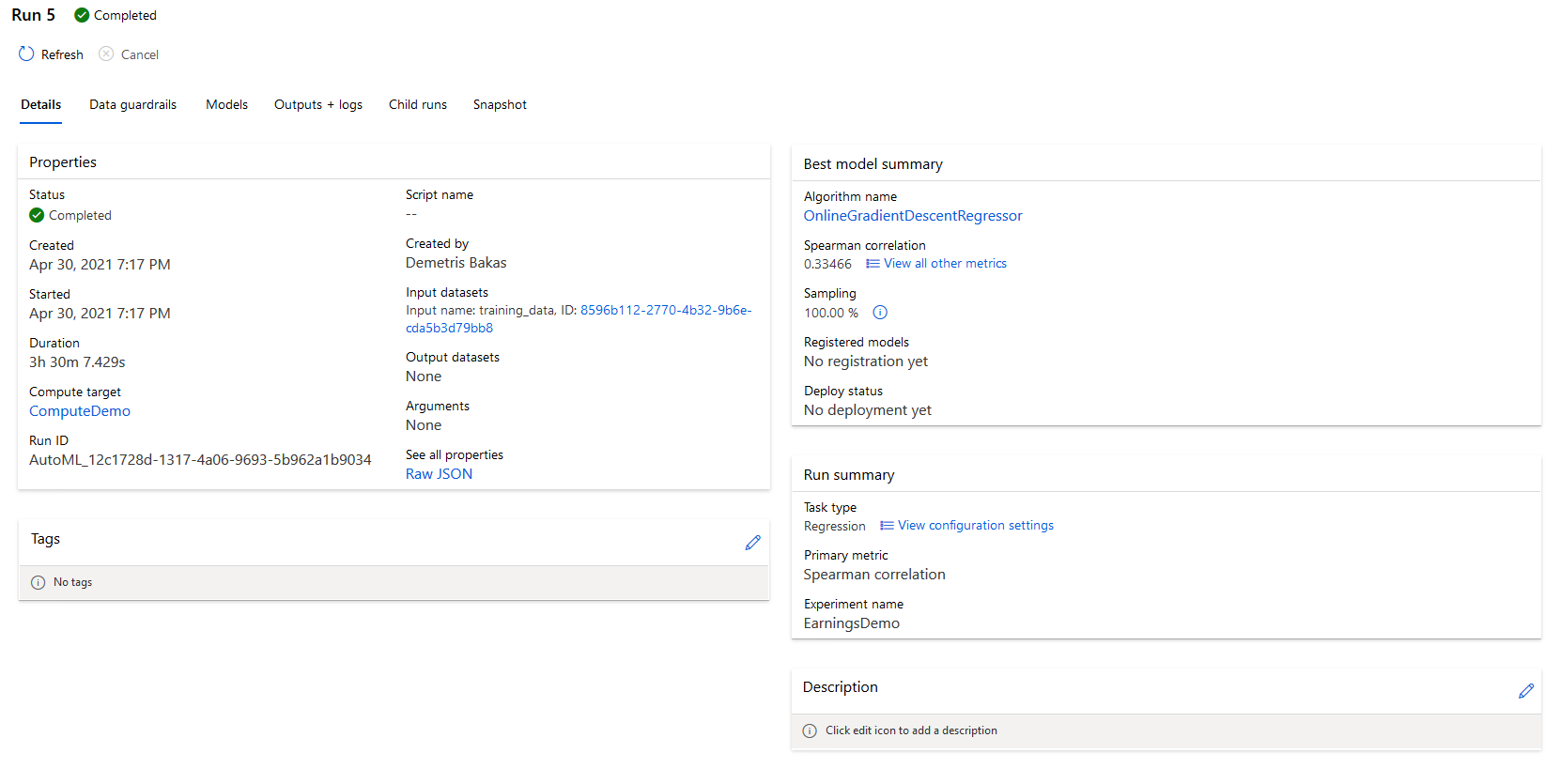

And here it is, your run has completed!

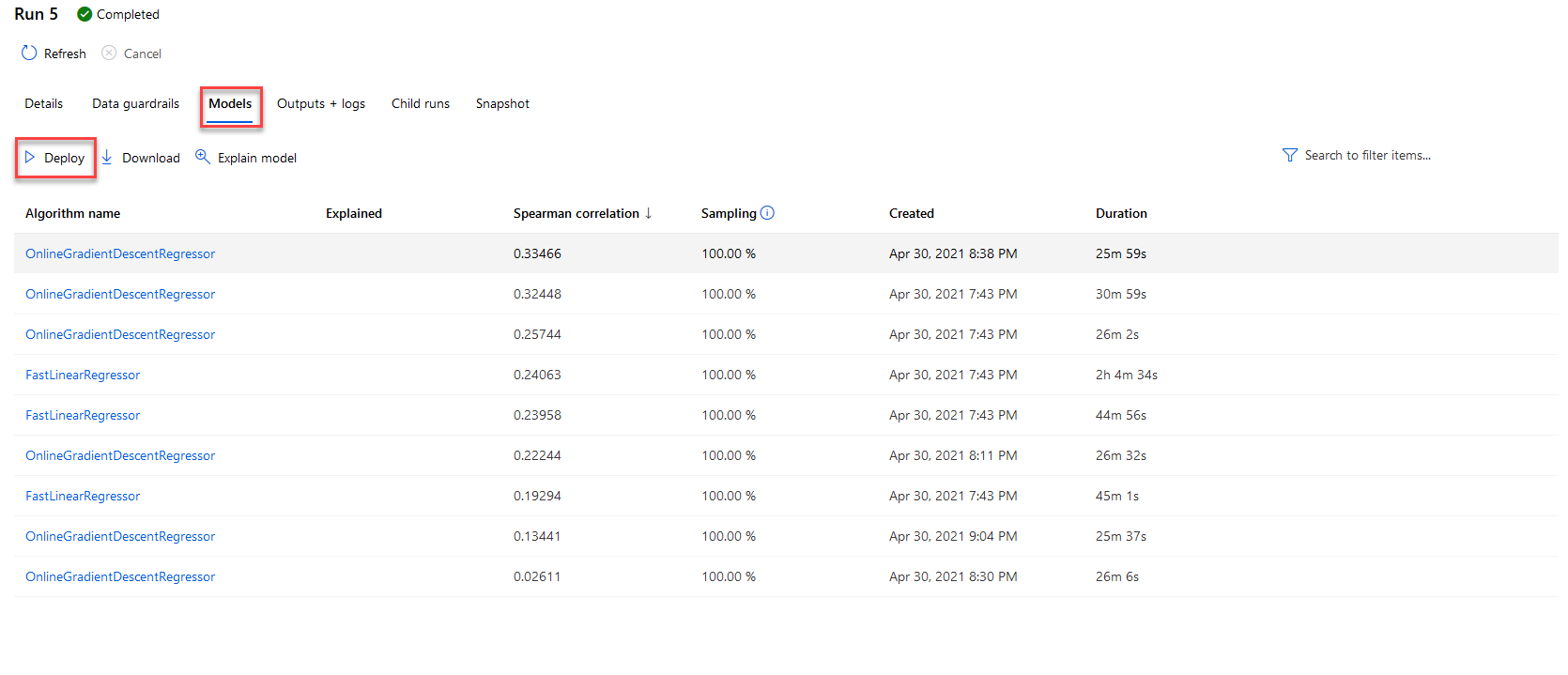

You can deploy your model by going into the Models tab, selecting your model and clicking Deploy.

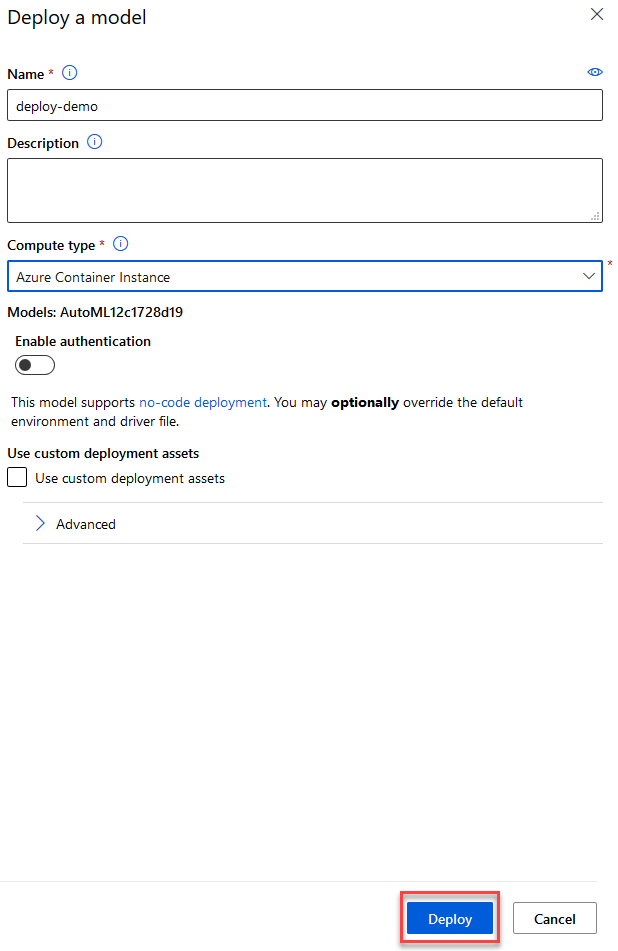

Give a Name and a Compute Type for your deployment. The Compute Type is the container that your deployment will be running inside.

After that click Deploy.

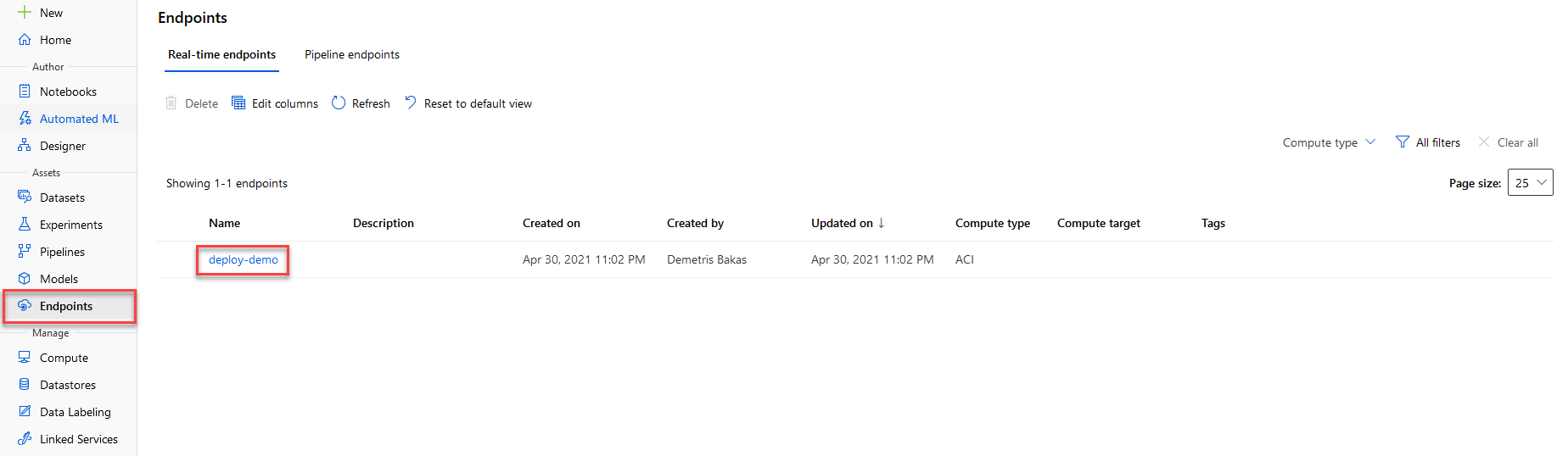

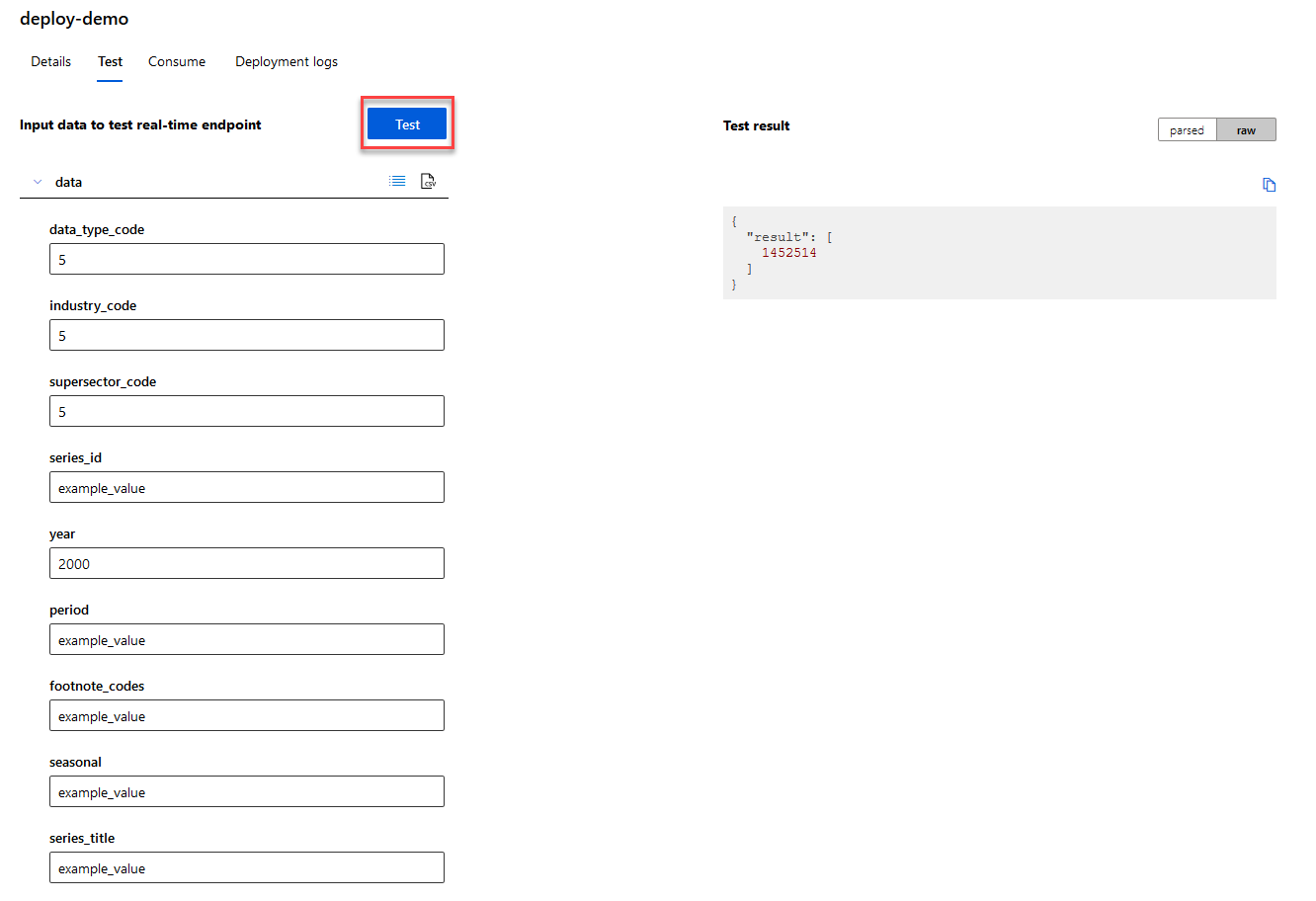

After deploying it, go to the Endpoints tab and you can find the endpoint that you just created there. You can click on it to do a fast test.

Go to the Test tab, input the details you want to test, and click on the Test button.

And that’s how you train your own model with your data in Azure Machine Learning!

Proactive Messages in ASP.NETCore 3.1.1

April 19, 2021

Proactive messaging provides your bot the ability to notify the user with messages that can be written and modified by the developer.

Preface

This post serves as an update to an older post I made for proactive messages. It has come to my attention that my older post does not work for newer versions of .NETCore, thus an update is in order.

Create

Let’s get to it!

In this demo we will be using a basic bot created in Azure using Azure Bot Service. To create one you can visit this post.

Open your Visual Studio project and create a new class named NotifyController.cs. This is the controller that handles the proactive messages. In line 42 you can change the message that is presented to the user by the bot. In line 35 you can find the page that gets loaded when you hit the endpoint.

using System.Collections.Concurrent;

using System.Net;

using System.Threading;

using System.Threading.Tasks;

using Microsoft.AspNetCore.Mvc;

using Microsoft.Bot.Builder;

using Microsoft.Bot.Builder.Integration.AspNet.Core;

using Microsoft.Bot.Schema;

namespace Microsoft.BotBuilderSamples.Dialogs

{

[Route("api/notify")]

[ApiController]

public class NotifyController : ControllerBase

{

private IBotFrameworkHttpAdapter _externAdapter;

private ConcurrentDictionary<string, ConversationReference> _userReference;

public NotifyController(IBotFrameworkHttpAdapter adapter, ConcurrentDictionary<string, ConversationReference> conReferences)

{

_externAdapter = adapter;

_userReference = conReferences;

}

public async Task<IActionResult> Get()

{

foreach (var conversationReference in _userReference.Values)

{

await ((BotAdapter)_externAdapter).ContinueConversationAsync(string.Empty, conversationReference,

ExternalCallback, default(CancellationToken));

}

var result = new ContentResult();

result.StatusCode = (int)HttpStatusCode.OK;

result.ContentType = "text/html";

result.Content = "<html>Proactive messages have been sent.</html>";

return result;

}

private async Task ExternalCallback(ITurnContext turnContext, CancellationToken cancellationToken)

{

await turnContext.SendActivityAsync(MessageFactory.Text("This is a proactive message!"), cancellationToken);

}

}

}

Implement

Open the Startup.cs class and add the following using statements.

using System.Collections.Concurrent;

using Microsoft.Bot.Schema;

In the ConfigureServices function add the following service.

services.AddSingleton<ConcurrentDictionary<string, ConversationReference>>();
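The dictionary is registered as a singleton so that the bot, which records the references, and the controller, which reads them, both receive the same instance. The sketch below shows that idea without the dependency injection container. Recorder and Sender are hypothetical stand-ins for DialogBot and NotifyController, and strings stand in for ConversationReference.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical stand-in for DialogBot: writes references.
public class Recorder
{
    private readonly ConcurrentDictionary<string, string> _references;

    public Recorder(ConcurrentDictionary<string, string> references) => _references = references;

    public void Record(string userId, string reference) =>
        _references.AddOrUpdate(userId, reference, (key, existing) => reference);
}

// Hypothetical stand-in for NotifyController: reads references.
public class Sender
{
    private readonly ConcurrentDictionary<string, string> _references;

    public Sender(ConcurrentDictionary<string, string> references) => _references = references;

    public int PendingCount => _references.Count;
}

public static class SingletonDemo
{
    public static void Main()
    {
        // One shared instance, which is what a singleton registration
        // hands to every class that asks for the dictionary.
        var shared = new ConcurrentDictionary<string, string>();
        var recorder = new Recorder(shared);
        var sender = new Sender(shared);

        recorder.Record("user-1", "conversation A");
        Console.WriteLine(sender.PendingCount); // 1
    }
}
```

If each class received its own dictionary instead, the sender would never see what the recorder stored.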

Open the DialogBot.cs and add the following using statement.

using System.Collections.Concurrent;

Add the ConcurrentDictionary field to the class.

private ConcurrentDictionary<string, ConversationReference> _userConversationReferences;

Change the constructor by adding the ConcurrentDictionary in line 1 and line 7.

public DialogBot(ConversationState conversationState, UserState userState, T dialog, ILogger<DialogBot<T>> logger, ConcurrentDictionary<string, ConversationReference> userConversationReferences)

{

ConversationState = conversationState;

UserState = userState;

Dialog = dialog;

Logger = logger;

_userConversationReferences = userConversationReferences;

}

Add this function at the end of the class.

protected override Task OnConversationUpdateActivityAsync(ITurnContext<IConversationUpdateActivity> turnContext, CancellationToken cancellationToken)

{

if (turnContext.Activity is Activity activity)

{

var conReference = activity.GetConversationReference();

_userConversationReferences.AddOrUpdate(conReference.User.Id, conReference,

(key, newValue) => conReference);

}

return base.OnConversationUpdateActivityAsync(turnContext, cancellationToken);

}

Lastly, open the DialogAndWelcomeBot.cs class and add the following using statement.

using System.Collections.Concurrent;

Then add the ConcurrentDictionary parameter to the constructor and pass it to the base class, as in lines 1-2.

public DialogAndWelcomeBot(ConversationState conversationState, UserState userState, T dialog, ILogger<DialogBot<T>> logger, ConcurrentDictionary<string, ConversationReference> userConversationReferences)

: base(conversationState, userState, dialog, logger, userConversationReferences)

{

}

Test

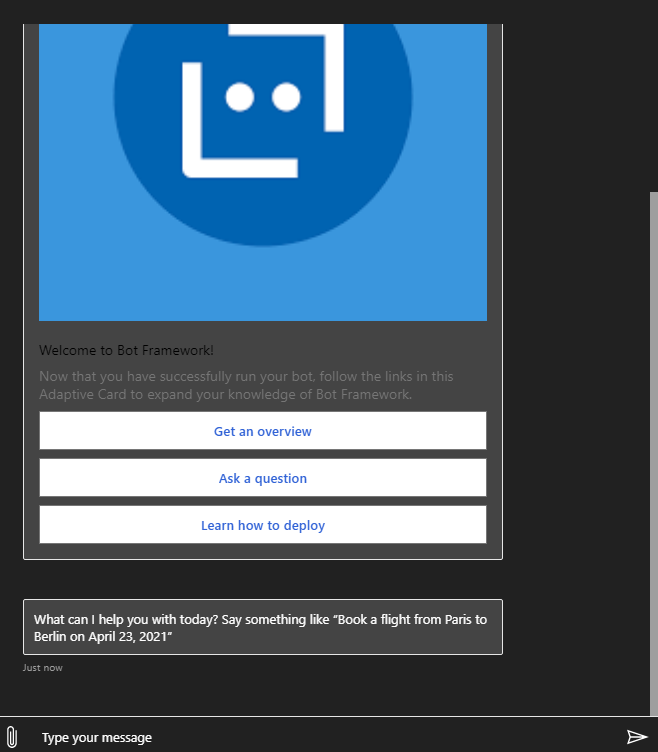

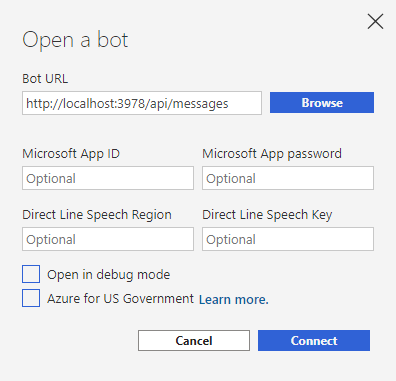

To test, simply run your bot and load up the emulator as usual. You should get the following messages.

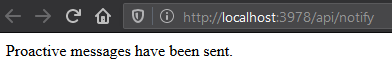

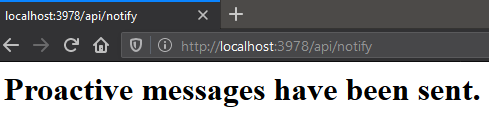

Now to trigger the proactive message click the following link: http://localhost:3978/api/notify

You should see this page in your browser informing you that the Proactive messages have been sent.

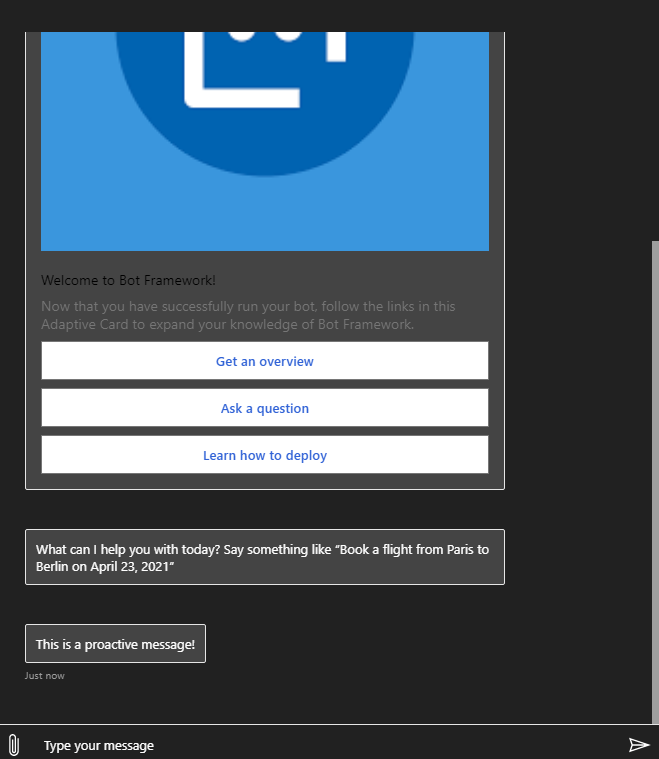

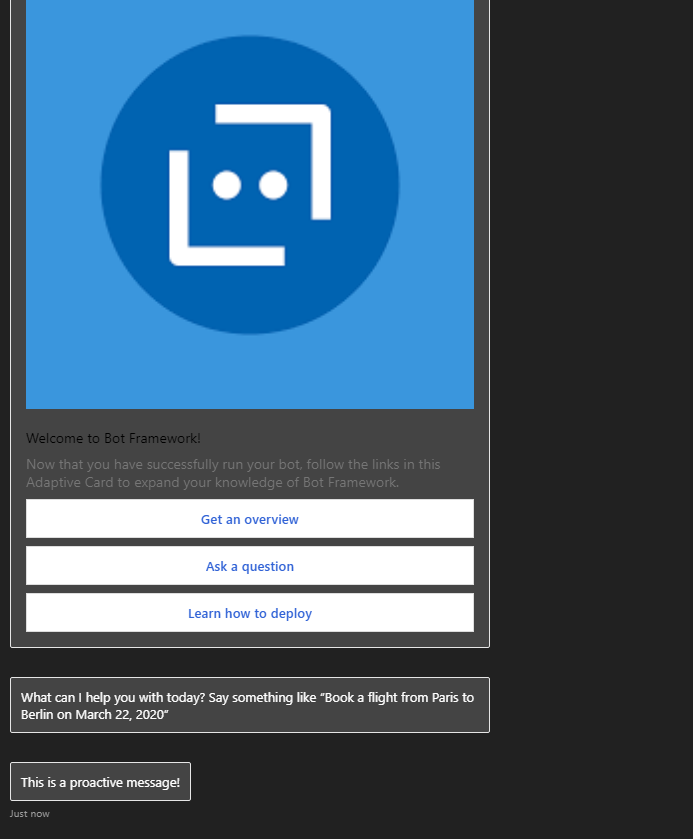

Now you should see the new message in your emulator!

This is how you implement proactive messages to your bot to notify the user in ASP.NETCore 3.1.1!

Get familiar with Azure Machine Learning Studio (classic)

April 09, 2021

Azure Machine Learning Studio (classic) is a drag & drop tool that you can use to build, test, and deploy machine learning models. It publishes models as web services, which can easily be consumed by custom apps or BI tools such as Excel. ML Studio (classic) is a standalone service that only offers a visual experience. It does not interoperate with Azure Machine Learning.

Azure Machine Learning is a separate, and modernized, service that delivers a complete data science platform. It supports both code-first and low-code experiences. It is not covered in this post, but it will be covered in the future.

Create

The scope of this post is to create a sample experiment in order to get familiar with the environment of Azure Machine Learning Studio (classic) and how it functions.

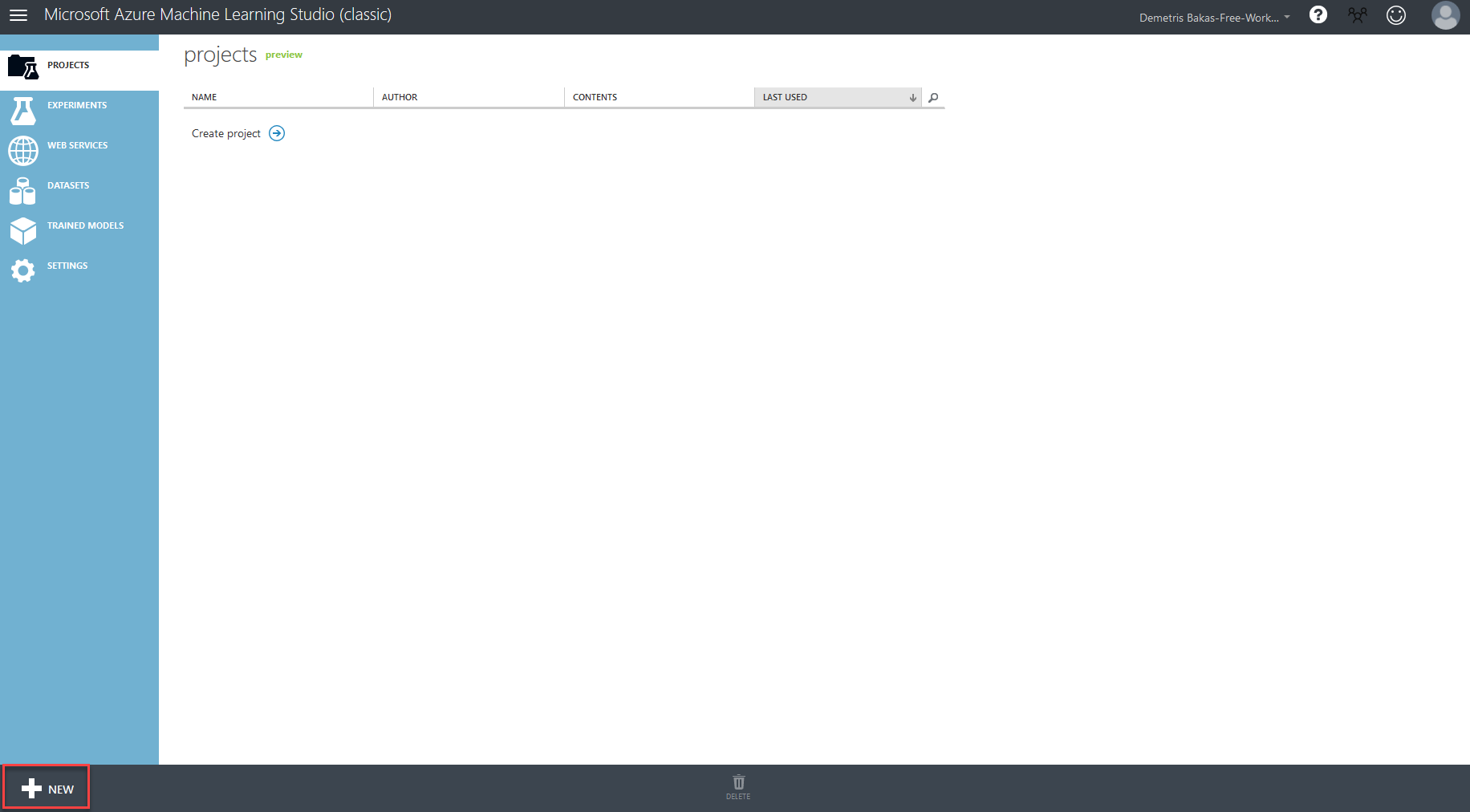

To begin, visit https://studio.azureml.net/ and log in, or create a free account. Then click NEW at the bottom left of your screen.

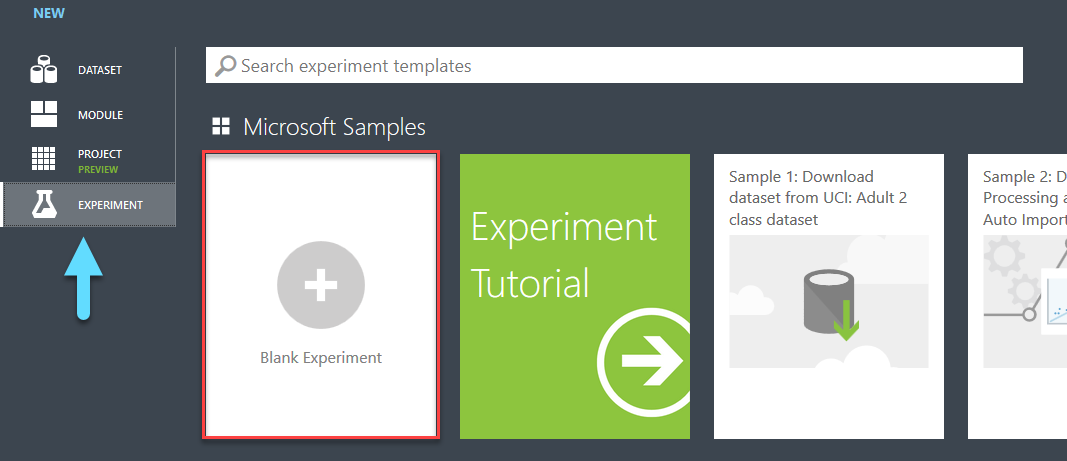

Navigate to the EXPERIMENT tab and click Blank Experiment. You can also try out the Experiment Tutorial which will show you the steps as well.

Input Dataset

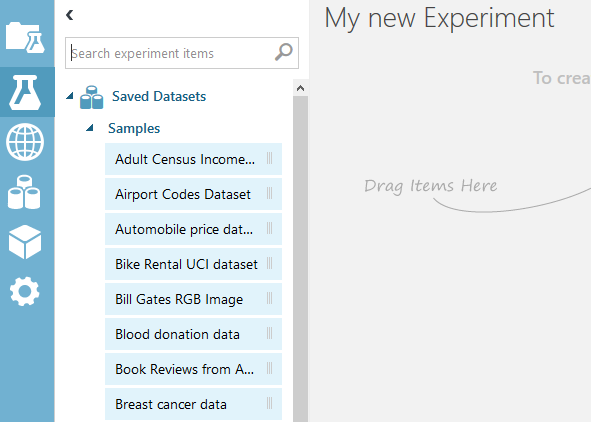

You can use a sample dataset from Saved Datasets -> Samples from the sidebar. We will be using the Adult Census Income Binary for this example. You can also change the name of your experiment if you like.

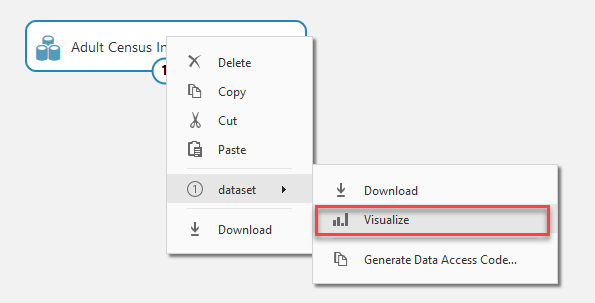

If you want to take a better look at your dataset you can right click on it and go to dataset -> Visualize.

Here you can see a visualization of our current dataset.

Implement Model

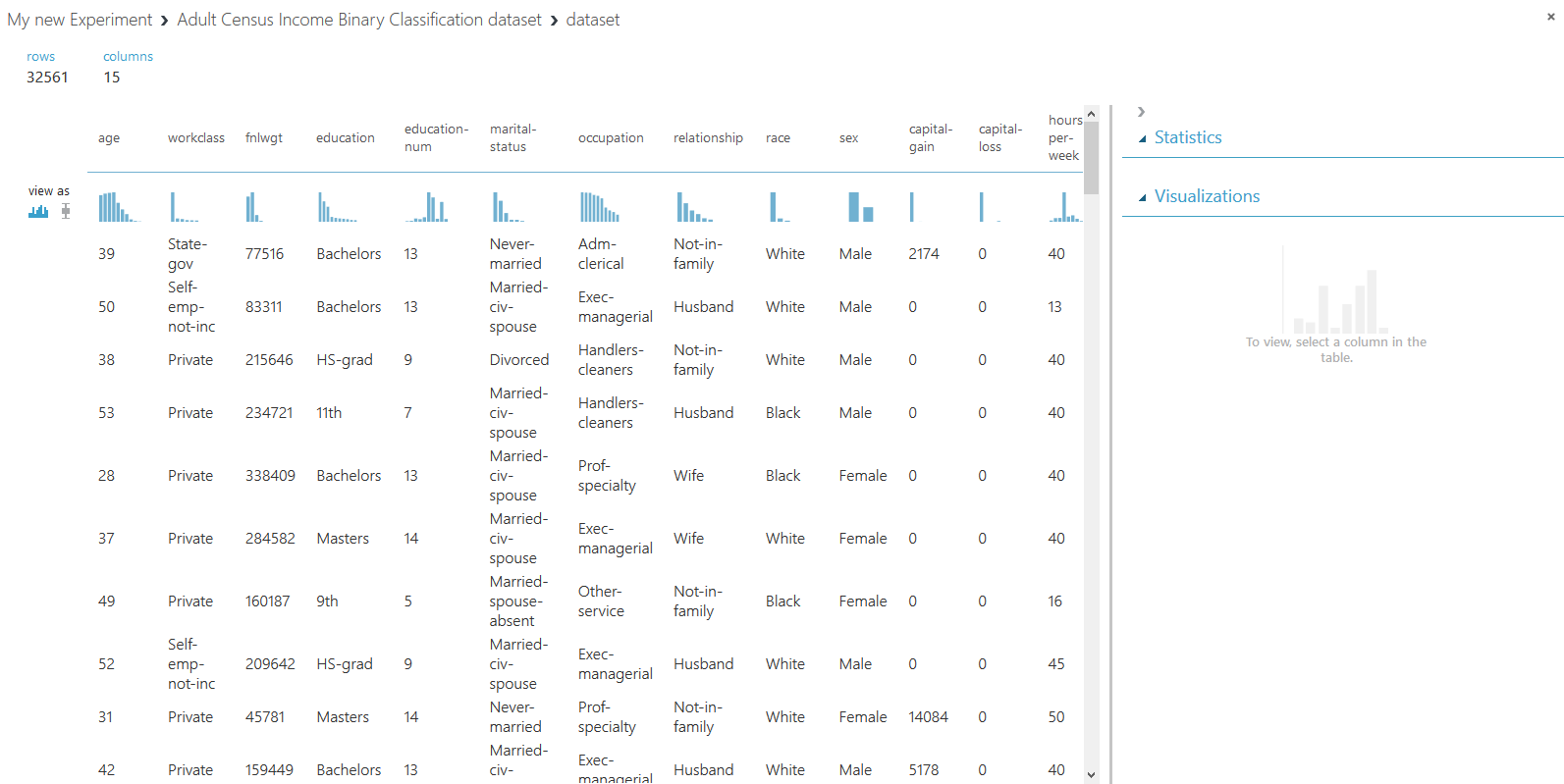

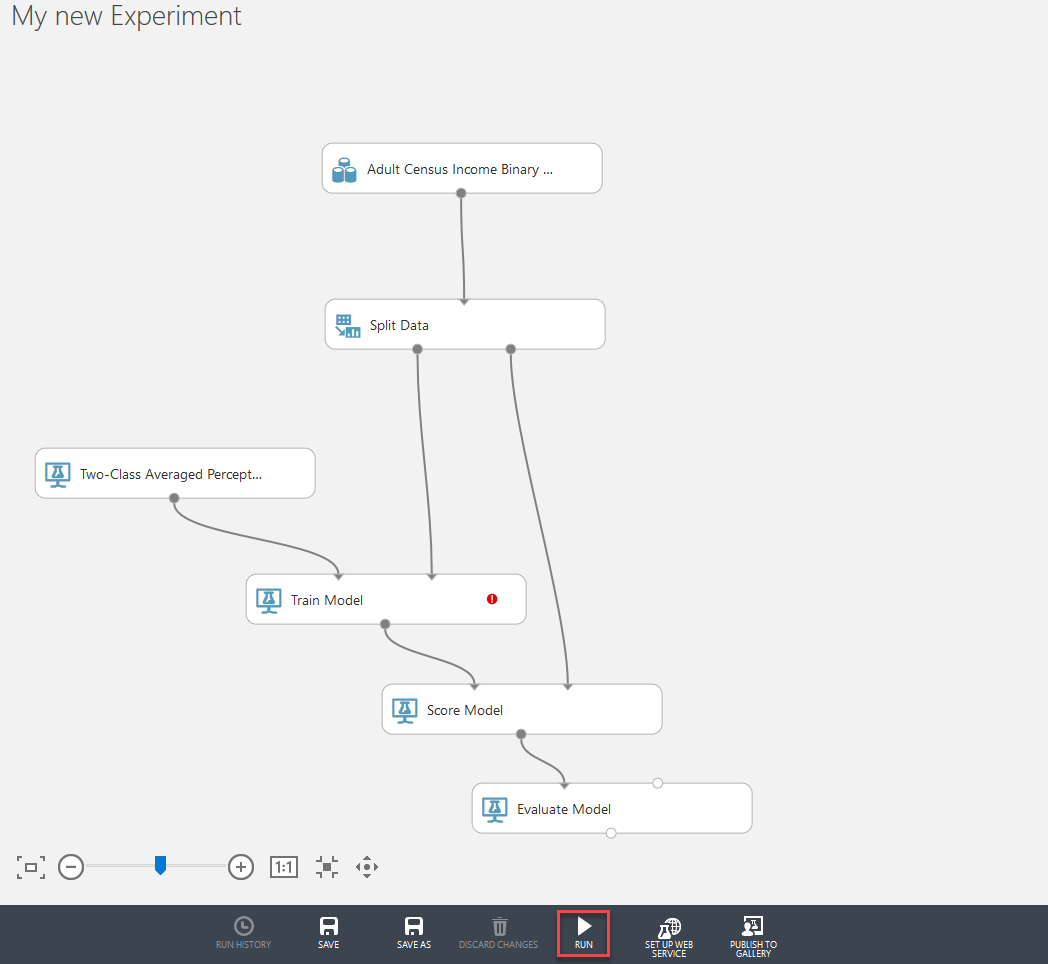

Go to Data Transformation -> Sample and Split in the sidebar and drag & drop the Split Data module into your experiment.

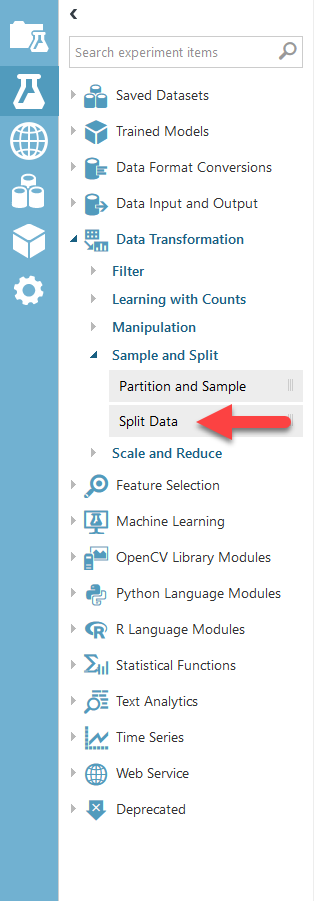

Connect the Split Data module with your dataset like in the picture below. You can click on the Split Data module inside your experiment to view the properties of the split at the right sidebar. There you can change the properties as you please, but we are using the default values for now.

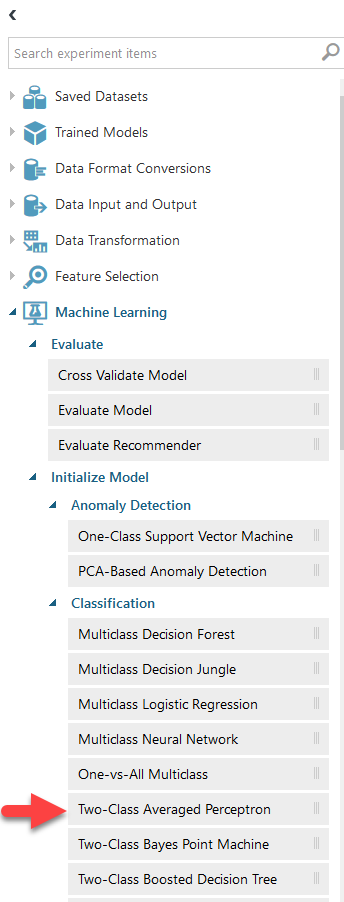

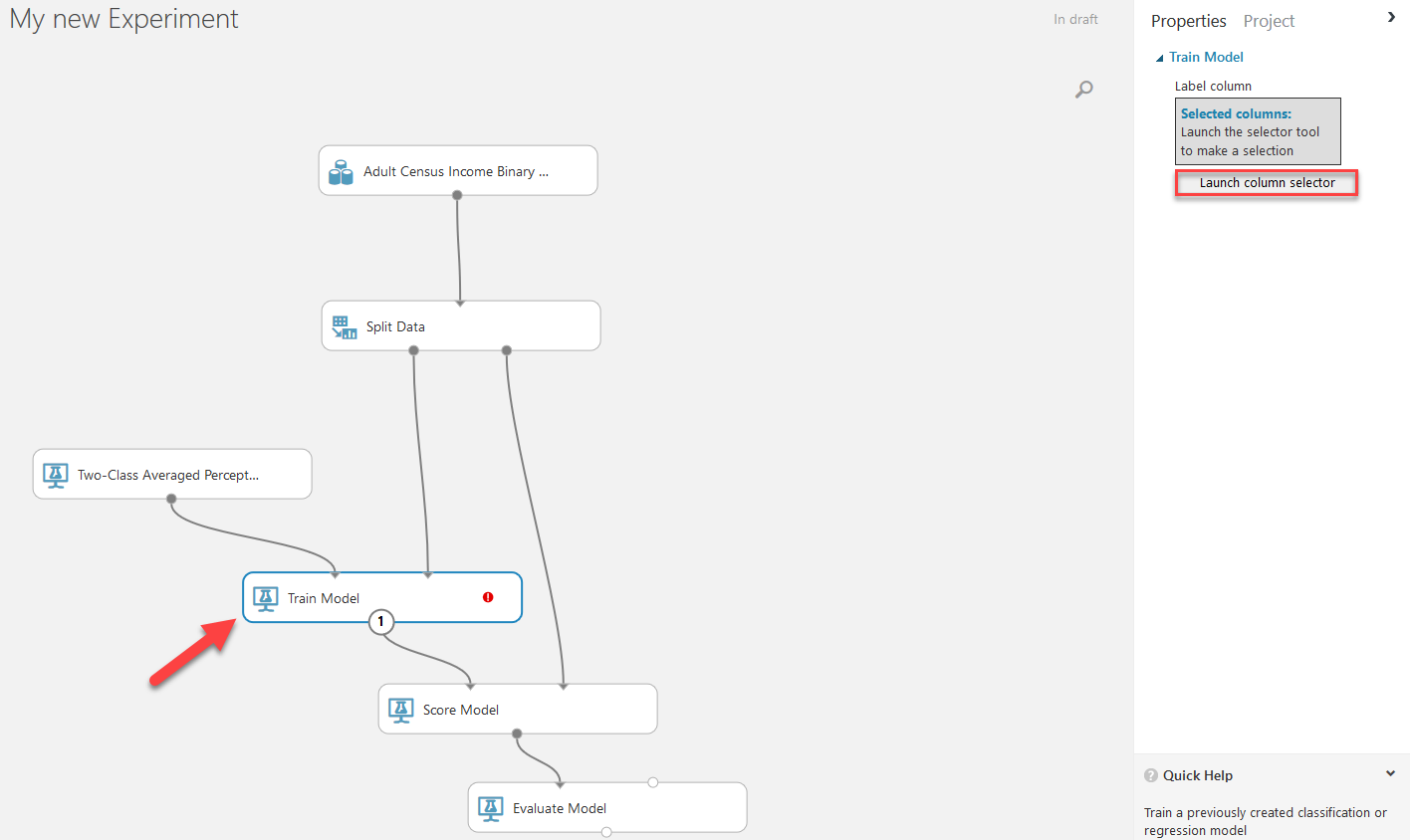

Here you can choose a model. We are choosing Two-Class Averaged Perceptron, but you can change that to whatever best fits the task you want to achieve. Once you have made your choice, drag & drop your model into your experiment area.

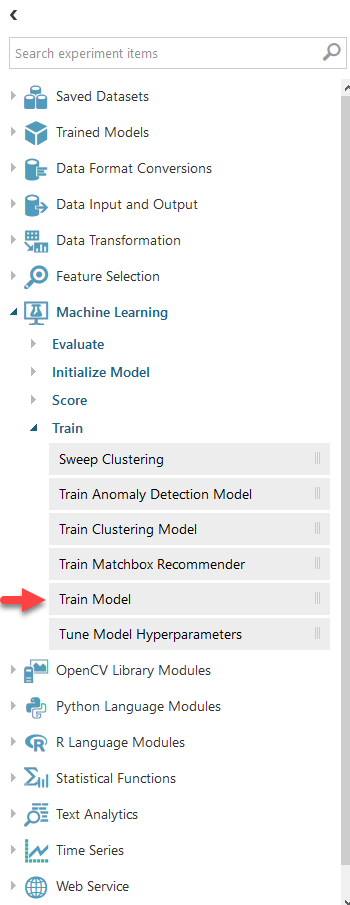

Next, drag & drop the Train Model module to your experiment from Machine Learning -> Train.

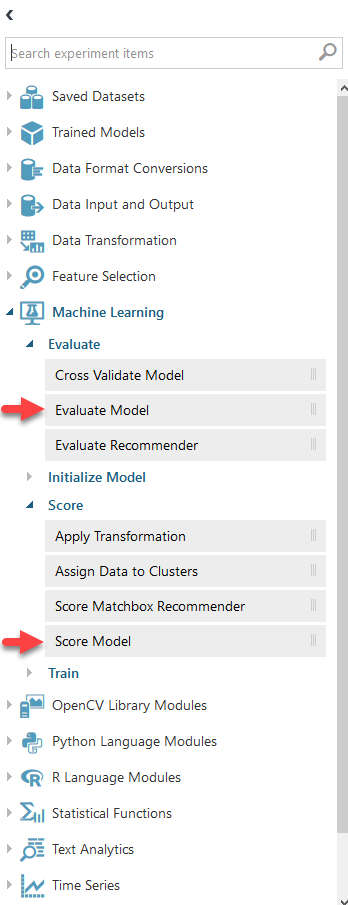

You will also need the Evaluate Model and Score Model modules.

Connect the modules as shown below. Then click on the Train Model module and select Launch column selector from the sidebar at your right.

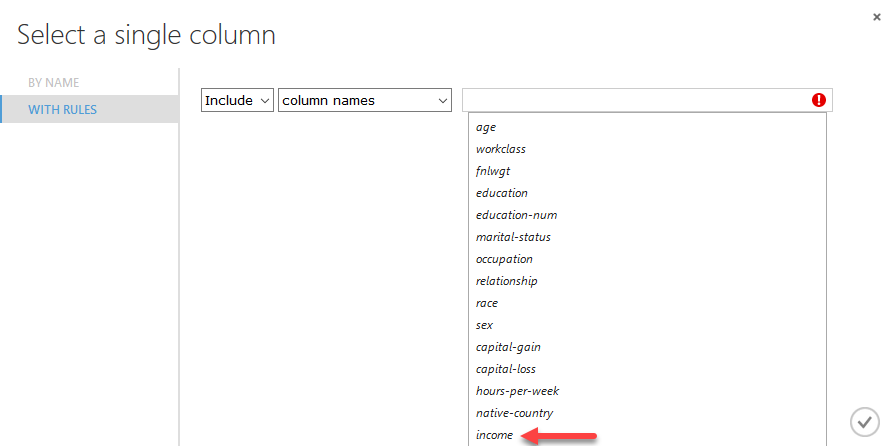

Here you can select the columns you need to include. We are only selecting the income column for now.

When everything is ready, hit RUN and wait for your model to process the dataset.

Visualize Results

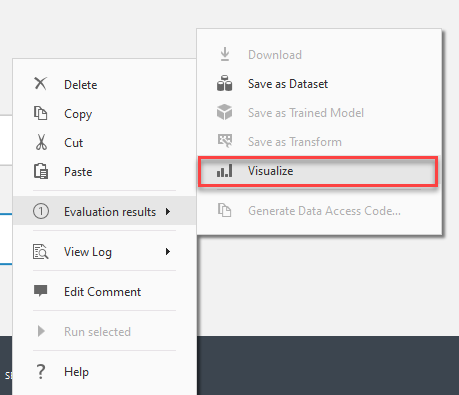

When the processing is finished, you can right click on the Evaluate Model module and go to Evaluation results -> Visualize to visualize your results.

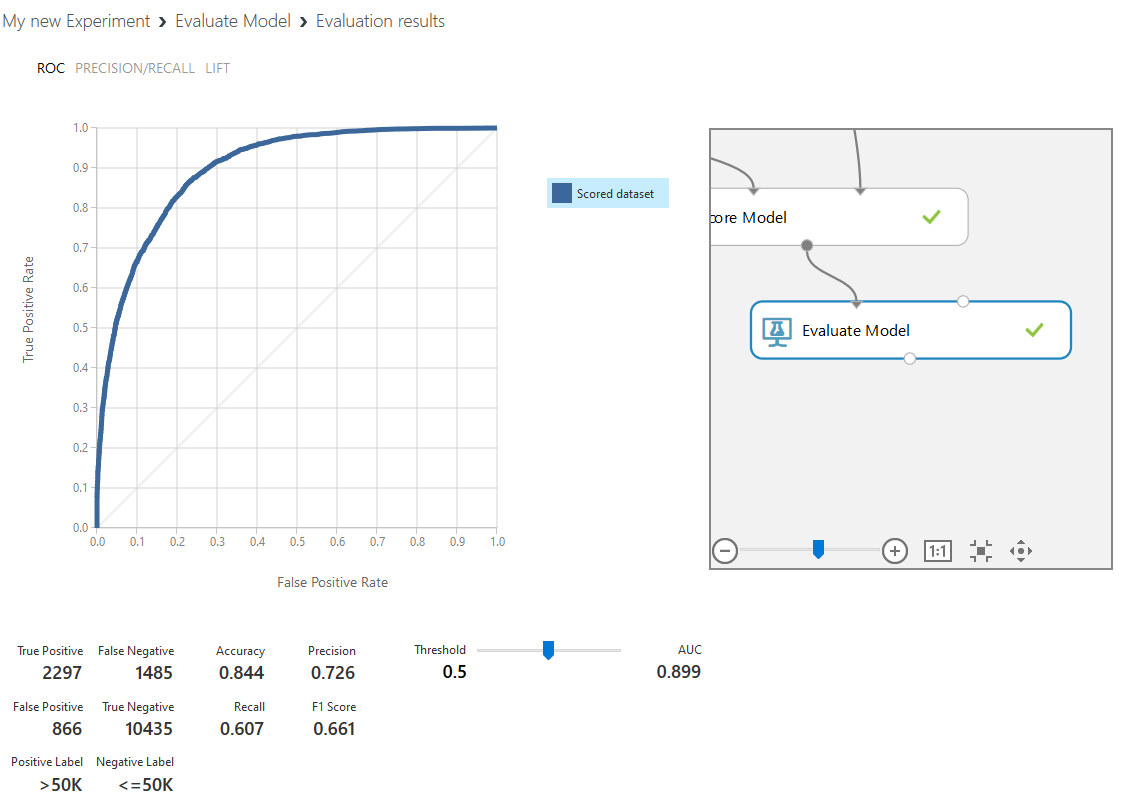

Here are the results for our current example.

And that is how you navigate around Azure Machine Learning Studio (classic) to create your own experiment. Now you are prepared to crunch some numbers!

Give voice to your project using Azure Speech

March 29, 2021

Speech is an Azure service, part of Cognitive Services, that converts text to lifelike speech.

Create

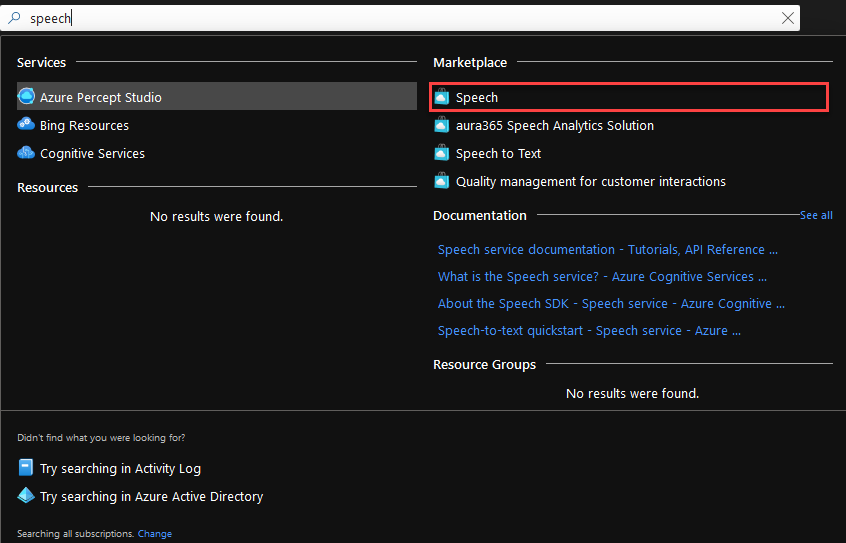

Go to Azure Portal and search for Speech. Select Speech from the Marketplace.

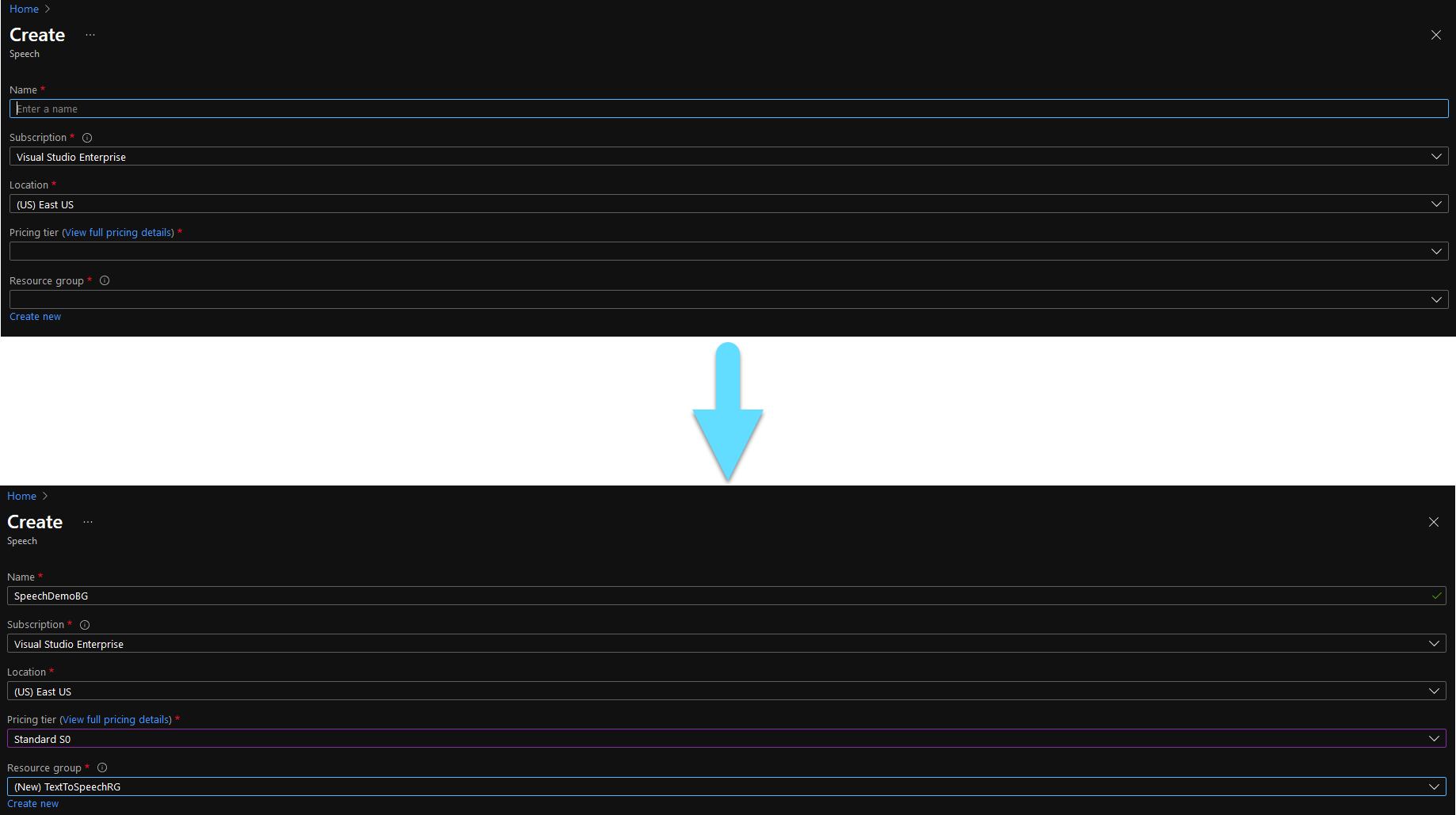

Fill the form. The fields with the ‘*’ are mandatory.

- Name is the name of your new Speech resource.

- Subscription should already be filled in with your default subscription.

- You can leave Region with the pre-selected region.

- Any Pricing tier will do for this demo.

- For Resource Group, you can use an existing one, or create a new one.

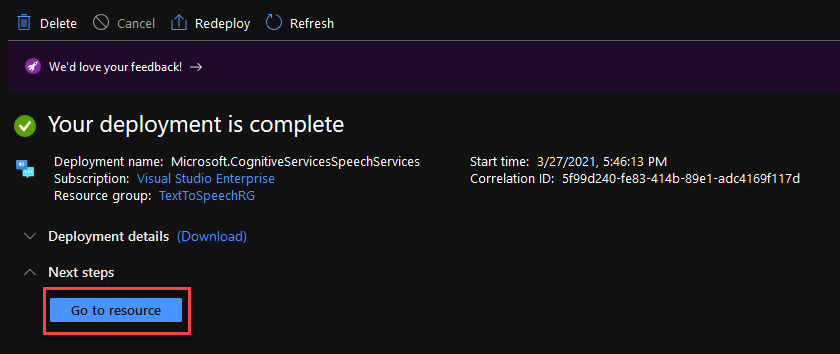

Click Create to deploy your resource. This might take a few minutes. After the deployment is done click Go to resource.

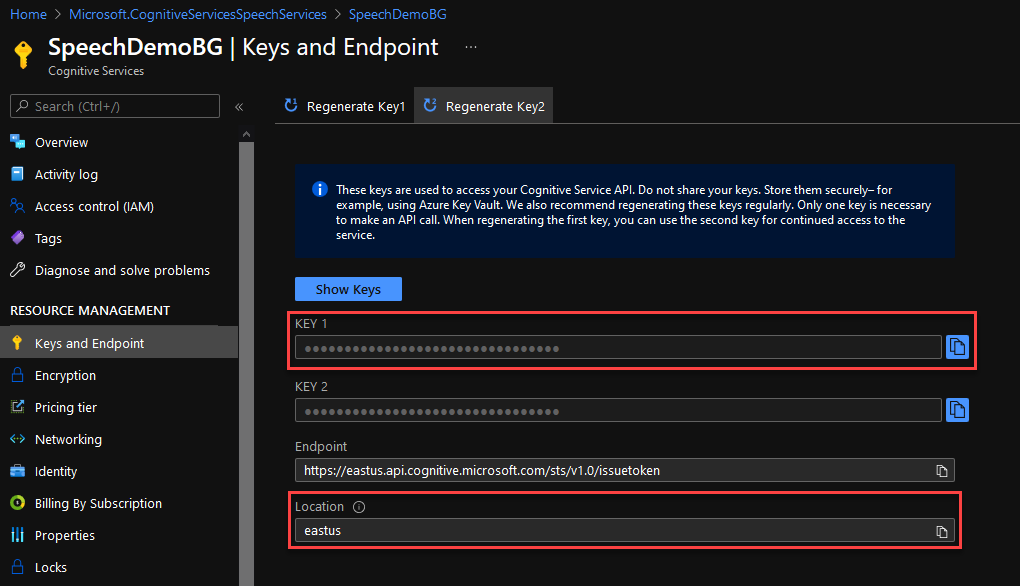

Navigate to the Keys and Endpoint tab at the left of your window. From here you can grab a Key and your Location. You will need these later.

Implement

Open your existing project in Visual Studio. If you are not working on an existing project, simply create a C# (.NET Core) Console app.

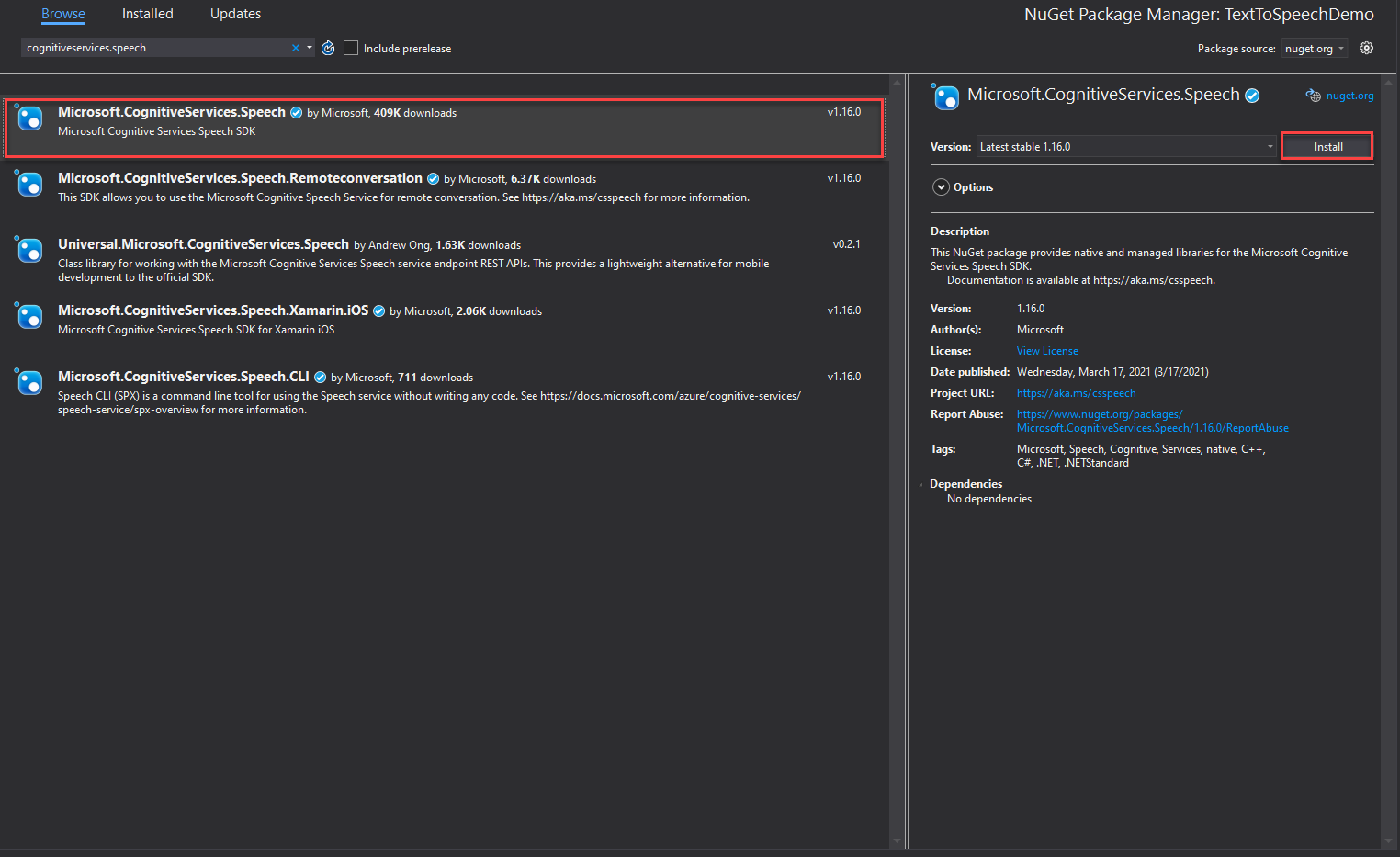

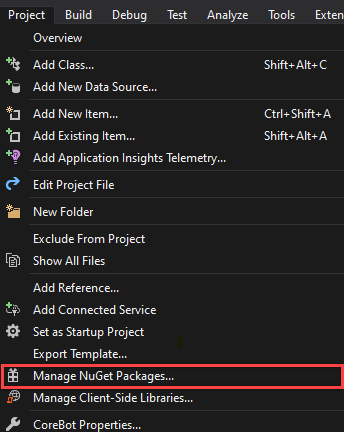

Navigate to Project -> Manage NuGet Packages, find and install the Microsoft.CognitiveServices.Speech package.

Open the class that you need to implement the speech synthesizer in. For a new project you can use the Program.cs.

Add the using statements you see below at the top of the file.

using System.Threading.Tasks;

using Microsoft.CognitiveServices.Speech;

Replace your Main with the code below. Do not worry about the SynthesizeAudioAsync function; we will implement it in the next step. The input argument of the function is the text that is going to get synthesized, and you can change it to anything you like.

static async Task Main()

{

await SynthesizeAudioAsync("Sample text to get synthesized.");

}

This is the function that connects to the Azure resource and synthesizes the text. Implement it under your Main function. The first argument of the FromSubscription is your key and the second your location.

static async Task SynthesizeAudioAsync(string textToSpeech)

{

var config = SpeechConfig.FromSubscription("7be282a06KEY_HEREb37d0c8f4a34", "eastus");

using var synthesizer = new SpeechSynthesizer(config);

await synthesizer.SpeakTextAsync(textToSpeech);

}
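Rather than hard-coding the key and location passed to FromSubscription, you may prefer to read them from configuration. Here is a minimal sketch, assuming environment variables named SPEECH_KEY and SPEECH_REGION. These names are hypothetical and not defined by the Speech SDK.

```csharp
using System;

public static class SpeechSettings
{
    // Reads a required setting from the environment and fails fast when it is missing.
    public static string GetRequired(string name)
    {
        var value = Environment.GetEnvironmentVariable(name);
        if (string.IsNullOrWhiteSpace(value))
            throw new InvalidOperationException($"Environment variable '{name}' is not set.");
        return value;
    }

    public static void Main()
    {
        // Set here only so the demo runs on its own; normally the variable
        // is defined outside the program.
        Environment.SetEnvironmentVariable("SPEECH_REGION", "eastus");

        string region = GetRequired("SPEECH_REGION");
        Console.WriteLine(region); // eastus

        // Inside SynthesizeAudioAsync the literals could then become:
        // SpeechConfig.FromSubscription(GetRequired("SPEECH_KEY"), region);
    }
}
```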

If you would like to try out more examples, or even output your spoken text to a file follow this link.

Test

Here is a sample of the output from the code above.

This is how you can rapidly integrate a speech synthesizer into your project!

Change Choice Prompt appearance in Azure Bot Services

March 19, 2021

One of the most important reasons we use chatbots to convey information to the user is that we aim to provide the best possible interface, convincing the user to try our application because it is easier and more fun to use. With this in mind, we come to the realization that the interface is key to what the user perceives as a good application, one they would like to spend time using.

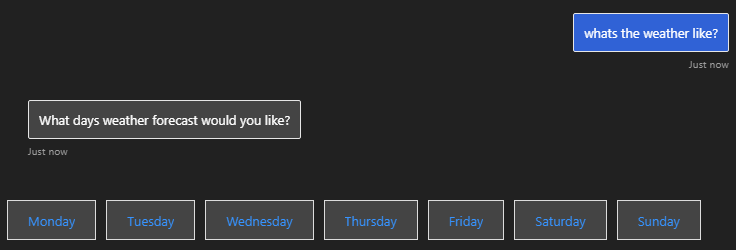

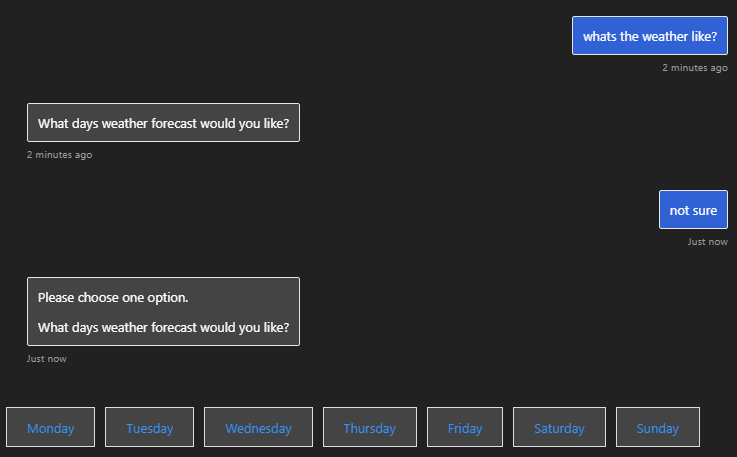

Helping us with this task are Choice Prompts, which provide a set of predetermined answers for the user to choose from. This makes the job easier not only for the developer but for the user as well. Developers do not need to think about every possible answer, only the ones given to the user beforehand, and users get answers suggested by the interface itself, so they do not need to think about what to say. (If you would like to create your own Choice Prompts you can find how to do it here.)

However, what might look like an incredibly helpful tool is only as helpful as the interface displaying it. The appearance of the prompt makes a big difference to whether the user will enjoy it or not. This is where ListStyle comes in handy. This option lets you customize the appearance of your choice prompts and choose the one best suited to the application you have in mind.

Let’s have a look at all the options available:

- auto: Automatically select the appropriate style for the current channel.

- heroCard: Add choices to prompt as a HeroCard with buttons.

- inline: Add choices to prompt as an inline list.

- list: Add choices to prompt as a numbered list.

- none: Don’t include any choices for prompt.

- suggestedAction: Add choices to prompt as suggested actions.

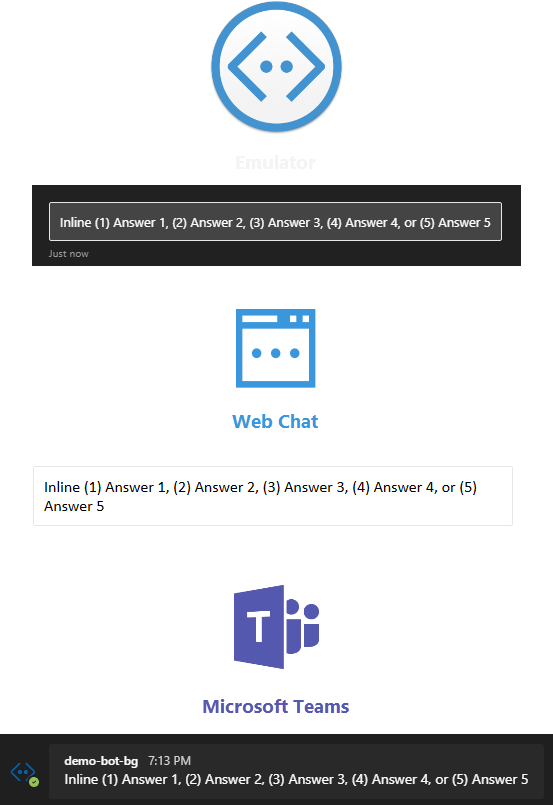

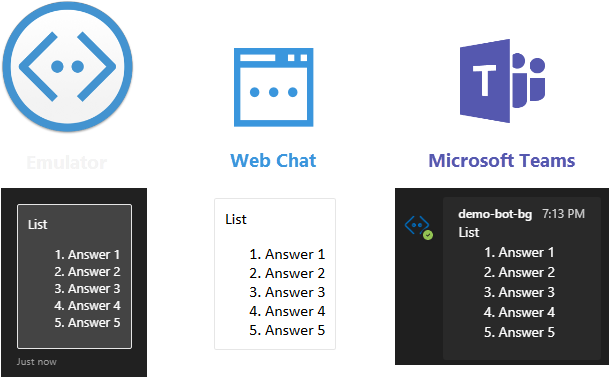

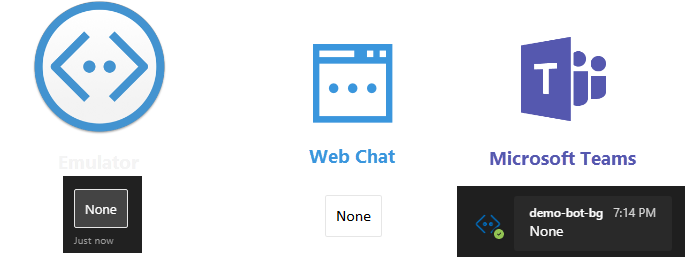

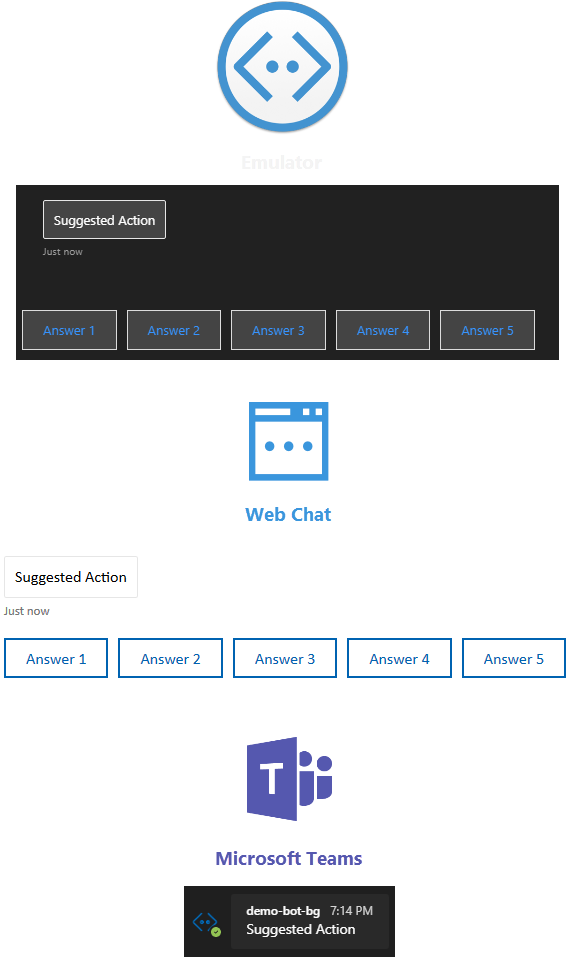

With this information, I decided to try all the options on commonly used channels. The channels I selected are Web Chat and Microsoft Teams. I also include the appearance inside the Emulator to help you out.

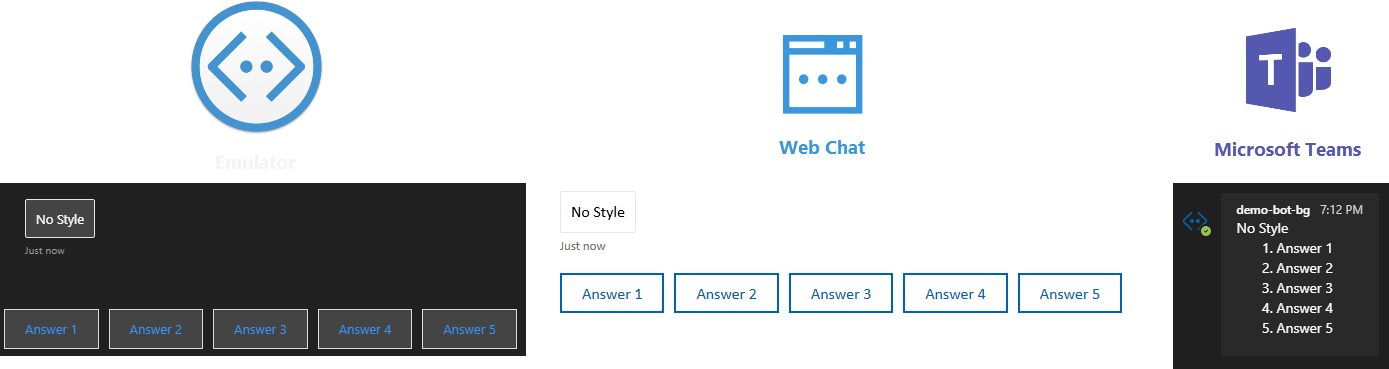

No Style

return await stepContext.PromptAsync(nameof(ChoicePrompt), new PromptOptions { Prompt = MessageFactory.Text("No Style"), Choices = choiceList }, cancellationToken);

This is how your choice prompt will look without any ListStyle option selected. As you can see, despite having all the options as buttons in the emulator and web chat, Microsoft Teams displays only a list, and the user is expected to type the number corresponding to their choice. Keep in mind that the appearance might change depending on the number of answers you need to display.

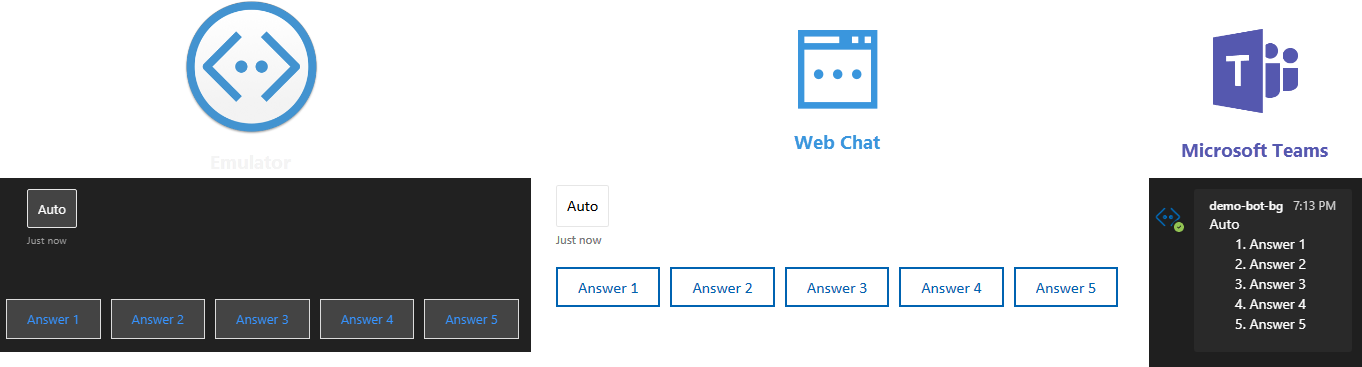

Auto

return await stepContext.PromptAsync(nameof(ChoicePrompt), new PromptOptions { Prompt = MessageFactory.Text("Auto"), Choices = choiceList, Style = ListStyle.Auto }, cancellationToken);

Auto seems to work exactly like not choosing any style option, as you can see above.

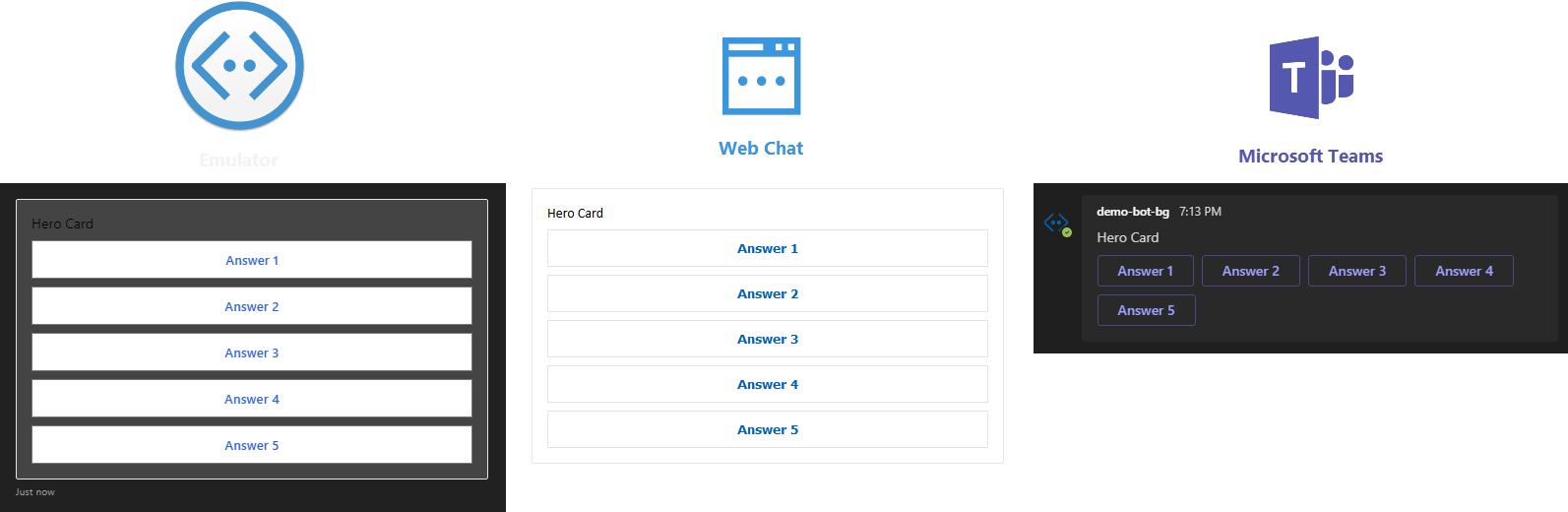

Hero Card

return await stepContext.PromptAsync(nameof(ChoicePrompt), new PromptOptions { Prompt = MessageFactory.Text("Hero Card"), Choices = choiceList, Style = ListStyle.HeroCard }, cancellationToken);

Although Hero Card might look a bit bulky in the emulator and web chat, in Microsoft Teams it looks exactly like the rest of the channels in the previous examples, which in my opinion is really intuitive for the user.

Inline

return await stepContext.PromptAsync(nameof(ChoicePrompt), new PromptOptions { Prompt = MessageFactory.Text("Inline"), Choices = choiceList, Style = ListStyle.Inline }, cancellationToken);

Inline has the same appearance throughout the different channels. However, in my opinion, it should only be used in cases where the available space that the bot interface is displayed on is limited.

List

return await stepContext.PromptAsync(nameof(ChoicePrompt), new PromptOptions { Prompt = MessageFactory.Text("List"), Choices = choiceList, Style = ListStyle.List }, cancellationToken);

List is also consistent throughout the channels and provides a numbered list, with the user expected to type the number corresponding to their choice. In my opinion this is not ideal, but it can be useful when there are too many options available.

None

return await stepContext.PromptAsync(nameof(ChoicePrompt), new PromptOptions { Prompt = MessageFactory.Text("None"), Choices = choiceList, Style = ListStyle.None }, cancellationToken);

None is not helpful in our case because it does not provide any answers to the user.

Suggested Action

return await stepContext.PromptAsync(nameof(ChoicePrompt), new PromptOptions { Prompt = MessageFactory.Text("Suggested Action"), Choices = choiceList, Style = ListStyle.SuggestedAction }, cancellationToken);

Lastly, although Suggested Action looks good in the emulator and web chat, in Microsoft Teams no answers are provided, making it much less desirable than the rest of the options.
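One way to act on these observations is to pick the style per channel. The sketch below is only one possible policy based on the results above, not a Bot Framework feature. It uses a local enum that mirrors the ListStyle values so that it compiles without the bot packages, and it assumes the channel IDs "msteams", "webchat" and "emulator".

```csharp
using System;

// Local mirror of the ListStyle values discussed above. In a bot, use ListStyle
// from Microsoft.Bot.Builder.Dialogs.Choices instead of this enum.
public enum PromptStyle { Auto, HeroCard, Inline, List, None, SuggestedAction }

public static class PromptStylePicker
{
    // Maps a channel ID to the style that looked best in the tests above.
    public static PromptStyle ForChannel(string channelId)
    {
        switch (channelId)
        {
            case "msteams":
                // Hero Card rendered the choices as buttons in Teams.
                return PromptStyle.HeroCard;
            case "webchat":
            case "emulator":
                // Suggested Action looked good in web chat and the emulator.
                return PromptStyle.SuggestedAction;
            default:
                // Fall back to the channel default elsewhere.
                return PromptStyle.Auto;
        }
    }

    public static void Main()
    {
        Console.WriteLine(ForChannel("msteams"));  // HeroCard
        Console.WriteLine(ForChannel("webchat"));  // SuggestedAction
        Console.WriteLine(ForChannel("facebook")); // Auto
    }
}
```

In a waterfall step, the channel ID would typically come from stepContext.Context.Activity.ChannelId, and the result would be assigned to the Style property of PromptOptions.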

I hope this helps you decide which ListStyle is better suited to your use case to help you achieve the best interface possible!

Translate Text from Pictures using Azure

March 09, 2021

Computer Vision is an AI Service part of the Azure Cognitive Services that analyzes content in images and video.

Translator in Azure is an AI service, part of Azure Cognitive Services, used for real-time text translation and detection. It is fast and easy to implement, to bring intelligence to your text processing projects.

Create

In this post we will build upon two older posts: the post about Computer Vision and the post about Azure Translator. Follow the Create steps of both of these posts to create your Azure resources.

Implement

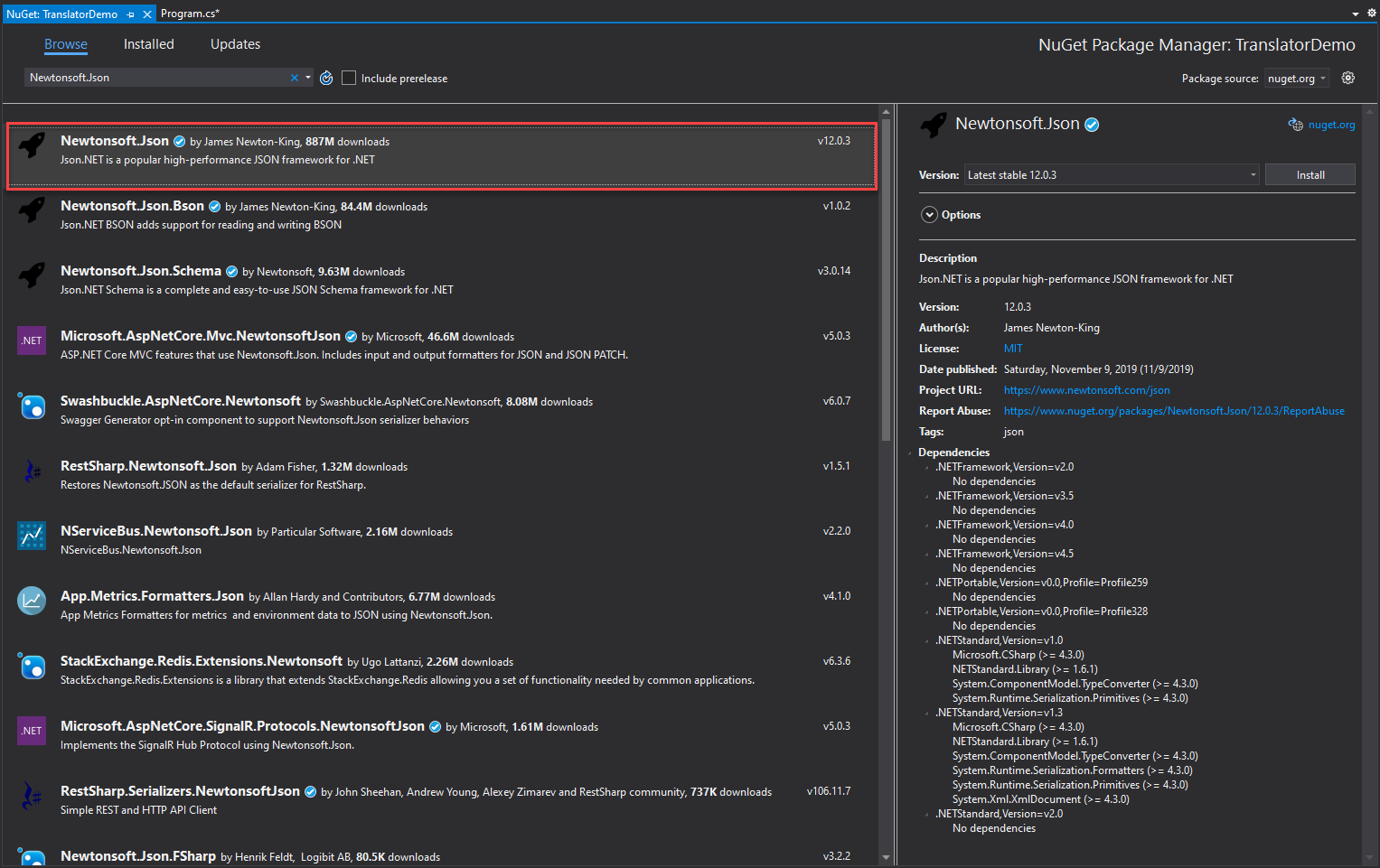

Create a new C# console project in Visual Studio or open an existing one. Follow the previous posts to install the Microsoft.Azure.CognitiveServices.Vision.ComputerVision and Newtonsoft.Json NuGet packages.

Open the class that you need to implement the image analyser and translator in. For a new project you can use the Program.cs.

Add the using statements you see below at the top of the file.

using Microsoft.Azure.CognitiveServices.Vision.ComputerVision;

using Microsoft.Azure.CognitiveServices.Vision.ComputerVision.Models;

using System.Collections.Generic;

using System.Threading.Tasks;

using System.Threading;

using System.Linq;

using System.Net.Http;

using System.Text;

using Newtonsoft.Json;

Input your Subscription Keys, Endpoints and Location (Location is only for the Translator resource). You can see where to find them in the previous posts. The READ_TEXT_URL_IMAGE string at line 9 should contain the URL of the image you wish to analyse.

// Add your Computer Vision subscription key and endpoint

private static readonly string ComputerVisionsubScriptionKey = "1e6cd418eKEY_HERE450704d3e63c";

private static readonly string ComputerVisionEndpoint = "https://compvisiondemobinarygrounds.cognitiveservices.azure.com/";

private static readonly string TranslatorSubscriptionKey = "5b50844fKEY_HERE8be8f6f8f40f7";

private static readonly string TranslatorEndpoint = "https://api.cognitive.microsofttranslator.com/";

private static readonly string TranslatorLocation = "eastus2";

// URL image used for analyzing an image

private const string READ_TEXT_URL_IMAGE = "";

Replace your Main function with the following code. Your Main should be asynchronous because it needs to wait for all the asynchronous functions to return their results before exiting. Do not worry about the missing functions; we will create them next.

You can change the translated language by changing the “&to=de” part of the string of route variable in line 9. You can find a list of the supported languages along with their codes here.

static async Task Main(string[] args)

{

// Create a client

ComputerVisionClient client = Authenticate(ComputerVisionEndpoint, ComputerVisionsubScriptionKey);

var analisedText = await ReadFileUrl(client, READ_TEXT_URL_IMAGE);

// Output languages are defined as parameters, input language detected.

string route = "/translate?api-version=3.0&to=de";

string textToTranslate = analisedText;

object[] body = new object[] { new { Text = textToTranslate } };

var requestBody = JsonConvert.SerializeObject(body);

using (var client2 = new HttpClient())

using (var request = new HttpRequestMessage())

{

// Build the request.

request.Method = HttpMethod.Post;

request.RequestUri = new Uri(TranslatorEndpoint + route);

request.Content = new StringContent(requestBody, Encoding.UTF8, "application/json");

request.Headers.Add("Ocp-Apim-Subscription-Key", TranslatorSubscriptionKey);

request.Headers.Add("Ocp-Apim-Subscription-Region", TranslatorLocation);

// Send the request and get response.

HttpResponseMessage response = await client2.SendAsync(request).ConfigureAwait(false);

// Read response as a string.

string resultJson = await response.Content.ReadAsStringAsync();

try

{

List<Rootobject> output = JsonConvert.DeserializeObject<List<Rootobject>>(resultJson);

Console.WriteLine($"Input Text: {textToTranslate}\nPredicted Language: {output.FirstOrDefault().detectedLanguage.language}\nPredicted Score: {output.FirstOrDefault().detectedLanguage.score}\n\n");

foreach (Translation obj in output.FirstOrDefault().translations)

Console.WriteLine($"Translated Language: {obj.to}\nResult: {obj.text}\n\n");

}

catch (Exception e)

{

Console.WriteLine(e);

}

}

}
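The route string in Main decides the target language, and the Translator API accepts the to parameter more than once when you want several translations in one call. Here is a small helper for building that route. TranslatorRoute and Build are illustrative names, not part of any SDK.

```csharp
using System;
using System.Linq;

public static class TranslatorRoute
{
    // Builds the translate route for one or more target language codes.
    public static string Build(params string[] targetLanguages)
    {
        if (targetLanguages == null || targetLanguages.Length == 0)
            throw new ArgumentException("At least one target language is required.", nameof(targetLanguages));

        // Each language becomes its own "&to=" query parameter.
        string targets = string.Concat(
            targetLanguages.Select(code => "&to=" + Uri.EscapeDataString(code)));

        return "/translate?api-version=3.0" + targets;
    }

    public static void Main()
    {
        Console.WriteLine(Build("de"));       // /translate?api-version=3.0&to=de
        Console.WriteLine(Build("de", "it")); // /translate?api-version=3.0&to=de&to=it
    }
}
```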

Create the Authenticate function below your Main.

public static ComputerVisionClient Authenticate(string endpoint, string key)

{

ComputerVisionClient client =

new ComputerVisionClient(new ApiKeyServiceClientCredentials(key))

{ Endpoint = endpoint };

return client;

}

The following function extracts the text from the given picture. Place it under the Authenticate function.

public static async Task<string> ReadFileUrl(ComputerVisionClient client, string urlFile)

{

Console.WriteLine("Extracted Text:");

Console.WriteLine();

// Read text from URL

var textHeaders = await client.ReadAsync(urlFile, language: "en");

// After the request, get the operation location (operation ID)

string operationLocation = textHeaders.OperationLocation;

await Task.Delay(2000);

// Retrieve the URI where the extracted text will be stored from the Operation-Location header.

// We only need the ID and not the full URL

const int numberOfCharsInOperationId = 36;

string operationId = operationLocation.Substring(operationLocation.Length - numberOfCharsInOperationId);

// Extract the text

ReadOperationResult results;

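do

{

// Wait briefly between polls so the loop does not flood the service with requests.

await Task.Delay(1000);

results = await client.GetReadResultAsync(Guid.Parse(operationId));

}

while ((results.Status == OperationStatusCodes.Running ||

results.Status == OperationStatusCodes.NotStarted));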

// Display the found text.

Console.WriteLine();

var textUrlFileResults = results.AnalyzeResult.ReadResults;

string output = "";

foreach (ReadResult page in textUrlFileResults)

{

foreach (Line line in page.Lines)

{

Console.WriteLine(line.Text);

output += " " + line.Text;

}

}

Console.WriteLine();

return output;

}
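ReadFileUrl keeps asking the service for the result until the status is no longer Running or NotStarted. The same polling pattern, with a delay between attempts and a cap on the number of attempts, can be sketched as a generic helper. Polling and UntilAsync are hypothetical names, and the operation below is simulated rather than a real Computer Vision call.

```csharp
using System;
using System.Threading.Tasks;

public static class Polling
{
    // Calls fetch until isDone returns true, waiting between attempts.
    // Throws TimeoutException after maxAttempts unsuccessful tries.
    public static async Task<T> UntilAsync<T>(
        Func<Task<T>> fetch, Func<T, bool> isDone, TimeSpan delay, int maxAttempts)
    {
        for (int attempt = 1; attempt <= maxAttempts; attempt++)
        {
            T result = await fetch();
            if (isDone(result))
                return result;
            await Task.Delay(delay);
        }
        throw new TimeoutException($"Operation did not finish after {maxAttempts} attempts.");
    }

    public static async Task Main()
    {
        // Simulated operation that reports "running" twice, then "succeeded".
        int calls = 0;
        string status = await UntilAsync(
            () => Task.FromResult(++calls < 3 ? "running" : "succeeded"),
            s => s != "running",
            TimeSpan.FromMilliseconds(10),
            maxAttempts: 5);

        Console.WriteLine($"{status} after {calls} calls"); // succeeded after 3 calls
    }
}
```

In ReadFileUrl, fetch would wrap the GetReadResultAsync call and isDone would check that the status is neither Running nor NotStarted.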

Add these classes to deserialize your JSON. You can place them in separate files, or in the same file under the class you are working on.

public class Rootobject

{

public Detectedlanguage detectedLanguage { get; set; }

public List<Translation> translations { get; set; }

}

public class Detectedlanguage

{

public string language { get; set; }

public float score { get; set; }

}

public class Translation

{

public string text { get; set; }

public string to { get; set; }

}
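To see how these classes line up with a response, here is a standalone sketch that deserializes a sample translation response. It uses System.Text.Json instead of the Newtonsoft.Json package used in this post, so that it runs without extra packages. The sample JSON is an illustrative response, not live output.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

public class Rootobject
{
    public Detectedlanguage detectedLanguage { get; set; }
    public List<Translation> translations { get; set; }
}

public class Detectedlanguage
{
    public string language { get; set; }
    public float score { get; set; }
}

public class Translation
{
    public string text { get; set; }
    public string to { get; set; }
}

public static class DeserializeDemo
{
    // Illustrative response with one detected language and two translations.
    public const string SampleJson =
        @"[{""detectedLanguage"":{""language"":""en"",""score"":1.0},
            ""translations"":[{""text"":""Hallo Welt!"",""to"":""de""},
                              {""text"":""Salve, mondo!"",""to"":""it""}]}]";

    // Property names match the JSON keys exactly, which System.Text.Json
    // requires by default because its matching is case-sensitive.
    public static List<Rootobject> Parse(string json) =>
        JsonSerializer.Deserialize<List<Rootobject>>(json);

    public static void Main()
    {
        List<Rootobject> output = Parse(SampleJson);
        Console.WriteLine(output[0].detectedLanguage.language); // en
        foreach (Translation t in output[0].translations)
            Console.WriteLine($"{t.to}: {t.text}");
    }
}
```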

Now everything should be working as intended. Let’s try testing our new project!

Test

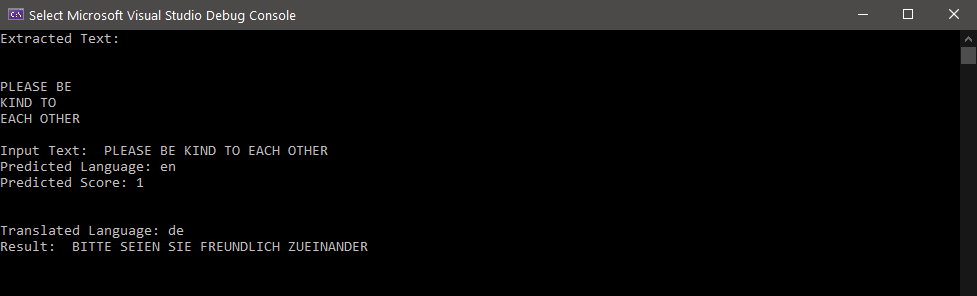

Place the URL of this picture as an input.

This is the result for German translation.

And that’s how you can translate text from a picture to any supported language you wish!

Get familiar with Azure Translator

February 25, 2021

Translator in Azure is an AI service, part of cognitive services, used for real-time text translation and detection. It is fast and easy to implement, to bring intelligence to your text processing projects.

Create

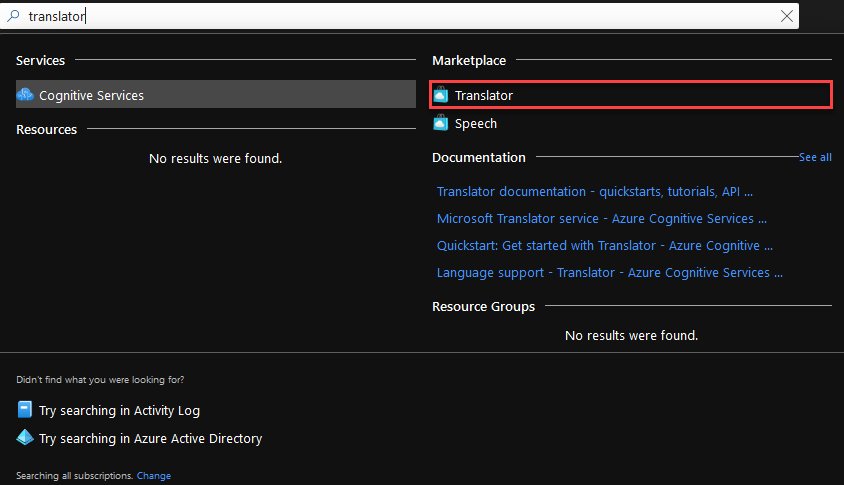

To create a translator resource go to Azure Portal and search for translator. Select Translator from the Marketplace.

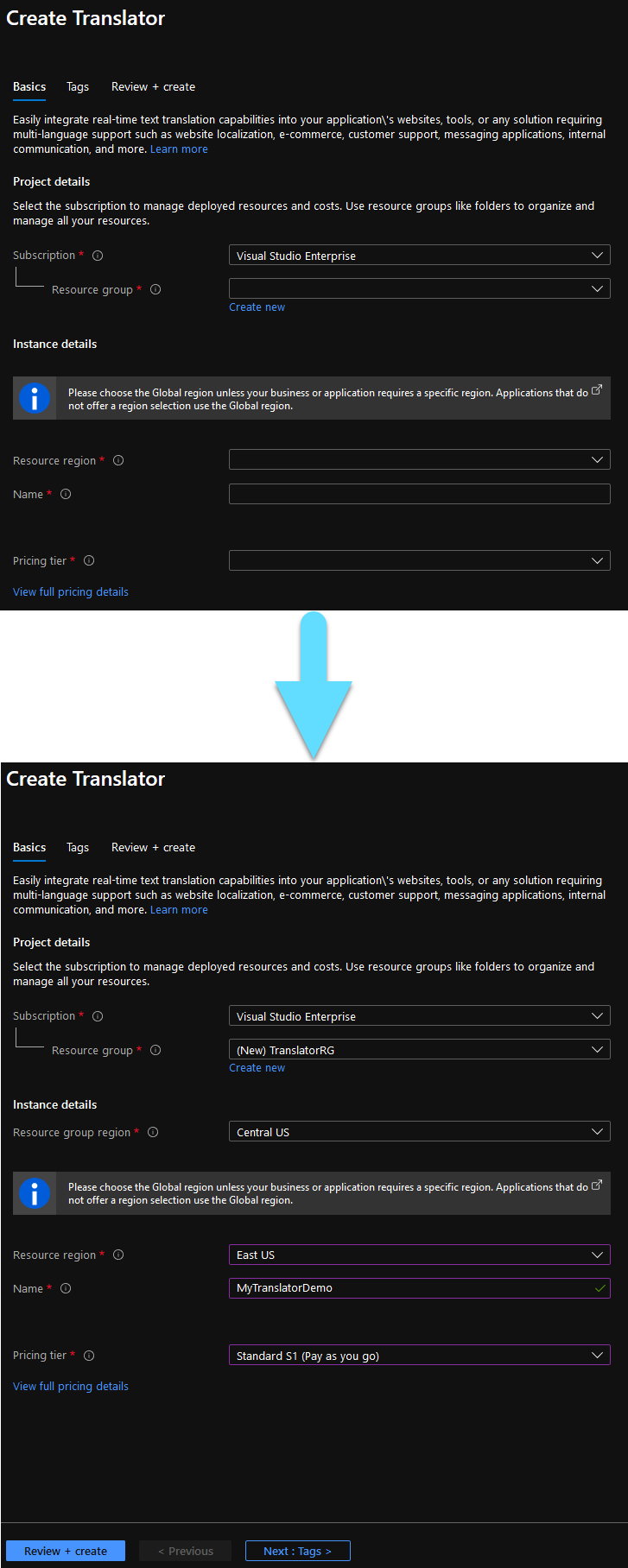

Fill the form. The fields with the ‘*’ are mandatory.

- Subscription should already be filled in with your default subscription.

- For Resource Group, you can use an existing one, or create a new one.

- For Region, choose your preferred region.

- Name is the name of your new Translator resource.

- Any Pricing tier will do for this demo.

Click Review + Create at the bottom of the page and wait for your resource to deploy.

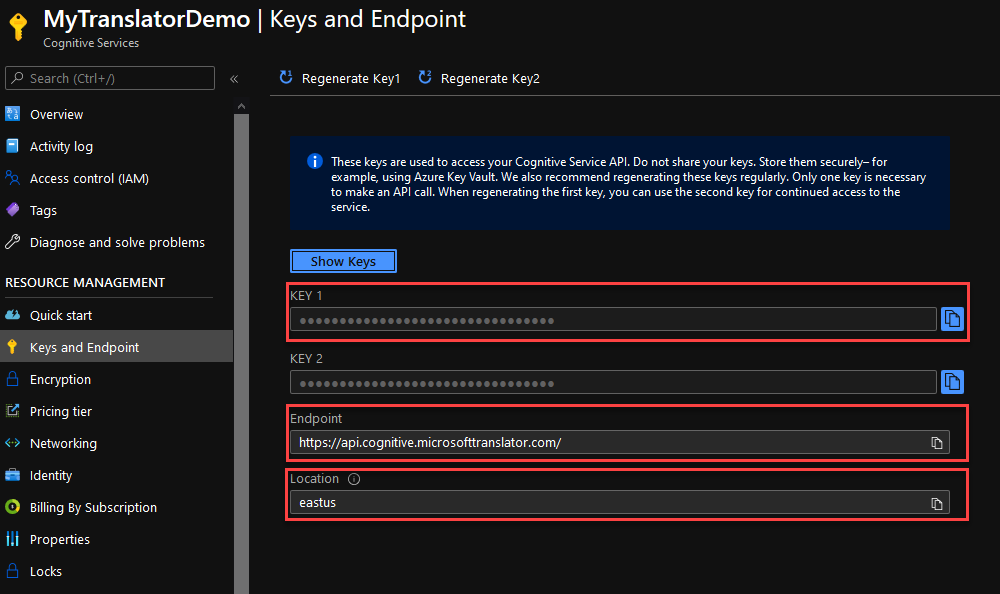

Go to your resource and navigate to the Keys and Endpoint tab at the left of your window. From here you can grab a Key, your Endpoint and your Location. You will need these later.

Implement

Open your existing project in Visual Studio. If you are not working on an existing project, simply create a C# (.NET Core) Console app.

Navigate to Project -> Manage NuGet Packages, find and install the Newtonsoft.Json package.

Open the class that you need to implement the translator in. For a new project you can use the Program.cs.

Add the using statements you see below at the top of the file.

using System;

using System.Collections.Generic;

using System.Linq;

using System.Net.Http;

using System.Text;

using System.Threading.Tasks;

using Newtonsoft.Json;

Add your resource data at the top of your class. Set subscriptionKey to one of the keys you copied earlier, endpoint to your Endpoint, and location (line 3) to your Location.

private static readonly string subscriptionKey = "73e0c30084KEY_HEREd94ae362b47";

private static readonly string endpoint = "https://api.cognitive.microsofttranslator.com/";

private static readonly string location = "eastus";

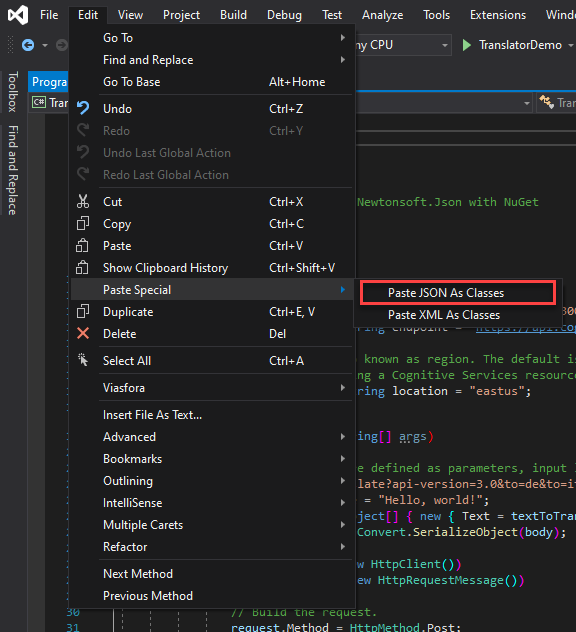

Now we need to create the classes that will hold the deserialized JSON. In Visual Studio you can generate them by copying the JSON below and going to Edit -> Paste Special -> Paste JSON As Classes. The generated classes may need some modifications before they work, so the resulting classes are included further down for you to copy directly into your project!

Here is the JSON you can copy.

[

{

"detectedLanguage": {

"language": "en",

"score": 1.0

},

"translations": [

{

"text": "Hallo Welt!",

"to": "de"

},

{

"text": "Salve, mondo!",

"to": "it"

}

]

}

]

And that is how you paste it.

If you skipped the last step, copy the following classes into your project. You can put them either in a separate file, or in Program.cs directly below Main.

public class Rootobject

{

public Detectedlanguage detectedLanguage { get; set; }

public List<Translation> translations { get; set; }

}

public class Detectedlanguage

{

public string language { get; set; }

public float score { get; set; }

}

public class Translation

{

public string text { get; set; }

public string to { get; set; }

}
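To see how these classes line up with the response, here is a minimal sketch that deserializes the sample JSON from above. It assumes the Newtonsoft.Json package is installed; the DeserializeDemo class and its Parse method are illustrative names, not part of the tutorial project.

```csharp
using System;
using System.Collections.Generic;
using Newtonsoft.Json;

public class Rootobject
{
    public Detectedlanguage detectedLanguage { get; set; }
    public List<Translation> translations { get; set; }
}

public class Detectedlanguage
{
    public string language { get; set; }
    public float score { get; set; }
}

public class Translation
{
    public string text { get; set; }
    public string to { get; set; }
}

// Hypothetical demo class, used only for this sketch.
public static class DeserializeDemo
{
    // The sample response shown earlier, stored as a verbatim string.
    public const string SampleJson = @"[{
        ""detectedLanguage"": { ""language"": ""en"", ""score"": 1.0 },
        ""translations"": [
            { ""text"": ""Hallo Welt!"", ""to"": ""de"" },
            { ""text"": ""Salve, mondo!"", ""to"": ""it"" }
        ]
    }]";

    public static List<Rootobject> Parse(string json)
    {
        // The response is a JSON array, so it maps to a list of Rootobject.
        return JsonConvert.DeserializeObject<List<Rootobject>>(json);
    }

    public static void Main()
    {
        List<Rootobject> result = Parse(SampleJson);
        Console.WriteLine(result[0].detectedLanguage.language); // en
        foreach (Translation t in result[0].translations)
        {
            Console.WriteLine($"{t.to}: {t.text}"); // de: Hallo Welt! / it: Salve, mondo!
        }
    }
}
```

The property names are lower case on purpose: they match the keys in the JSON one to one, which is what Paste JSON As Classes generates.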

Here is the code that does all the magic! Paste the following code inside your Main. Because the code uses await, Main must be declared as static async Task Main(string[] args). In the route string at line 2 you can list all the languages you wish to translate your text to: for each one, append &to= followed by its language code. You can find all available language codes here. For now, we will translate the text to German and Italian.

The textToTranslate string at line 3 is the text you wish to translate. The service automatically detects which language it is written in before translating it, so you can try inputting text in languages other than English if you so desire.

Lines 22 - 32 are where we deserialize the JSON. We also use a try statement to catch any errors that might appear.

// Output languages are defined as parameters, input language detected.

string route = "/translate?api-version=3.0&to=de&to=it";

string textToTranslate = "Hello, world!";

object[] body = new object[] { new { Text = textToTranslate } };

var requestBody = JsonConvert.SerializeObject(body);

using (var client = new HttpClient())

using (var request = new HttpRequestMessage())

{

// Build the request.

request.Method = HttpMethod.Post;

request.RequestUri = new Uri(endpoint + route);

request.Content = new StringContent(requestBody, Encoding.UTF8, "application/json");

request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

request.Headers.Add("Ocp-Apim-Subscription-Region", location);

// Send the request and get response.

HttpResponseMessage response = await client.SendAsync(request).ConfigureAwait(false);

// Read response as a string.

string resultJson = await response.Content.ReadAsStringAsync();

try

{

List<Rootobject> output = JsonConvert.DeserializeObject<List<Rootobject>>(resultJson);

Console.WriteLine($"Input Text: {textToTranslate}\nPredicted Language: {output.FirstOrDefault().detectedLanguage.language}\nPredicted Score: {output.FirstOrDefault().detectedLanguage.score}\n\n");

foreach (Translation obj in output.FirstOrDefault().translations)

Console.WriteLine($"Translated Language: {obj.to}\nResult: {obj.text}\n\n");

}

catch(Exception e)

{

Console.WriteLine(e);

}

}
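If you would rather not edit the route string by hand, it can also be built from a list of language codes. The sketch below is one possible way to do it; RouteBuilder and BuildRoute are hypothetical names introduced for illustration and are not part of any SDK.

```csharp
using System;
using System.Linq;

// Hypothetical helper class, used only for this sketch.
public static class RouteBuilder
{
    // Appends one "&to=" parameter per language code to the translate route.
    public static string BuildRoute(params string[] languageCodes)
    {
        if (languageCodes == null || languageCodes.Length == 0)
        {
            throw new ArgumentException("At least one target language is required.");
        }

        return "/translate?api-version=3.0"
            + string.Concat(languageCodes.Select(code => "&to=" + Uri.EscapeDataString(code)));
    }

    public static void Main()
    {
        // Prints: /translate?api-version=3.0&to=de&to=it
        Console.WriteLine(BuildRoute("de", "it"));
    }
}
```

In the code above you could then write string route = RouteBuilder.BuildRoute("de", "it"); and add or remove languages without touching the rest of the request.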

If you want to experiment more with text translator try some of the code segments from here.

Test

Now simply run the program to see how well the translator works. You can try changing the input text to test it. Here is the output of the text we had above.

Now you have a ready-to-go translator for your next project!

Sentiment Analysis using Azure Text Analytics

February 13, 2021

Text Analytics is an easy-to-learn and fast-to-implement AI service, part of Azure Cognitive Services, that uncovers insights such as sentiment, entities, relations and key phrases in unstructured text.

Create

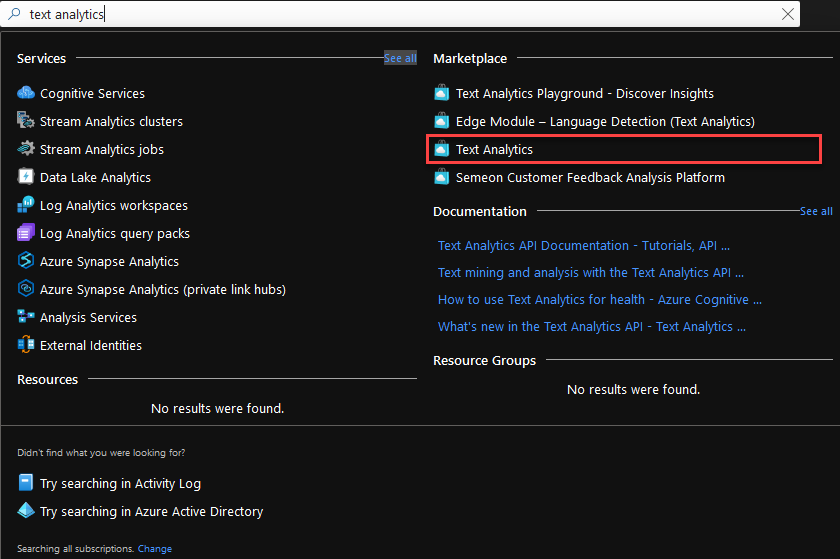

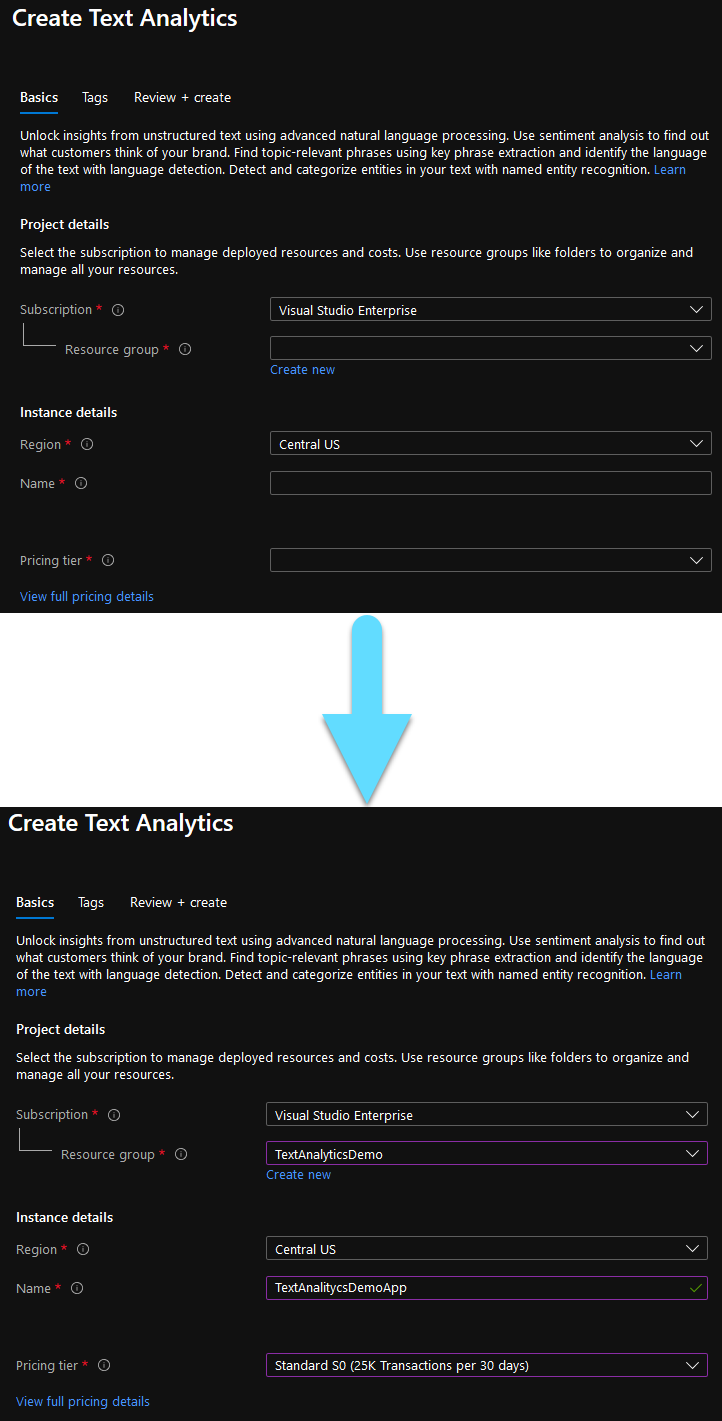

To create a Text Analytics resource, go to the Azure Portal and search for Text Analytics. Select Text Analytics from the Marketplace.

Fill in the form. The fields marked with ‘*’ are mandatory.

- Subscription should already be filled in with your default subscription.

- For Resource Group, you can use an existing one, or create a new one.

- You can leave Region with the pre-selected region.

- Name is the name of your new Text Analytics resource.

- Any Pricing tier will do for this demo.

Continue to the last step and click Create.

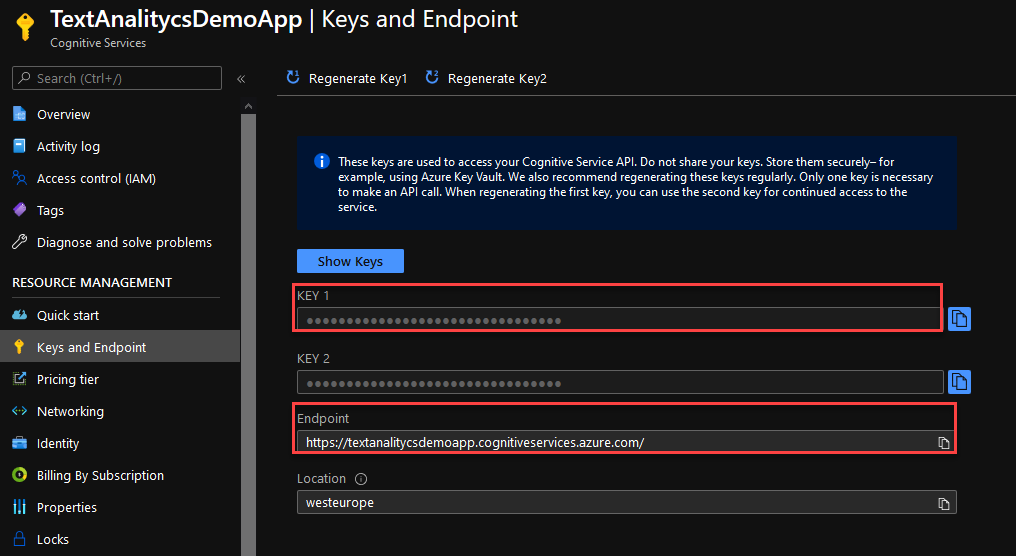

Go to your resource and navigate to the Keys and Endpoint tab at the left of your window. From here you can grab a Key and your Endpoint. You will need these later.

Implement

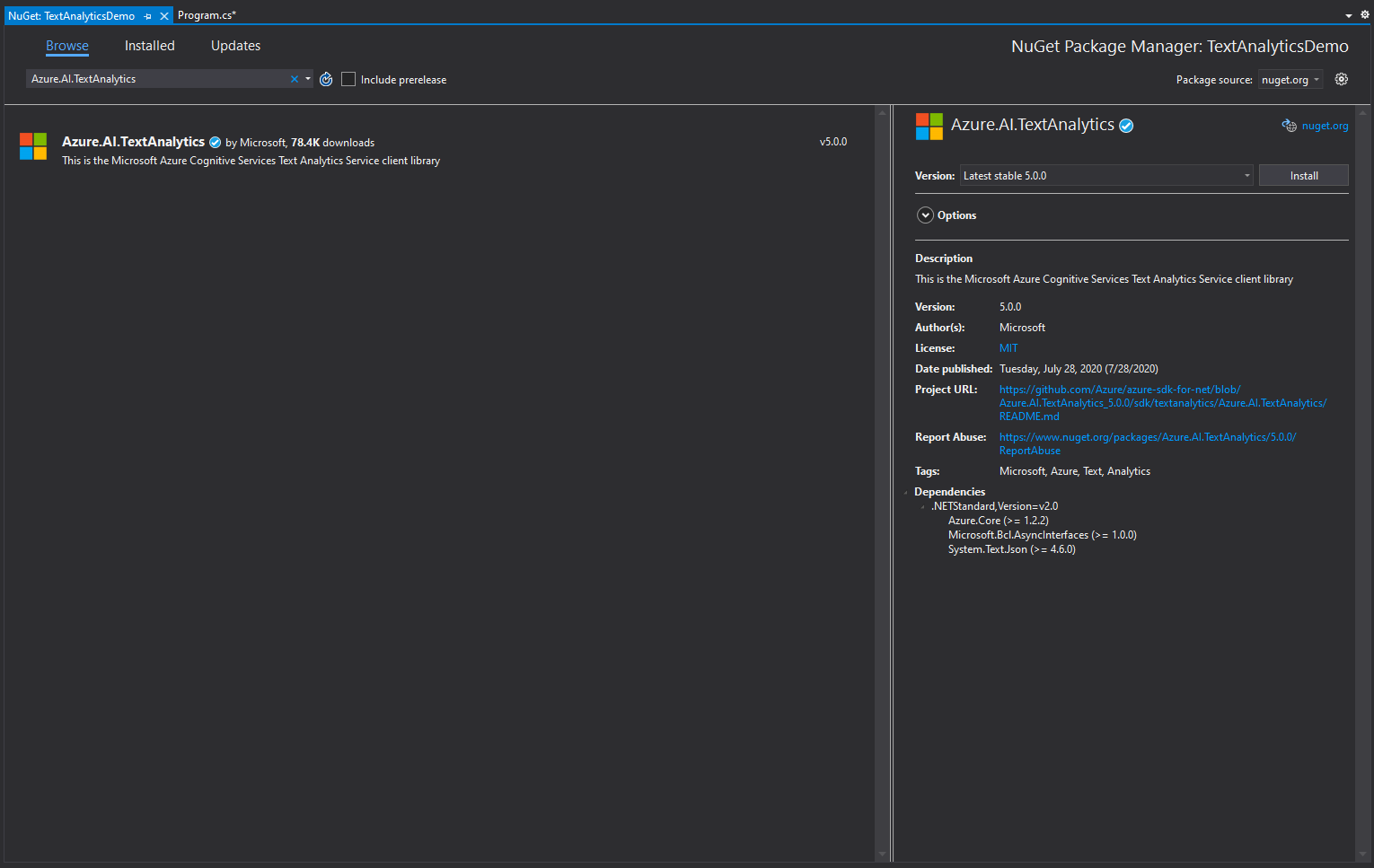

Open your existing project in Visual Studio. If you are not working on an existing project, simply create a C# (.NET Core) Console app.

Navigate to Project -> Manage NuGet Packages, find and install the Azure.AI.TextAnalytics package.

Open the class that you need to implement the sentiment analyser in. For a new project you can use the Program.cs.

Add the using statements you see below at the top of the file.

using System;

using Azure;

using Azure.AI.TextAnalytics;

Add your credentials and endpoint at the top of your class. Pass one of the keys you copied earlier to AzureKeyCredential, and your Endpoint to the Uri in endpoint.

private static readonly AzureKeyCredential credentials = new AzureKeyCredential("30da76KEY_HERE5ef63b3993f03");

private static readonly Uri endpoint = new Uri("https://textanalitycsdemoapp.cognitiveservices.azure.com/");

Inside your Main put the code that appears below. Do not worry about the missing function, we will create it in the next step.

static void Main(string[] args)

{

var client = new TextAnalyticsClient(endpoint, credentials);

SentimentAnalysisExample(client);

}

SentimentAnalysisExample is the function that analyses the text and determines whether the sentiment is positive or negative. In the inputText string at line 3 you can put the document you want to analyse. Every sentence is analysed separately, and an overall result for the whole document is shown as well.

static void SentimentAnalysisExample(TextAnalyticsClient client)

{

string inputText = "I am feeling happy. I am sick.";

DocumentSentiment documentSentiment = client.AnalyzeSentiment(inputText);

Console.WriteLine($"Document sentiment: {documentSentiment.Sentiment}\n");

foreach (var sentence in documentSentiment.Sentences)

{

Console.WriteLine($"\tText: \"{sentence.Text}\"");

Console.WriteLine($"\tSentence sentiment: {sentence.Sentiment}");

Console.WriteLine($"\tPositive score: {sentence.ConfidenceScores.Positive:0.00}");

Console.WriteLine($"\tNegative score: {sentence.ConfidenceScores.Negative:0.00}");

Console.WriteLine($"\tNeutral score: {sentence.ConfidenceScores.Neutral:0.00}\n");

}

}

If you wish to add extra functionality to your text analyser you can add functions from here and call them from Main. You can try Opinion mining, Language detection, Named Entity Recognition and many more.

Test

Now simply run the program to see how well the sentiment analysis works. You can try changing the text to test it. Here is the output of the text we had above.

And that’s how you implement sentiment analysis into your project in a few easy steps!

Introduction to Computer Vision using Azure

February 02, 2021

Computer Vision is an AI service, part of Azure Cognitive Services, that analyzes content in images and video.

Create

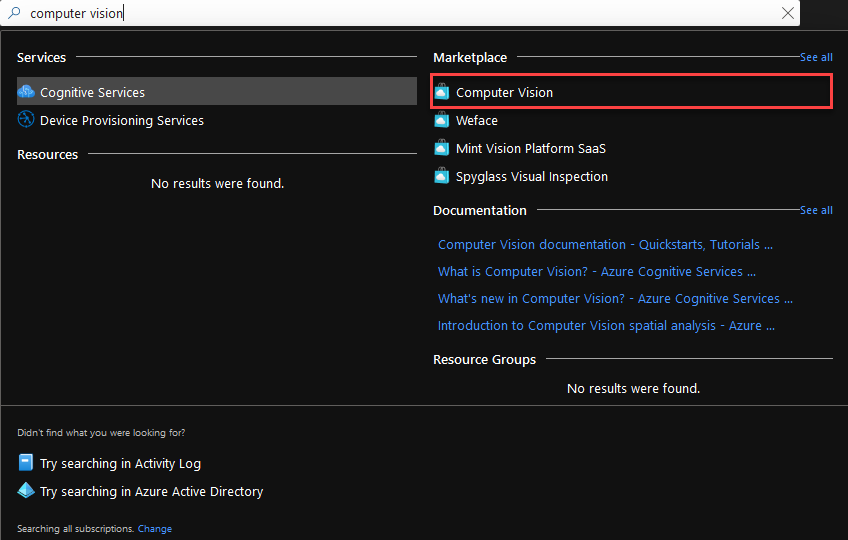

Go to Azure Portal and search for Computer Vision. Select Computer Vision from the Marketplace.

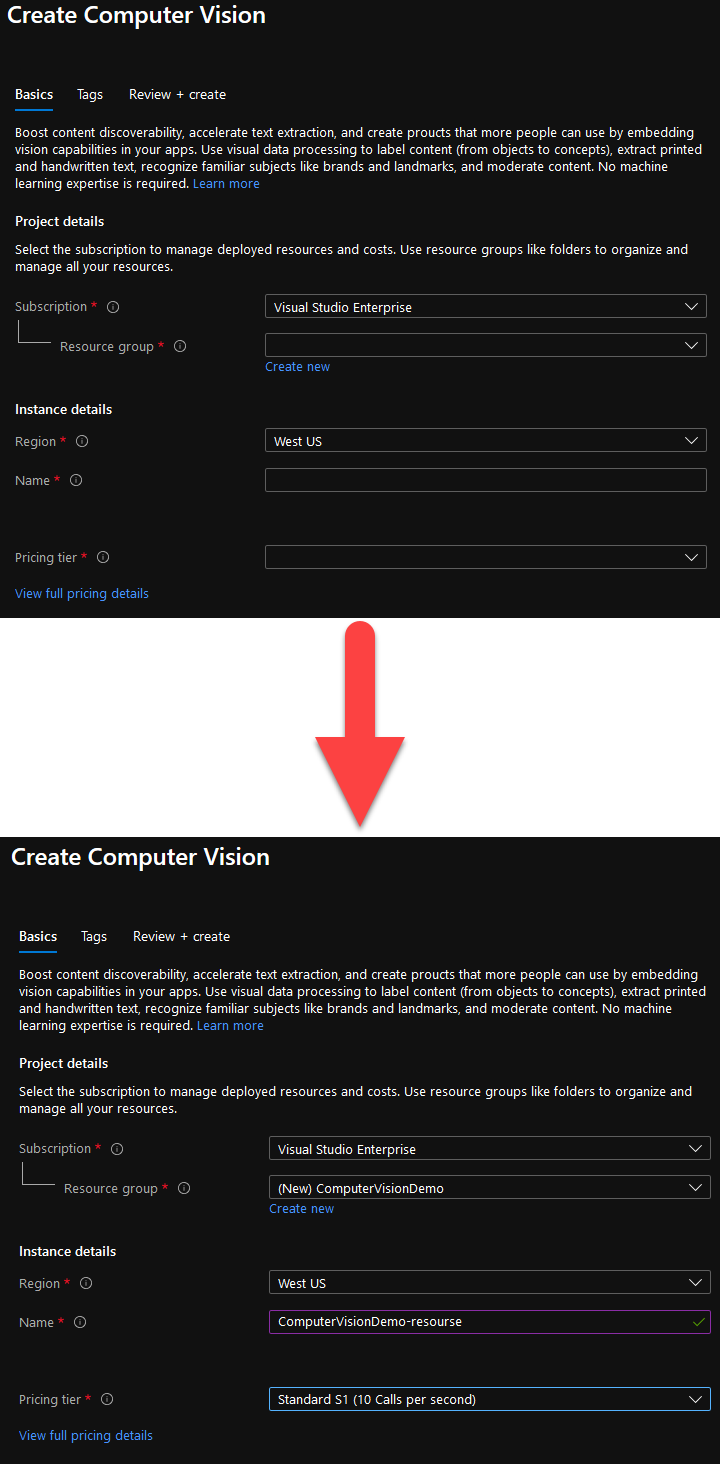

Fill in the form. The fields marked with ‘*’ are mandatory.

- Subscription should already be filled in with your default subscription.

- For Resource Group, you can use an existing one, or create a new one.

- You can leave Region with the pre-selected region.

- Name is the name of your new Computer Vision resource.

- Any Pricing tier will do for this demo.

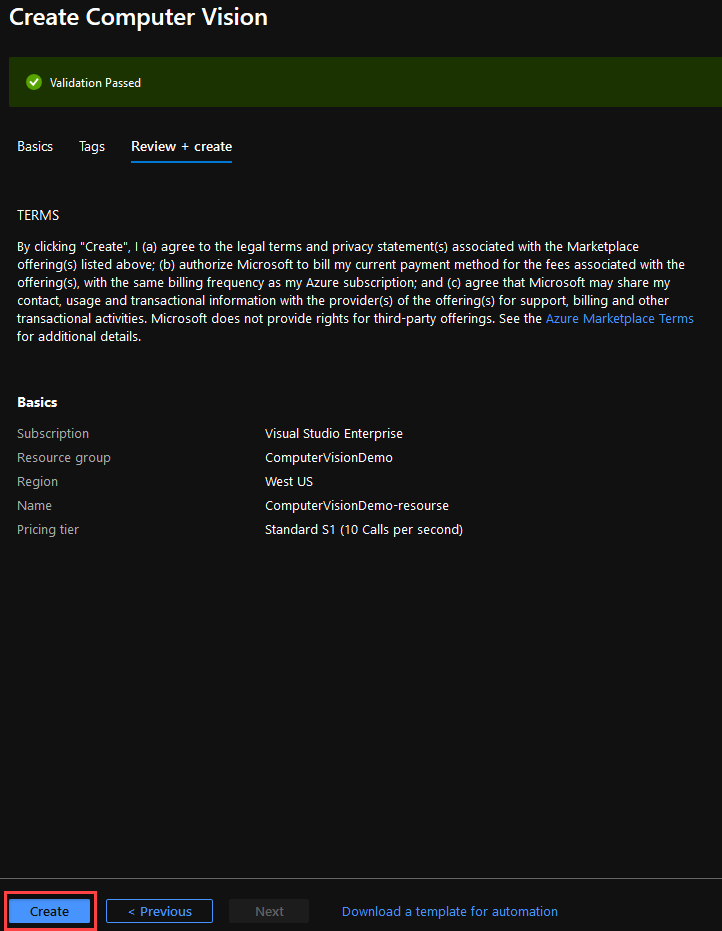

Continue to the last step and click Create.

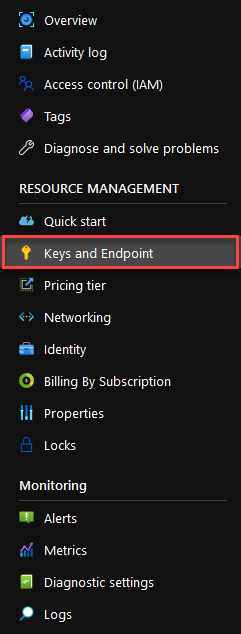

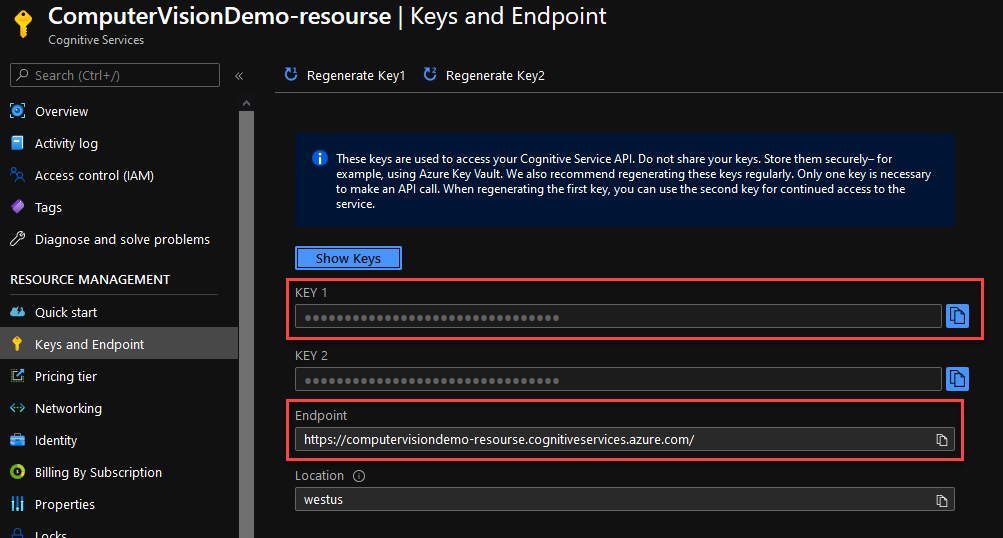

Go to your resource and navigate to the Keys and Endpoint tab at the left of your window.

From here you can grab a Key and your Endpoint. You will need these later.

Implement

Open your existing project in Visual Studio. If you are not working on an existing project, simply create a C# (.NET Core) Console app.

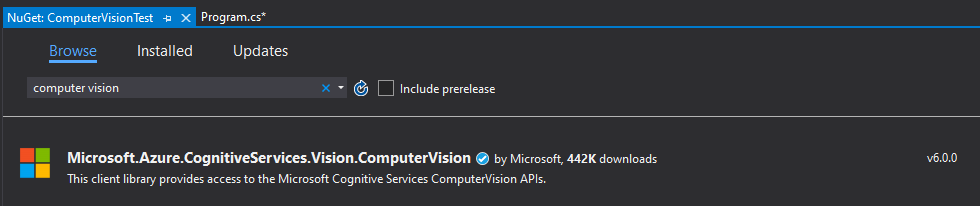

Navigate to Project -> Manage NuGet Packages, find and install the Microsoft.Azure.CognitiveServices.Vision.ComputerVision package.

Open the class that you need to implement the image analyser in. For a new project you can use the Program.cs.

Add the using statements you see below at the top of the file.

using Microsoft.Azure.CognitiveServices.Vision.ComputerVision;

using Microsoft.Azure.CognitiveServices.Vision.ComputerVision.Models;

using System;

using System.Collections.Generic;

using System.Threading.Tasks;

Add these strings at the top of your class. The subscriptionKey should be the Key and endpoint is the Endpoint you found in the last step. At line 6 the ANALYZE_URL_IMAGE constant must be populated with the URL of the image you want to analyse. You can grab the URL of the images in the Test section of this post.

// Add your Computer Vision subscription key and endpoint

static string subscriptionKey = "b5c6KEY_HERE81400602c7";

static string endpoint = "https://computervisiondemo-resourse.cognitiveservices.azure.com/";

// URL image used for analyzing an image

private const string ANALYZE_URL_IMAGE = "";

Inside your Main put the code that appears below. Do not worry about the missing functions, we will create them in the next step.

// Create a client

ComputerVisionClient client = Authenticate(endpoint, subscriptionKey);

// Analyze an image to get features and other properties.

AnalyzeImageUrl(client, ANALYZE_URL_IMAGE).Wait();

Add the Authenticate function to create a connection between your project and your Computer Vision resource.

public static ComputerVisionClient Authenticate(string endpoint, string key)

{

ComputerVisionClient client =

new ComputerVisionClient(new ApiKeyServiceClientCredentials(key))

{ Endpoint = endpoint };

return client;

}

AnalyzeImageUrl is the function that analyses the image and outputs the results. In this example it only outputs the summary of the image, but much more functionality is supported.

public static async Task AnalyzeImageUrl(ComputerVisionClient client, string imageUrl)

{

// Creating a list that defines the features to be extracted from the image.

List<VisualFeatureTypes?> features = new List<VisualFeatureTypes?>()

{

VisualFeatureTypes.Categories, VisualFeatureTypes.Description,

VisualFeatureTypes.Faces, VisualFeatureTypes.ImageType,

VisualFeatureTypes.Tags, VisualFeatureTypes.Adult,

VisualFeatureTypes.Color, VisualFeatureTypes.Brands,

VisualFeatureTypes.Objects

};

// Analyze the URL image

ImageAnalysis results = await client.AnalyzeImageAsync(imageUrl, features);

// Summarizes the image content.

Console.WriteLine("Summary:");

foreach (var caption in results.Description.Captions)

{

Console.WriteLine($"{caption.Text} with confidence {caption.Confidence}");

}

Console.WriteLine();

}

If you wish to add extra functionality to your image analyser you can add code segments into the AnalyzeImageUrl function from here. You can also take a look here to see more examples of what is supported with the Computer Vision service.
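As one example of such a segment, the sketch below lists the tags found in the image together with their confidence. It assumes the same Microsoft.Azure.CognitiveServices.Vision.ComputerVision package is installed and that VisualFeatureTypes.Tags was requested, as in the features list above. TagPrinter and DescribeTags are hypothetical names introduced for illustration.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Azure.CognitiveServices.Vision.ComputerVision.Models;

// Hypothetical helper class, used only for this sketch.
public static class TagPrinter
{
    // Builds one line of text per tag in the analysis result.
    public static List<string> DescribeTags(ImageAnalysis results)
    {
        var lines = new List<string>();

        // Tags is only populated when VisualFeatureTypes.Tags was requested.
        if (results.Tags == null)
        {
            return lines;
        }

        foreach (ImageTag tag in results.Tags)
        {
            lines.Add($"{tag.Name} with confidence {tag.Confidence}");
        }

        return lines;
    }
}
```

Inside AnalyzeImageUrl, after the summary is printed, you could then loop over TagPrinter.DescribeTags(results) and write each line to the console.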

Test

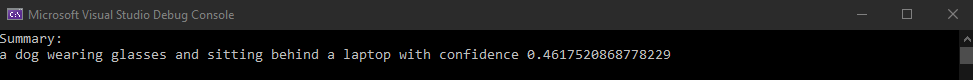

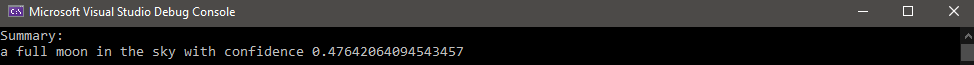

To test, simply assign the URL of one of the images below to the ANALYZE_URL_IMAGE constant and run the project.

Example 1

Example 2

As you can see, it works pretty well! This is how you can utilise the power of Computer Vision to make an image analyser. It is also extremely easy to give this functionality to a bot, so that users can show photos to the bot and receive information about them.

Proactive Messages in Microsoft Bot Framework

January 21, 2021

Proactive messaging gives your bot the ability to notify the user with messages that can be written and modified by the developer.

Create

In this demo we will be using a core bot created in Azure using Azure Bot Service. To create one you can visit this post.

Open the project in Visual Studio and create a new class named NotifyController.cs. Copy and paste the following code. Change the string in line 59 to the message you would like to appear in your bot.

using Microsoft.AspNetCore.Mvc;

using Microsoft.Bot.Builder;

using Microsoft.Bot.Builder.Integration.AspNet.Core;

using Microsoft.Bot.Schema;

using Microsoft.BotBuilderSamples.Dialogs;

using Microsoft.Extensions.Configuration;

using System;

using System.Collections.Concurrent;

using System.Net;

using System.Threading;

using System.Threading.Tasks;

namespace CoreBot.Controllers

{

[Route("api/notify")]

[ApiController]

public class NotifyController : ControllerBase

{

private readonly IBotFrameworkHttpAdapter _adapter;

private readonly string _appId;

private readonly ConcurrentDictionary<string, ConversationReference> _conversationReferences;

public NotifyController(IBotFrameworkHttpAdapter adapter, IConfiguration configuration, ConcurrentDictionary<string, ConversationReference> conversationReferences)

{

_adapter = adapter;

_conversationReferences = conversationReferences;

_appId = configuration["MicrosoftAppId"];

// If the channel is the Emulator, and authentication is not in use,

// the AppId will be null. We generate a random AppId for this case only.

// This is not required for production, since the AppId will have a value.

if (string.IsNullOrEmpty(_appId))

{

_appId = Guid.NewGuid().ToString(); //if no AppId, use a random Guid

}

}

public async Task<IActionResult> Get()

{

foreach (var conversationReference in _conversationReferences.Values)

{

await ((BotAdapter)_adapter).ContinueConversationAsync(_appId, conversationReference, BotCallback, default);

}

// Let the caller know proactive messages have been sent

return new ContentResult()

{

Content = "<html><body><h1>Proactive messages have been sent.</h1></body></html>",

ContentType = "text/html",

StatusCode = (int)HttpStatusCode.OK,

};

}

private async Task BotCallback(ITurnContext turnContext, CancellationToken cancellationToken)

{

// If you encounter permission-related errors when sending this message, see

// https://aka.ms/BotTrustServiceUrl

await turnContext.SendActivityAsync("This is a proactive message!");

}

}

}

Implement

Open the MainDialog.cs class and add the following using statement.

using System.Collections.Concurrent;

Create the ConcurrentDictionary at the top of the file.